How to Perform Geo Targeted Testing

Current testing practices were established before cross-device behavior was prevalent, making it necessary to reconsider how we do A/B testing.

When a user enters an A/B test on one device, then visits again on another, they may well be assigned a different variation of the test. This is what’s called a “mixed experience,” and mixed experiences hurt A/B test results.

This situation may appear obvious, but it is seldom considered in testing. When we consider what it means, and how prevalent cross-device usage is across a user’s experience, we begin to realize how challenging (or even fruitless) it is to do A/B testing the way many organizations do it today.
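To put a rough number on how often a mixed experience can occur, here is a minimal sketch. It assumes each device, browser or fresh cookie jar (a "context") is assigned a variation independently and with equal odds, which is how cookie-based "coin flip" assignment typically behaves. The function name and parameters are illustrative, not taken from any test tool.

```python
def mixed_experience_probability(contexts: int, variations: int = 2) -> float:
    """Chance that one user sees more than one variation.

    Assumes every context (device, browser, cleared cookie jar) is assigned
    independently and uniformly at random among the variations.
    """
    if contexts < 1 or variations < 1:
        raise ValueError("contexts and variations must be positive")
    # The first context fixes a variation; each later context matches it
    # with probability 1 / variations.
    all_same = (1.0 / variations) ** (contexts - 1)
    return 1.0 - all_same


for n in (1, 2, 3):
    print(n, "contexts:", mixed_experience_probability(n))
```

Under these assumptions, a visitor who uses two devices in a two-variation test has an even chance of seeing both versions, and the odds get worse with every additional device or browser.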

Causes of Mixed Experiences

As mentioned, changing devices during a user’s experience with a site is a major cause of users having a mixed experience, but there are more. Here is a short list of the reasons users have a mixed experience.

- Switching devices

- Switching browsers on the same device

- Clearing cookies for a site or a browser

- Purchase of a new device

- A test is turned on between visits

- A test is turned off between visits

The last two causes (a test being turned on or off between visits) should never happen; to understand why, read “The ‘Test Window’ Methodology for Conversion Optimization Testing.”

How Bad is it?

We have more than 100 A/B tests running at any time, so trust us when we say this is a pretty big problem. In fact, if you neither handle it nor factor it heavily into your analysis, testing may not be worth doing at all unless you are REALLY patient and analytical with the results.

In my book, “Stop Wasting Your Time When Testing eCommerce Sites: How to Get the Most Accurate Tested Results,” this issue is referred to as the “elephant in the room.” However, under certain common test conditions, this elephant can be handled well.

The challenge is figuring out how bad “mixed experience” really is for your site. There are no real metrics available, unfortunately, and it really depends on your site, which is what matters most after all.

What Google Says

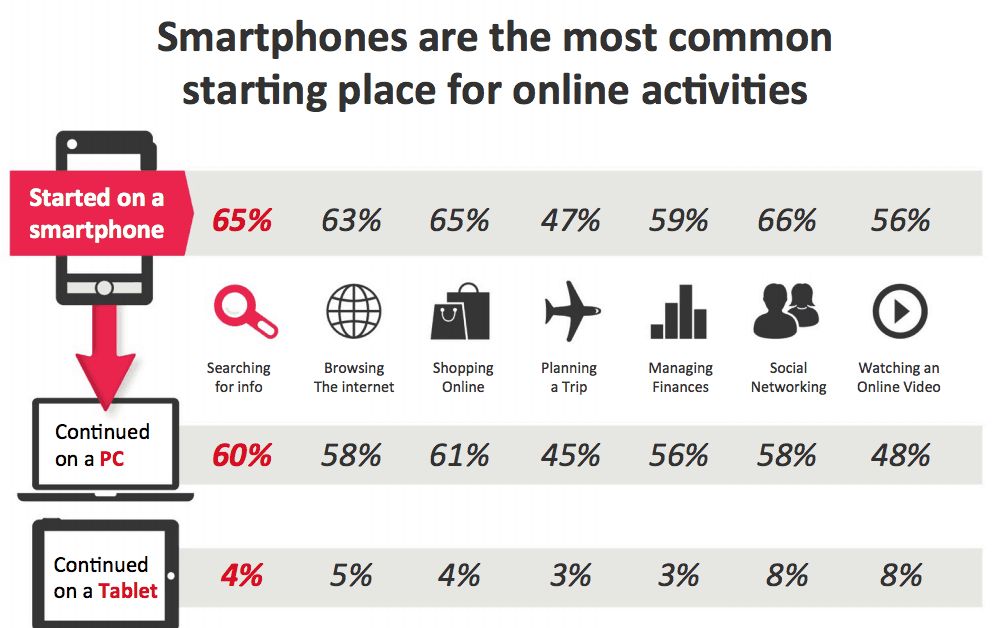

Even way back in 2012, Google’s “New Multi-Screen World” Report (still the most recent comprehensive study on the subject) states that 65 percent of all eCommerce experiences start on a mobile device, with 61 percent of those people switching over to a laptop (and another 4 percent switching over to tablet).

Now think about how that could affect an A/B test being conducted across devices, such as a price, an offer or a promotion test.

How to Gauge the Issue on Your Site

Every eCommerce site has this problem; only the degree of impact varies. If you are like most eCommerce site owners, you are busy and so are your development resources. To free up a bit of time, I suggest you stop testing and making “improvements” to your site, and start working on how to better understand, track and test in the “multi-screen world.” This is, after all, the year of mobile (for the seventh year in a row), so it’s about time.

Start With Polls

Right now, the only way to gauge cross-device usage of your site is to ask your users. To do this, you will want to set up two polls:

- Pre-Purchase Device Poll

  - The purpose of this poll is to find out how many “new” visitors to your laptop site are actually not new at all, but rather are returning to the site after being on another device.

  - This poll will greet your users when they arrive on the site and ask them, “Have you visited us on another device or browser recently?” with the answer options being “yes” or “no.”

  - Your poll should track people from this point on and integrate with your site analytics so you can see their subsequent behavior (this data is worth gold, so roll up your sleeves and start planning).

- Post-Purchase Device Poll

  - The purpose of this second poll is to find out what percentage of purchasers are cross-device/browser users (23 percent is the average we find across the eCommerce sites we have polled recently).

  - Show this poll on the confirmation page of the site.

  - Ask the users a similar question: “Did today’s purchase involve more than one device or browser?”

- Compare Polls

  - Now that you have gauged the percentage of people who possibly arrive at the site following use of another device, and the percentage of purchases that follow cross-device/browser experiences, you have an idea of:

    - How many people may receive a “mixed experience”

    - What the success rate for people with cross-device/browser experiences is (without adding mixed experience to the mix, pun intended)

So now (finally) you have a reasonably good view into cross-device usage on your site.

Leverage Analytics

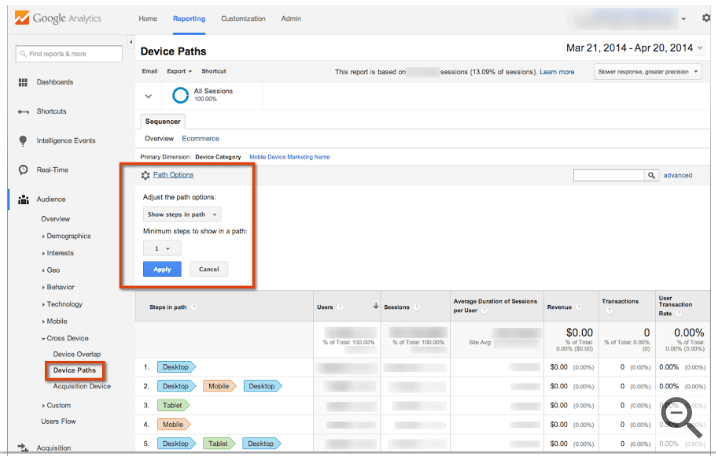

By now, you are likely using Google Analytics, which allows you to track users across devices if the user is logged in. The report you want is called the Device Paths Report and will provide you with the previous devices used up until a conversion. This report does not come out of the box (yes, it will take work to set it up, and time to collect the data, but it will be worth it).

While this will only track a dedicated segment of users, it will give you an idea of how these people’s experience with your eCommerce site traverses across devices, leading you to insights about your core group of online customers.

When to Change Your Testing

If your polls or Analytics tell you that more than 10 percent of people are purchasing across devices, then you need to change the way you test. Yes, that is most people reading this. Sorry.
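As a minimal sketch of that check, the snippet below turns post-purchase poll answers into a cross-device share and compares it with the 10 percent rule of thumb. The response counts are made up for illustration, and the function names are hypothetical.

```python
CROSS_DEVICE_THRESHOLD = 0.10  # the 10 percent rule of thumb from the text


def cross_device_share(yes_answers: int, no_answers: int) -> float:
    """Share of poll respondents who said more than one device or browser was involved."""
    total = yes_answers + no_answers
    if total == 0:
        raise ValueError("no poll responses collected yet")
    return yes_answers / total


def should_change_testing(share: float) -> bool:
    """True when the share is above the threshold ("more than 10 percent")."""
    return share > CROSS_DEVICE_THRESHOLD


# Hypothetical post-purchase poll results.
share = cross_device_share(yes_answers=230, no_answers=770)
print(f"Cross-device purchasers: {share:.0%}")
print("Change the way you test:", should_change_testing(share))
```

The same `cross_device_share` calculation applies to the pre-purchase poll; only the question being answered changes.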

The fact is, if you keep testing the way you are today, you can’t really know how a user (across devices and browsers) is responding to a variation because you don’t know if they are consistently getting that variation or having a mixed experience. It’s a “cluster,” and one that requires changing the way you test.

How to Best Address the “Mixed Experience”

The good news is you can fix this and be one step closer to testing properly. (To get completely out of the woods, consider reading this.)

To effectively test across devices and browsers, you just have to think differently about testing. Instead of “tossing a coin” and letting a visitor into A (heads) or B (tails) variations, which is the way you likely do it today, why not divide your audience into two equal groups another way? Geographically.

Mixed Experience vs. Geo Targeted Testing

With a mixed experience, you are changing site treatments on users as they change devices and browsers, and this is happening to such a degree that one could argue that one should not have any faith in the test results. Geo Testing, on the other hand, appears to lack the scientific prowess of algorithms, calculations, scientific theory, etc.

By making an argument for Geo Testing over traditional A/B testing, it may appear we are thumbing our noses at the entire testing industry… So when I say this, please remember this is only the perspective of someone who witnesses more than a thousand tests a year, and indeed, I AM THUMBING MY NOSE AT THE ENTIRE TESTING INDUSTRY.

I have all but lost faith in A/B testing as it is now done in most cases. Unless the cross-device and cross-browser realities are handled, running a “simple” A/B test on any site these days is suspect. Due to the limits of cookie technology, we will just never know how much variance is added to the test by not handling it. Consider that even if the issue were just 1/10th of what it really is, the statistical calculations around confidence of a “test winner” would be completely shot for the majority of tests run.

So, while I know Geo Targeted Testing is not bulletproof from a statistical theory perspective either, it is often (not always, as covered above) the lesser of the two evils and will often help you arrive at a more accurate test result sooner.

Here’s the Downside to Geo Targeted Testing

- It takes setup, and your test tools don’t make it easy or even indicate it’s possible

- It may require a lot of analytics work

- It just might not be best for you (if you are a marginal case)

Now That We Have That Out of the Way, Let Me Tell You How to Proceed

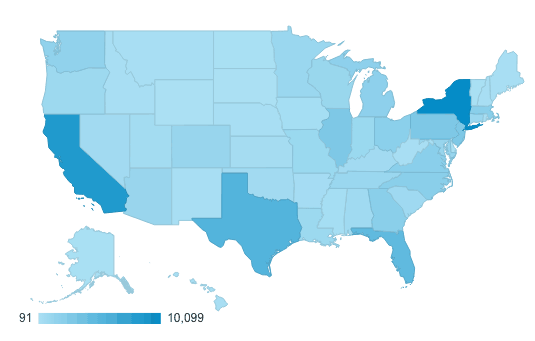

Go into Analytics, drill into the country you sell the most in (e.g., the U.S.), and look at your user conversion rate and average order value (AOV) by state.

But Texas is Not Like Minnesota

Yes, Texas has a lot more people, different weather and subtle differences in cultural norms, so you will need to do a bit of work to define, for an A/B test, a couple of regions that are comparable, meaning they closely match on the following:

- User conversion rate

- Average order value

- Other key metrics for you, such as days to purchase

Creating Test Regions

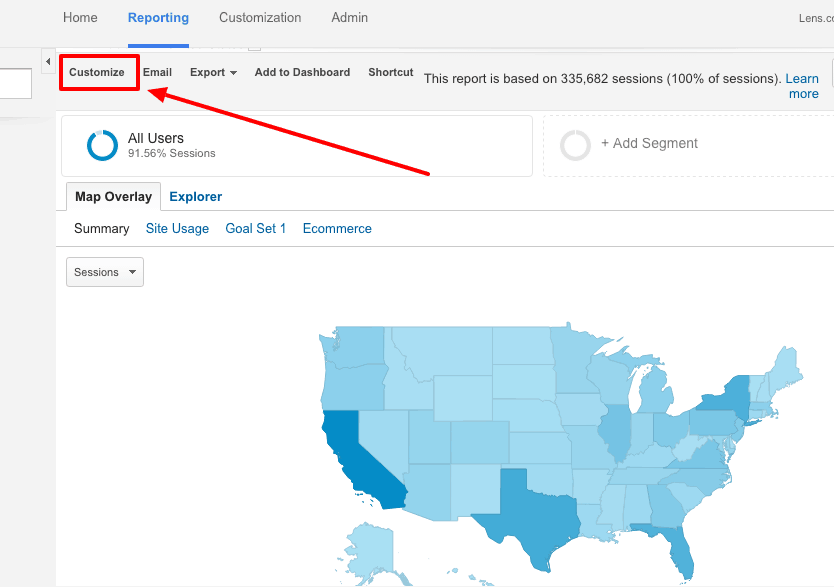

To get started, you will need to work with the Audience>Geo>Location report in Google Analytics to get at least two comparable regions. Take this common report and customize it to have AOV, which is a key component on most sites for getting “apples to apples” regions.

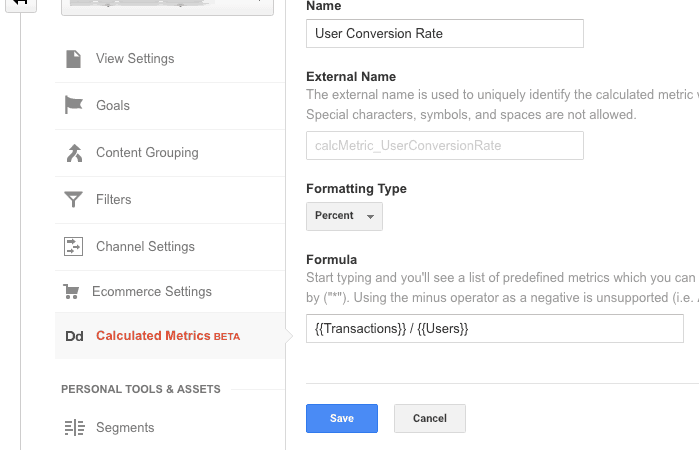

You want your regions to be the same for user conversion rate, which is a calculated metric you will have to set up in GA as shown below.

You will also want to make sure that revenue per visitor (RPV) and AOV are relatively similar, but don’t expect them to be perfect, as both of these metrics are subject to more variation. If you are judging your test off of conversion rate, you will just want to check RPV and AOV to make sure nothing is suspicious with their results.
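The comparison described above can be sketched in a few lines once the state-level numbers are exported from Analytics. This is only an illustration under stated assumptions: user conversion rate is taken to be transactions divided by users, the figures are invented, and the tolerances (2 percent for conversion rate, 10 percent for AOV and RPV) are placeholders you would tune for your own site.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class RegionStats:
    """Totals for one state or one group of states (figures are hypothetical)."""
    users: int
    transactions: int
    revenue: float

    @property
    def user_conversion_rate(self) -> float:
        # Assumed definition: transactions per user.
        return self.transactions / self.users

    @property
    def aov(self) -> float:
        return self.revenue / self.transactions

    @property
    def rpv(self) -> float:
        return self.revenue / self.users


def combine(states: Iterable[RegionStats]) -> RegionStats:
    """Roll several states up into one candidate test region."""
    states = list(states)
    return RegionStats(
        users=sum(s.users for s in states),
        transactions=sum(s.transactions for s in states),
        revenue=sum(s.revenue for s in states),
    )


def relative_gap(a: float, b: float) -> float:
    """Absolute difference as a fraction of the average of the two values."""
    return abs(a - b) / ((a + b) / 2)


def comparable(a: RegionStats, b: RegionStats,
               rate_tolerance: float = 0.02,
               money_tolerance: float = 0.10) -> bool:
    """Strict on conversion rate, looser on AOV and RPV, as the text suggests."""
    return (
        relative_gap(a.user_conversion_rate, b.user_conversion_rate) <= rate_tolerance
        and relative_gap(a.aov, b.aov) <= money_tolerance
        and relative_gap(a.rpv, b.rpv) <= money_tolerance
    )


region_a = combine([RegionStats(40000, 1200, 96000.0), RegionStats(20000, 600, 49200.0)])
region_b = combine([RegionStats(50000, 1510, 119290.0)])
print("Comparable regions:", comparable(region_a, region_b))
```

Trying different groupings of states through `combine` until `comparable` passes is one way to arrive at "apples to apples" regions.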

Running the Test

Now that you have two similar regions set up, you are ready to use them. This means you will go into your test tool and assign the control to one region and the Variation to the other region (in the case of simple A/B tests).
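Conceptually, the assignment replaces the coin flip with a fixed lookup. The sketch below assumes you already have the visitor's state code from whatever geolocation your stack provides; the state groupings and function name are hypothetical and are not taken from any particular test tool.

```python
from typing import Optional

# Hypothetical comparable regions; substitute the groupings you derived
# from your own analytics.
CONTROL_STATES = frozenset({"OH", "MI", "WI"})
VARIATION_STATES = frozenset({"PA", "IN", "MN"})


def assign_variation(state_code: Optional[str]) -> Optional[str]:
    """Return "control", "variation", or None when the visitor is outside the test.

    The same location always maps to the same experience, regardless of
    device, browser or cookies.
    """
    if not state_code:
        return None  # unknown location: keep the visitor out of the test
    code = state_code.strip().upper()
    if code in CONTROL_STATES:
        return "control"
    if code in VARIATION_STATES:
        return "variation"
    return None


print(assign_variation("oh"))
print(assign_variation("MN"))
print(assign_variation("TX"))
```

Visitors from states in neither region are excluded here rather than randomized, so they do not dilute either group.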

Next, you will want to make sure you run your test properly.

Test Analysis

In analyzing your test, you look at it the way you look at any test, only the traffic for the variations will have been allocated by Geo region instead of by “coin flipping” logic that does not consider today’s cross-device and cross-browser realities.

In Summary

Yes, this is a new way to run and analyze a test. But considering half of the people getting this far reading the post have now likely switched devices or browsers, hopefully you see the reason for it and recognize whether your site would benefit from testing this way.

We live in a world with constant device swapping, so testing across devices with cookies and/or IP technology no longer makes sense. While Geo Targeted Testing is not perfect, for many sites, it is a better way to test.

This is terrific. Do you have any recommendations on how I can implement geotargeted testing using Google Optimize? Or another testing platform that you would recommend to do this? Thanks.

Thanks for reading, PA. This kind of test is a bit tricky to set up, which is why it is still very uncommon. Most test tools do not facilitate this out of the box: they are designed to randomize groups, not push people into pre-assigned groups based on your criteria, geo location included.

We are able to do this using custom JS code that we build for every test we run and our own Geo Location script we keep on our server. If you are not working with a testing company or are in a less sophisticated organization that doesn’t have resources to do this, you likely will have to hack together your results as outlined below.

Most A/B testing tools do allow geo targeting for a split test (i.e., only allowing people from certain locations into the test); they just don’t allow you to define the audience for each individual version based on region. If you are using one of the standard tools like VWO or Optimizely, you likely will have to set up 2 separate “tests” and set up your two groups as the geo targets for the 2 tests. You will then “test” only 1 version in each, meaning you’re basically using the tool to serve content to 2 different regions. With Google Optimize now doing some personalization as well, you might also be able to leverage that to serve the two different versions rather than setting up 2 tests.

In the end, regardless of whether you set up multiple “tests” or use personalization targeting, you’ll have to manually do a lift and confidence calculation to see how different the results are for your two regions instead of leveraging the built-in confidence calculations from your tool. There are plenty of these calculators out there for free, just do a quick search for “AB test confidence calculator”.
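For readers who prefer to run the numbers themselves, here is a minimal sketch of that manual calculation using a standard two-proportion z-test. It is one common way such calculators work, not necessarily the formula your tool uses, and the visitor and conversion counts are hypothetical.

```python
import math
from typing import Tuple


def lift_and_confidence(users_a: int, conversions_a: int,
                        users_b: int, conversions_b: int) -> Tuple[float, float, float]:
    """Return (relative lift of B over A, z-score, two-sided confidence)."""
    rate_a = conversions_a / users_a
    rate_b = conversions_b / users_b
    lift = (rate_b - rate_a) / rate_a

    # Pooled standard error under the assumption that both regions
    # share the same underlying conversion rate.
    pooled = (conversions_a + conversions_b) / (users_a + users_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (rate_b - rate_a) / std_err

    # Two-sided p-value from the normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return lift, z, 1 - p_value


# Hypothetical results: region A saw the control, region B the variation.
lift, z, confidence = lift_and_confidence(10000, 300, 10000, 360)
print(f"Lift: {lift:.1%}, z: {z:.2f}, confidence: {confidence:.1%}")
```

Keep in mind that this treats the two regions as if they were randomized groups, so the result is only as trustworthy as the regions are comparable.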

Hope this helps, and happy testing!