*This post was updated for accuracy in November 2020.*

In most cases, no: your internal search results pages should not be indexed, whether by Google or any other search engine. In fact, indexing your internal search results can harm not just your website but your users’ experience as well.

Fortunately, there are ways to keep internal search results out of the index and to prevent search engine crawlers from wasting time on pages that won’t help your customers or your business.

What is an Internal Site Search URL?

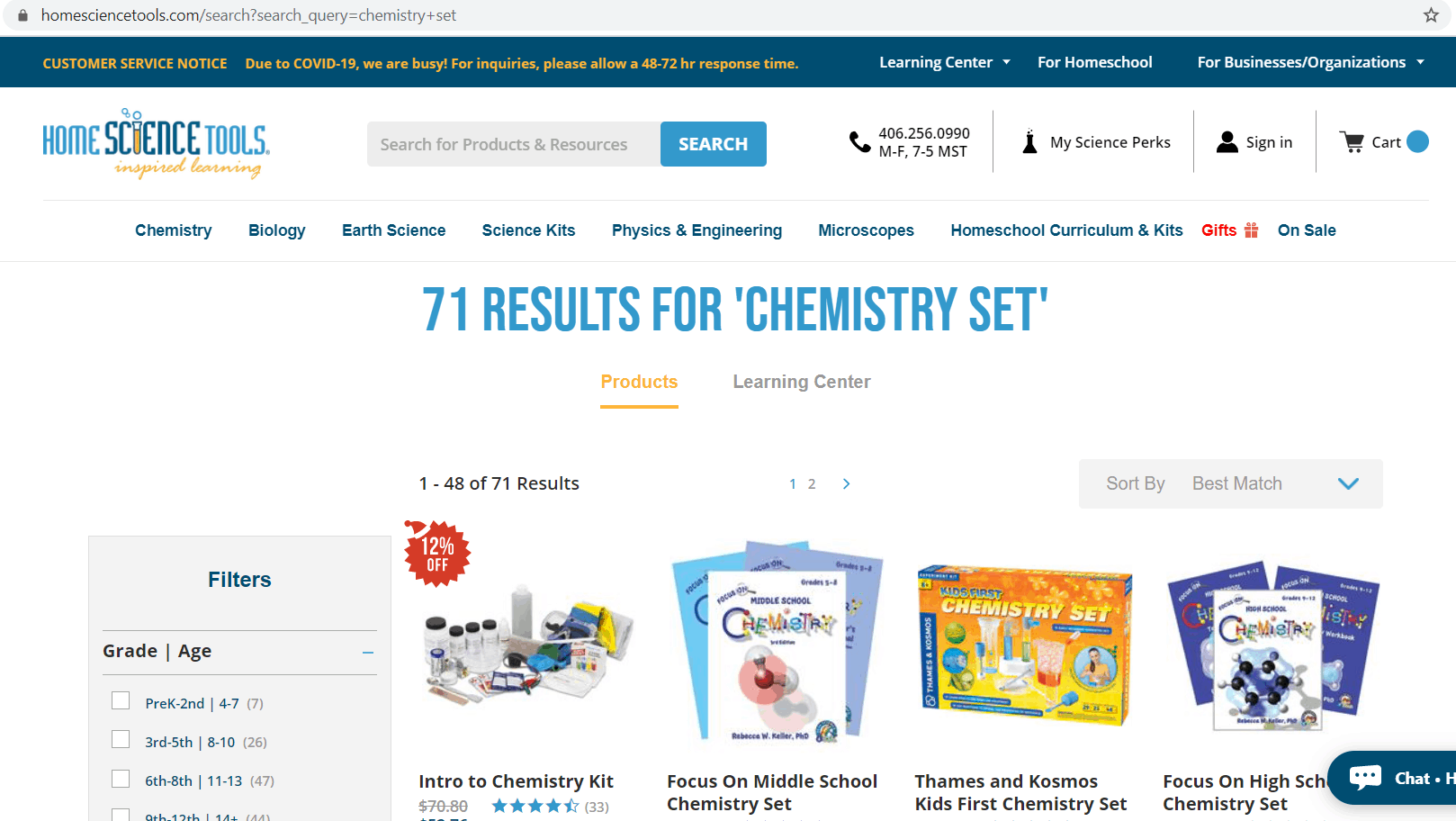

An internal site search URL is the page a visitor lands on after using your site’s internal search function. For example, a visitor on HomeScienceTools.com who searches the site for a chemistry set lands on a page of relevant results, and that page has its own unique URL.

Almost every website has a search bar. It helps your users find the products they’re looking for without leaving your site for a larger search engine like Google.

This functionality makes your site easy to browse, and your business is more likely to benefit when users stay on your site.

What are Indexed Pages & Why Are They Important?

When a web page is indexed, it has been crawled and analyzed by a web crawler such as Googlebot or Bingbot and then added to the search engine’s index. A page must be indexed before it can appear on a search engine results page. A website owner can request that a page be indexed, or a search engine bot can discover the page naturally through inbound or internal links.

Indexed pages are important for several reasons, but one matters most: only indexed pages can appear in organic search results and drive organic traffic to your site. By indexing a page, a search engine signals that it considers the content potentially relevant and valuable enough to show to users.

What Does Google Say About Indexing Site Search Results?

So, knowing the value of an indexed page, why shouldn’t search results pages be indexed?

Matt Cutts clearly explained Google’s stance on this exact topic in 2007 when he referenced the following from Google’s Quality Guidelines page:

“Use robots.txt to prevent crawling of search results pages or other auto-generated pages that don’t add much value for users coming from search engines.”

The 2017 version of the guidelines also points to possible gray areas around Automatically Generated Content and pages with Little or No Original Content.

In an April 2018 Webmaster Central Hangout, John Mueller called attention to the fact that large numbers of internal site search URLs can easily eat up the limited crawl budget Google allocates to a website, which, “from a crawling point of view…is probably not that optimal.”

Google’s guidelines on Doorway Pages also touch on the topic:

“Here are some examples of doorways:

- “Having multiple domain names or pages targeted at specific regions or cities that funnel users to one page

- “Pages generated to funnel visitors into the actual usable or relevant portion of your site(s)

- “Substantially similar pages that are closer to search results than a clearly defined, browseable hierarchy”

Taken together, these sources suggest it’s usually not ideal to have these pages indexed. There are exceptions to everything, however, so each site deserves a closer look.

Reasons Not to Index Internal Search Pages

It can seem contradictory to remove internal search results from the index when some of them may already rank well in search engine results. But there are consequences behind the scenes that could hurt your site’s performance if you keep allowing these internal site search URLs to be indexed.

1. Crawl Budget and Index Bloat

There’s a lot of internet out there for search engines to crawl. To keep things moving, search engine bots allocate a certain amount of crawl time (known as “crawl budget”) to each site.

While your site’s crawl budget can vary from day to day, it’s fairly stable overall. It’s determined by factors including the size and health of your site.

To help your site perform its best in organic search rankings, you need to make good use of your existing crawl budget. That starts with deciding which pages are important enough for a search engine to crawl and index. You don’t want crawlers wasting their time on large numbers of thin pages that may contain duplicate content, which can lead to index bloat.

Index bloat occurs when Google indexes hundreds or thousands of low-quality pages that don’t give your visitors useful content. You’ll notice index bloat when the number of indexed pages on your site increases sharply (check the Coverage report in Google Search Console). These low-quality pages can slowly erode your overall site quality.

Deindexing your internal search result pages allows the crawl bots to focus on what’s really important — the quality content pages that you’ve enhanced and optimized for user readability and conversion.

2. User Experience

Website visitors want to find what they’re looking for as efficiently as possible. If a user lands on a search results page instead of a page built to include helpful content, they may be left with questions. Ideally, the user would land on a page that’s relevant, helpful, and easy to navigate, rather than a bare list of products.

That’s also assuming that the internal search works well enough to pull up relevant products in the first place. Again, there are certainly exceptions to this, but oftentimes websites have a page they’ve created that they would rather a user land on instead of an internal search URL.

How to Evaluate Your eCommerce Internal Search URLs for Deindexing

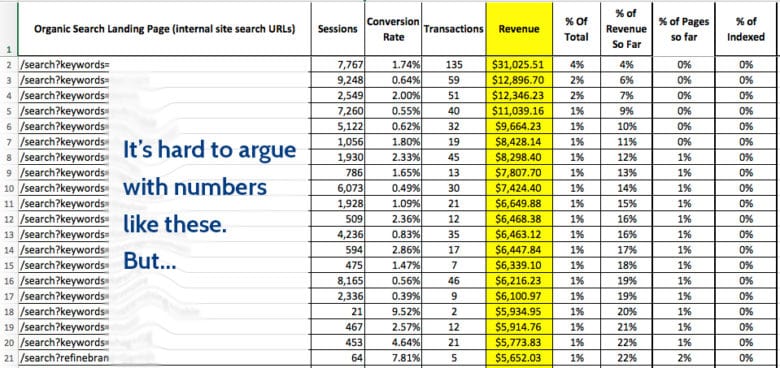

“But those pages make me money!” you may be thinking. Like many eCommerce sites, you may check analytics to find that those internal site search results pages account for a big chunk of revenue.

You’d be fired for blocking these URLs in the robots.txt file, right?

We’ve heard this more than once, and most of the time further analysis uncovered a more nuanced truth. Take the following steps to decide whether or not you should really deindex these pages.

Calculate the Percentage of Internal Search URL Revenue

First, confirm what percentage of the site’s total revenue comes from people who land on internal site search pages directly from search engine results. Here’s how:

- In Google Analytics, use last-click attribution (i.e., the user searched on a search engine and clicked your result) to drill into Organic Search Traffic by Landing Page

- Apply a filter to see only internal site search URLs

- Note the total revenue coming from them. Is it even 1% of total revenue from organic search? 2%? If it’s any higher than that, you may have a content quality problem on product pages and/or a crawlability problem on standard taxonomy/category pages.
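If you export that report, the calculation can be sketched in a few lines of Python. This is a minimal sketch, assuming the export is a list of (landing page URL, revenue) rows from Organic Search with last-click attribution. The `/catalogsearch/` path prefix is an assumption modeled on the `inurl:catalogsearch` pattern used in this post; swap in whatever pattern your platform uses. The URLs and revenue figures are made up for illustration.

```python
from urllib.parse import urlparse

# Assumed internal search path prefix (Magento-style); adjust for your platform.
SEARCH_PATH_PREFIX = "/catalogsearch/"


def is_internal_search(url: str) -> bool:
    """True if the landing page path looks like an internal site search URL."""
    return urlparse(url).path.startswith(SEARCH_PATH_PREFIX)


def internal_search_revenue_share(rows) -> float:
    """Percentage of organic search revenue that landed on internal search URLs.

    rows: iterable of (landing_page_url, revenue) pairs from an
    organic search, last-click landing page export.
    """
    total = 0.0
    from_search = 0.0
    for url, revenue in rows:
        total += revenue
        if is_internal_search(url):
            from_search += revenue
    # Guard against an empty or zero-revenue export.
    return 100.0 * from_search / total if total else 0.0


# Hypothetical export rows, for illustration only.
rows = [
    ("https://www.example.com/chemistry-sets/", 9000.0),
    ("https://www.example.com/catalogsearch/result/?q=chemistry+set", 150.0),
    ("https://www.example.com/microscopes/", 850.0),
]
print(f"{internal_search_revenue_share(rows):.1f}%")  # 1.5%
```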

Now that you know how much of the site’s revenue comes from organic search traffic landing on these internal site search URLs, you know the absolute worst-case scenario: the most you could lose by deindexing them.

The thing is, once internal site search URLs are blocked, you’ll often find that organic search traffic and revenue increase for many of the other pages on the site. Not only that, but those pages will probably convert better.

For example:

You have about 1,000 internal search results indexed (e.g., as estimated with site:domain.com inurl:catalogsearch).

You have 100 internal site search result URLs receiving at least 1 Transaction as a Landing Page from Organic Search.

The other 900 indexed site search URLs either show 0 Transactions as a Landing Page from Organic Search or do not show up in Analytics at all.

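Now calculate what share of your indexed internal search result URLs actually drive revenue. For context, you can also compare the number of indexed internal search URLs with every URL Google has indexed for your site (site:yourdomain.com). In this example we find that: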

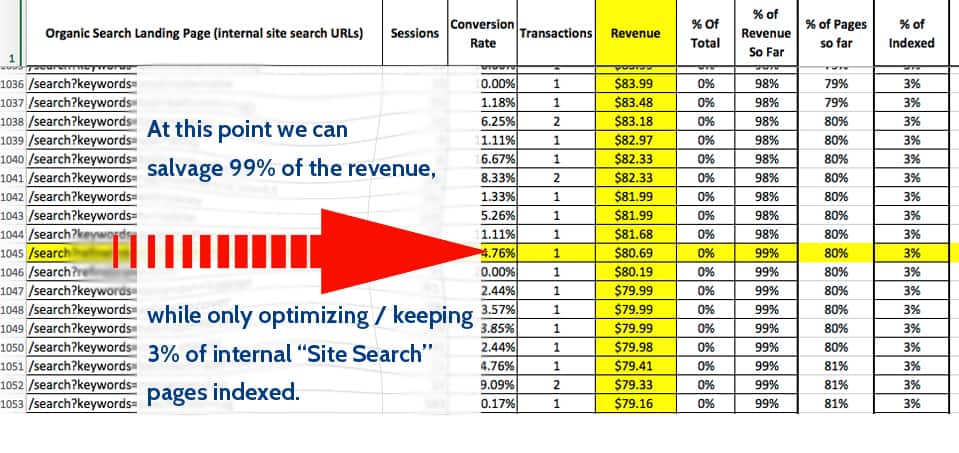

10% of Internal Site Search URLs account for 100% of the revenue from organic search into this page type.

Although the example is simple, the results are similar to what we find in the real world. Often, around 80%–99% of the traffic and revenue goes to just 1%–20% of the indexed internal search result pages.
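To check whether your own site shows this kind of concentration, one approach is to sort per-URL revenue from highest to lowest and find the smallest share of URLs that reaches a target share of revenue. This is a minimal Python sketch: the function name and the `target` parameter are illustrative, and the sample data simply mirrors the 1,000-URL example (100 URLs with revenue, 900 without), with an assumed $50 per earning URL.

```python
from itertools import accumulate


def share_of_urls_for_revenue(revenues, target=0.8):
    """Fraction of URLs (highest revenue first) needed to reach `target` of total revenue."""
    ordered = sorted(revenues, reverse=True)
    if not ordered:
        return 0.0
    # Running totals; the last entry is the total, computed the same way.
    cumulative = list(accumulate(ordered))
    total = cumulative[-1]
    if total <= 0:
        return 0.0
    for i, running in enumerate(cumulative, start=1):
        if running >= target * total:
            return i / len(ordered)
    return 1.0


# Hypothetical data mirroring the example: 100 earning URLs, 900 with no revenue.
revenues = [50.0] * 100 + [0.0] * 900
print(share_of_urls_for_revenue(revenues, target=1.0))  # 0.1
print(share_of_urls_for_revenue(revenues, target=0.5))  # 0.05
```

Computing the total from the same running sum keeps the 100% case exact, so floating-point rounding can’t push the answer past the last earning URL.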

This means you can build a custom solution that scales: handle the small set of high-earning URLs individually and deindex the rest. You don’t have to lose that revenue!

Once you’ve got the data, you have options — deindex, leave indexed or leave a portion of the URLs indexed.
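With per-URL revenue in hand, a simple split separates the few URLs worth handling individually (redirecting or leaving indexed) from the long tail to deindex. This Python sketch is illustrative only: the `min_revenue` threshold and the sample URLs are assumptions, and where you set the cutoff is a business decision.

```python
def split_by_revenue(revenue_by_url: dict, min_revenue: float = 0.0):
    """Split internal search URLs into two sorted lists: those earning more than
    min_revenue (handle individually) and the rest (candidates to deindex)."""
    handle_individually = sorted(u for u, r in revenue_by_url.items() if r > min_revenue)
    deindex = sorted(u for u, r in revenue_by_url.items() if r <= min_revenue)
    return handle_individually, deindex


# Hypothetical organic revenue per internal search URL.
revenue_by_url = {
    "/catalogsearch/result/?q=chemistry+set": 1200.0,
    "/catalogsearch/result/?q=beaker": 0.0,
    "/catalogsearch/result/?q=microscope": 300.0,
}
keep, drop = split_by_revenue(revenue_by_url)
print(keep)  # ['/catalogsearch/result/?q=chemistry+set', '/catalogsearch/result/?q=microscope']
print(drop)  # ['/catalogsearch/result/?q=beaker']
```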

How to Deindex Internal Site Search URLs

The technical details of removing these pages from the index depend on other issues, such as crawl budget and the navigation paths crawlers use to discover products.

For example, if you’re going to redirect some of these URLs or leave them live, you’ll have to “Allow” them in the robots.txt file, or at least wait until Google has seen the redirects before blocking them along with the others.
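As an illustration, here’s a hypothetical robots.txt that blocks the internal search path but still allows one search URL assumed to be redirected, checked with Python’s standard `urllib.robotparser`. One caveat: Python’s parser applies the first matching rule, while Google applies the most specific (longest) matching rule. Listing the narrow `Allow` line before the broader `Disallow` gives the same answer under both. The paths and the example.com domain are assumptions.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block internal search results, except one search URL
# that now 301-redirects and must stay crawlable so Google can see the redirect.
ROBOTS_TXT = """\
User-agent: *
Allow: /catalogsearch/result/?q=chemistry+set
Disallow: /catalogsearch/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in (
    "https://www.example.com/catalogsearch/result/?q=chemistry+set",  # allowed (redirected)
    "https://www.example.com/catalogsearch/result/?q=beaker",         # blocked
    "https://www.example.com/chemistry-sets/",                        # no rule matches: allowed
):
    print(parser.can_fetch("Googlebot", url), url)
```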

Here are the common methods for taking internal site search URLs out of the index:

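- Noindex via Meta Robots Tag: This first method is perhaps the simplest and most effective way to remove internal search results from the index. Add a “noindex” meta robots tag to this page type. It looks like this: <meta name="robots" content="noindex" />. Keep in mind that search engines must be able to crawl a page to see this tag, so don’t block these URLs in robots.txt until they’ve dropped out of the index.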

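- Disallow: Add a Disallow line to the robots.txt file to block search engines from crawling the internal search pages. Blocking crawling doesn’t guarantee removal, though: Google can still index a disallowed URL it finds through links, even without crawling its content.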

- Redirect: 301 redirect internal search pages that get organic traffic and revenue to “real” category pages within the site taxonomy, or to existing curated, optimized product grid pages, so the redirected URLs drop out of the index in favor of those pages. Then noindex the rest.
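The redirect-then-noindex approach might look something like this in application code. This is a framework-agnostic Python sketch, not a drop-in implementation: the `REDIRECTS` map, the `/catalogsearch/` prefix, and the `route` function are hypothetical. In practice, your platform or web server would emit the status code, the `Location` header, and the meta tag.

```python
from typing import Optional, Tuple

# Hypothetical map of revenue-earning search URLs to curated category pages.
REDIRECTS = {
    "/catalogsearch/result/?q=chemistry+set": "/chemistry-sets/",
}
SEARCH_PREFIX = "/catalogsearch/"
NOINDEX_TAG = '<meta name="robots" content="noindex" />'


def route(path_and_query: str) -> Tuple[int, Optional[str], str]:
    """Return (status code, redirect location or None, extra <head> HTML)."""
    if path_and_query in REDIRECTS:
        # 301 the search URLs that earn organic revenue to their "real" page.
        return 301, REDIRECTS[path_and_query], ""
    if path_and_query.startswith(SEARCH_PREFIX):
        # Serve every other search results page with a noindex tag.
        return 200, None, NOINDEX_TAG
    # All other pages are left untouched.
    return 200, None, ""


print(route("/catalogsearch/result/?q=chemistry+set"))
print(route("/catalogsearch/result/?q=beaker"))
print(route("/chemistry-sets/"))
```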

Based on your research, you may also decide to keep them indexed in the unlikely event they’re getting more organic traffic, revenue, and/or conversions than existing product or category pages. Or you may land on a custom solution that keeps a handful of high-performing site search URLs indexed while getting the rest out.

Now What?

Just because you’re getting traffic and revenue out of these low-quality pages that Google has advised webmasters not to index doesn’t mean you should leave them alone. Do the research and take your findings to the powers that be. Let them come to the same conclusion.

Running into trouble, or still not sure how you should be handling your site’s internal search URLs? Inflow’s here to help! Get in touch if this sounds like a project you’d like to explore.
