Crawlers are essential tools in the SEO and Inbound Marketing world; they’re used for a variety of important projects, from technical audits to comprehensive reviews of the content on a site. However, the complexity of crawls increases dramatically when you’re dealing with eCommerce sites, due to the sheer number of URLs, variety of page types, parameter settings and so on.

In this post, we’ll take a look at two popular crawlers, compare their relative merits and help you decide which one is best for you. We’ve dealt with each of these tools extensively, and have used them for a variety of projects.

With that in mind, let’s look at these two compelling options:

Screaming Frog: The Battle-Tested Veteran

For those of us in the SEO world, Screaming Frog has become an indispensable tool over the past several years. It’s affordable, intuitive, highly customizable and great at producing actionable crawl data. Making sense of that data, however, often takes some deeper digging.

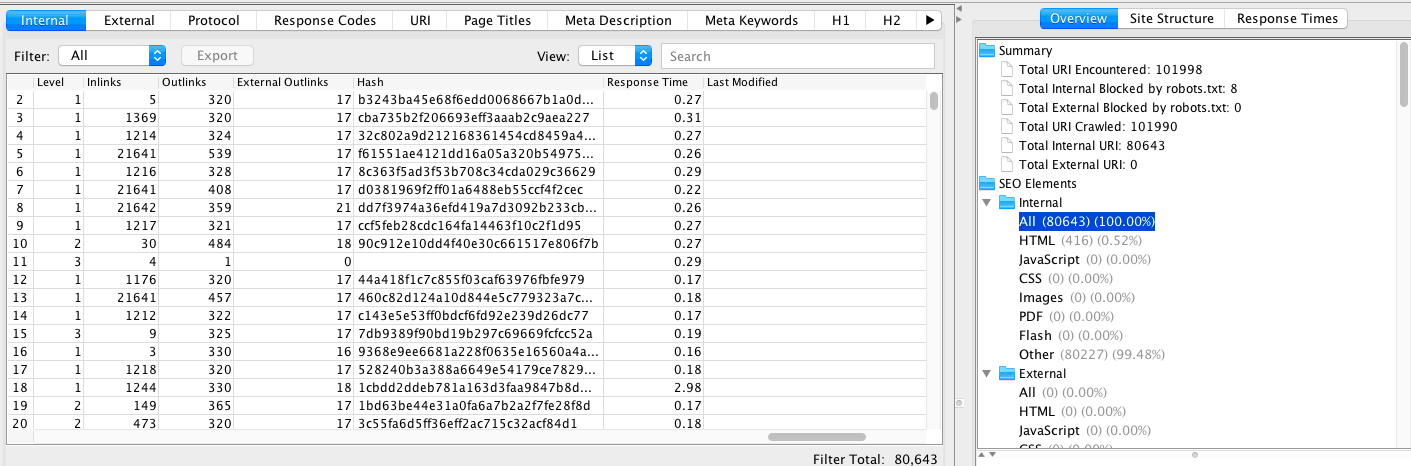

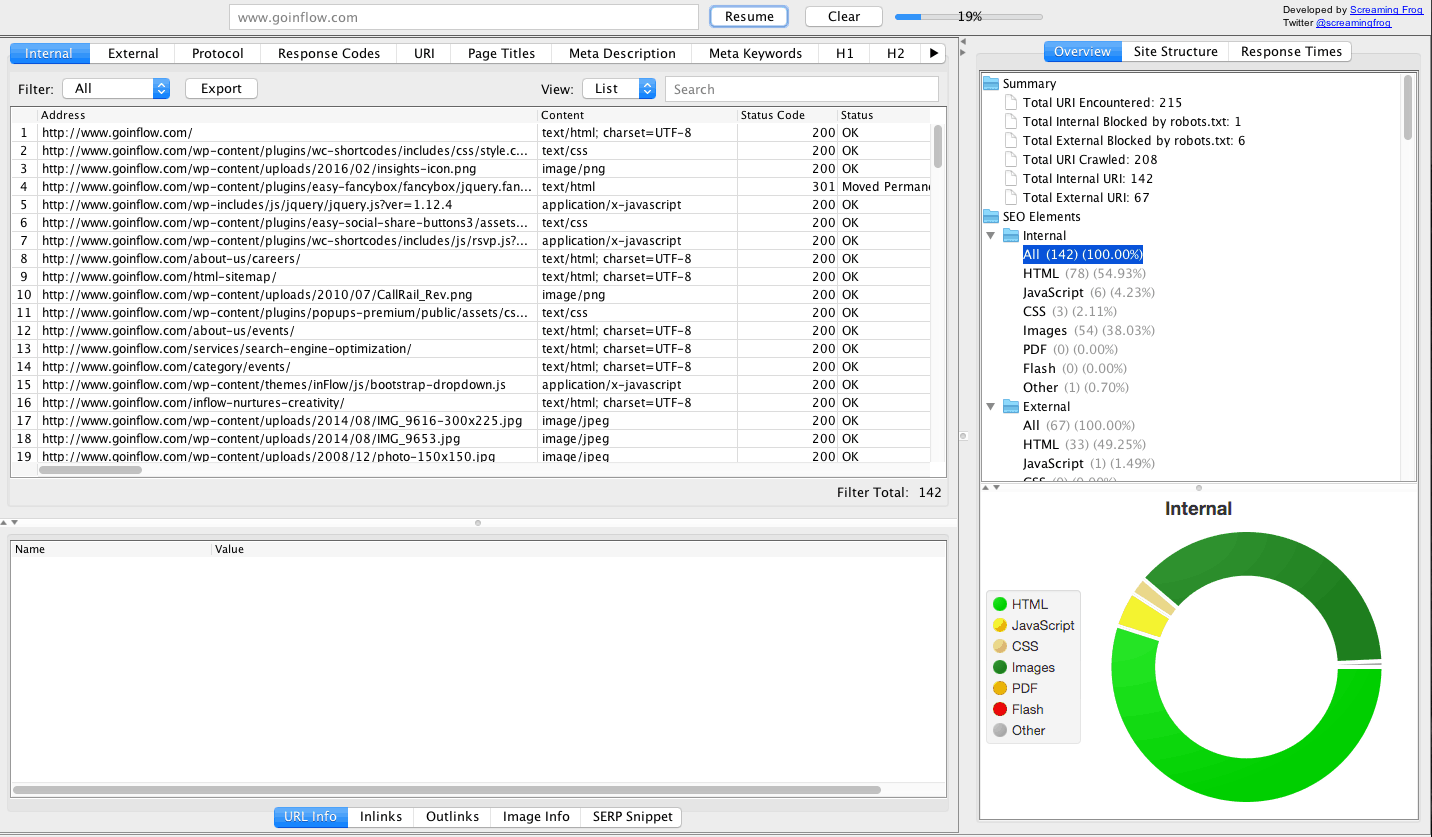

Unlike the other crawler discussed here, Screaming Frog is not an online tool; it runs on your machine and provides viewable data as it crawls and processes URLs. Simply enter a homepage URL or paste in a list of URLs, configure the settings to your specs, and let it roll.

Setup is a straightforward process. Options, such as crawling canonicals, noindexed pages or subdomains, can be turned on or off. Customizable filters make it easier to zero in on specific data, and sortable columns let you analyze the data as it’s gathered.

The tool can also be connected to Google Analytics, which is essential for combining user behavior metrics with data that’s discoverable by the crawler. This is useful for ensuring the crawl is proceeding correctly, and for quickly spotting potential issues.

Once the data collection is completed, it’s time to export to a spreadsheet and open the data in Excel. For smaller crawls, Google Sheets should work just fine. However, Sheets tends to slow down dramatically when you’re dealing with more than 10,000 rows. So, when in doubt, stick with Excel.

At this stage, you’re ready to start analyzing. It’s usually helpful to begin by filtering out any URLs you don’t need to analyze. For example, if you’re focusing specifically on indexable content, all those images, 404s and 301 redirects might not provide much insight.
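If you’d rather script this first filtering pass than click through Excel filters, here’s a minimal Python sketch. It assumes a CSV export with columns named `Address`, `Content Type`, `Status Code` and `Indexability`, modeled on a typical Screaming Frog "Internal" export; check the headers in your own export before relying on it. The sample rows are made up for illustration.

```python
import csv
import io

# Hypothetical excerpt of a crawl export. The column names are assumptions
# modeled on a Screaming Frog "Internal" export; verify them against your file.
SAMPLE_EXPORT = """Address,Content Type,Status Code,Indexability
https://example.com/,text/html; charset=UTF-8,200,Indexable
https://example.com/logo.png,image/png,200,Indexable
https://example.com/old-page,text/html; charset=UTF-8,301,Non-Indexable
https://example.com/missing,text/html; charset=UTF-8,404,Non-Indexable
https://example.com/shoes,text/html; charset=UTF-8,200,Indexable
"""

def indexable_html_rows(csv_text):
    """Keep only HTML pages that returned 200 and are marked indexable."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        row for row in reader
        if row["Content Type"].startswith("text/html")  # drops images, CSS, JS
        and row["Status Code"] == "200"                  # drops 301s and 404s
        and row["Indexability"] == "Indexable"
    ]

pages = indexable_html_rows(SAMPLE_EXPORT)
print([row["Address"] for row in pages])
# ['https://example.com/', 'https://example.com/shoes']
```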

Simple column sorting in Excel can quickly answer important questions, such as:

– Which pages have title tags or meta descriptions that are too long or too short?

– Which pages have a low word count, providing little value for search bots and users?

– Which pages have received no visits over the time period you’re analyzing?

– Are any pages erroneously noindexed?

– Which URLs are broken?
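The same questions can be turned into a simple rule-based check. The sketch below is hypothetical: the column names (`Title 1 Length`, `Meta Description 1 Length`, `Word Count`, `GA Sessions`, `Indexability Status`, `Status Code`) and every threshold are assumptions to adjust to your own export and guidelines, not values prescribed by Screaming Frog.

```python
# Hypothetical thresholds: tune these to your own guidelines.
TITLE_RANGE = (30, 60)   # characters
META_RANGE = (70, 155)   # characters
MIN_WORDS = 300

def as_int(value):
    """Treat blank cells as 0 so empty columns don't raise."""
    return int(value) if value else 0

def flag_row(row):
    """Return the list of issues found for one crawled URL (column names assumed)."""
    if row["Status Code"].startswith(("4", "5")):
        return ["broken"]  # the content checks are meaningless for error pages
    issues = []
    if not TITLE_RANGE[0] <= as_int(row["Title 1 Length"]) <= TITLE_RANGE[1]:
        issues.append("title length")
    if not META_RANGE[0] <= as_int(row["Meta Description 1 Length"]) <= META_RANGE[1]:
        issues.append("meta description length")
    if as_int(row["Word Count"]) < MIN_WORDS:
        issues.append("low word count")
    if as_int(row["GA Sessions"]) == 0:
        issues.append("no visits")
    if "noindex" in row["Indexability Status"].lower():
        issues.append("noindexed")
    return issues

rows = [
    {"Address": "https://example.com/shoes", "Status Code": "200",
     "Title 1 Length": "45", "Meta Description 1 Length": "120",
     "Word Count": "800", "GA Sessions": "12", "Indexability Status": ""},
    {"Address": "https://example.com/sale", "Status Code": "200",
     "Title 1 Length": "72", "Meta Description 1 Length": "",
     "Word Count": "150", "GA Sessions": "0", "Indexability Status": "noindex"},
    {"Address": "https://example.com/gone", "Status Code": "404",
     "Title 1 Length": "", "Meta Description 1 Length": "",
     "Word Count": "", "GA Sessions": "", "Indexability Status": ""},
]
for row in rows:
    print(row["Address"], flag_row(row))
```

Sorting or filtering on the resulting issue lists gives you roughly the same answers as the column sorts above, but in a form you can rerun after every crawl.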

Screaming Frog is as deep as you need it to be. If you’re taking a more technical approach, a variety of separate data exports help you home in on issues such as redirect chains and internal linking problems.
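Redirect chains are easy to reason about once you have each redirecting URL and its target. As an illustration (not how Screaming Frog builds its report), this sketch follows a hypothetical source-to-target mapping and reports any path with two or more hops, stopping if it runs into a loop.

```python
def redirect_chains(redirects, min_hops=2):
    """Follow each redirecting URL to its final target; keep paths with >= min_hops hops."""
    chains = {}
    for start in redirects:
        path, seen, current = [start], {start}, start
        while current in redirects:
            current = redirects[current]
            path.append(current)
            if current in seen:  # redirect loop: stop instead of cycling forever
                break
            seen.add(current)
        if len(path) - 1 >= min_hops:
            chains[start] = path
    return chains

# Hypothetical source -> target pairs taken from a crawl's 3xx responses.
redirects = {
    "http://example.com/a": "https://example.com/a",
    "https://example.com/a": "https://example.com/a/",
    "https://example.com/b": "https://example.com/c",
}
for start, path in redirect_chains(redirects).items():
    print(" -> ".join(path))
# http://example.com/a -> https://example.com/a -> https://example.com/a/
```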

Screaming Frog’s “Canonical Errors” report, for instance, shows where improperly implemented code might be sending misleading or conflicting signals to search bots, such as pages canonicalized to 404s. The report lets you see, at a glance, where potential problems are lurking.
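The "canonicalized to a 404" case boils down to a join between each page’s canonical URL and the status code of that target. Here’s a minimal sketch of the check, assuming you’ve already collected each crawled URL’s status code and canonical URL into a dictionary; the data is hypothetical, and this is not Screaming Frog’s own logic.

```python
def canonical_errors(pages):
    """pages maps URL -> (status_code, canonical_url or None)."""
    errors = []
    for url, (status, canonical) in pages.items():
        if not canonical or canonical == url:
            continue  # no canonical, or self-referencing: nothing to check
        target = pages.get(canonical)
        if target is None:
            errors.append((url, canonical, "canonical target not crawled"))
        elif target[0] != 200:
            errors.append((url, canonical, f"canonical target returns {target[0]}"))
    return errors

pages = {  # hypothetical crawl data
    "https://example.com/shoes?color=red": (200, "https://example.com/shoes"),
    "https://example.com/shoes": (200, "https://example.com/shoes"),
    "https://example.com/boots?size=9": (200, "https://example.com/boots-old"),
    "https://example.com/boots-old": (404, None),
}
for error in canonical_errors(pages):
    print(error)
```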

If you’ve recently migrated a site from HTTP to HTTPS, the “Insecure Content” report should prove especially useful, listing the HTTPS pages that still load insecure HTTP resources along with the resources themselves.
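An insecure content (mixed content) check looks for HTTPS pages that still load subresources, such as images, scripts or stylesheets, over plain HTTP. This standard-library sketch scans one page’s HTML for such references. The tag list is an assumption and not exhaustive (it ignores CSS `url()` references and `srcset`, for example), so treat it as an illustration rather than a replacement for a crawler’s report.

```python
from html.parser import HTMLParser

# Assumed, non-exhaustive map of tags to the attribute that loads a subresource.
# Plain <a href> links are excluded: linking to an HTTP page is not mixed content.
RESOURCE_ATTRS = {"img": "src", "script": "src", "iframe": "src",
                  "source": "src", "video": "src", "audio": "src", "link": "href"}

class MixedContentFinder(HTMLParser):
    """Collects http:// subresource URLs referenced by a page."""

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attr_name = RESOURCE_ATTRS.get(tag)
        if attr_name is None:
            return
        attrs_dict = dict(attrs)
        # This sketch only treats stylesheet <link>s as resources; canonical
        # and alternate links are skipped.
        if tag == "link" and "stylesheet" not in (attrs_dict.get("rel") or "").lower():
            return
        value = attrs_dict.get(attr_name)
        if value and value.lower().startswith("http://"):
            self.insecure.append(value)

def insecure_resources(page_html):
    finder = MixedContentFinder()
    finder.feed(page_html)
    finder.close()
    return finder.insecure

sample = (
    '<html><head><link rel="stylesheet" href="http://cdn.example.com/site.css">'
    '<link rel="canonical" href="http://example.com/"></head><body>'
    '<img src="http://example.com/logo.png"><img src="https://example.com/ok.png">'
    '<a href="http://other.example.com/">partner</a><script src="/js/app.js"></script>'
    '</body></html>'
)
print(insecure_resources(sample))
# ['http://cdn.example.com/site.css', 'http://example.com/logo.png']
```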

This tool does have some notable limitations. Because Screaming Frog runs locally, it can turn into a real memory hog with larger crawls (say, more than 30,000 to 50,000 URLs). One possible workaround is to crawl the site in batches, focusing on specific areas of the site, and then aggregate the data. However, this approach is clunky and time-consuming, which makes Screaming Frog a less-than-ideal choice for large eCommerce sites.
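If you do go the batch route, the aggregation step can at least be automated. This sketch merges several CSV exports (one per site section), keeps the first row seen for each URL, and unions the column headers. The `Address` key column and the sample data are assumptions.

```python
import csv
import io

def merge_batches(csv_texts, key="Address"):
    """Merge CSV exports from several partial crawls, de-duplicating on `key`."""
    fieldnames, merged = [], {}
    for text in csv_texts:
        reader = csv.DictReader(io.StringIO(text))
        for name in reader.fieldnames or []:
            if name not in fieldnames:
                fieldnames.append(name)
        for row in reader:
            merged.setdefault(row[key], row)  # first occurrence wins
    return fieldnames, list(merged.values())

def to_csv(fieldnames, rows):
    """Write merged rows back out, leaving blanks where a batch lacked a column."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=fieldnames, restval="")
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()

batch_shoes = """Address,Status Code
https://example.com/,200
https://example.com/shoes,200
"""
batch_boots = """Address,Status Code,Word Count
https://example.com/,200,350
https://example.com/boots,404,0
"""
fields, rows = merge_batches([batch_shoes, batch_boots])
print(fields)  # ['Address', 'Status Code', 'Word Count']
print(len(rows))  # 3
print(to_csv(fields, rows))
```

Keeping the first occurrence is an arbitrary choice here; if later batches are more complete, you might prefer to keep the last row instead.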

There’s also little in the way of visualization here; the data is mainly provided in spreadsheet form. So if you’re the visual type, you may prefer the next tool.

One of our favorite Screaming Frog features is the ability to render JavaScript, which makes doing SEO for sites built with JavaScript frameworks much easier. Screaming Frog also offers a separate log file analysis tool.

DeepCrawl: The Elegant Cloud-Based Upstart

The first thing you need to know about DeepCrawl is that it’s a SaaS platform that lives entirely online. This means you won’t have to worry about memory limitations or slowdowns on your own machine, even when you’re crawling hundreds of thousands of URLs. Crawl data is stored online, making it easy to access past reports, and crawls can be scheduled to run regularly, which is useful for analyzing how a site changes over time.

If you’re crawling a large eCommerce site, freedom from memory bottlenecks is a rather large feather in DeepCrawl’s cap. The tool offers a second major benefit as well: its clean, highly visual user interface makes it easy to configure crawls and zero in on potential issues.

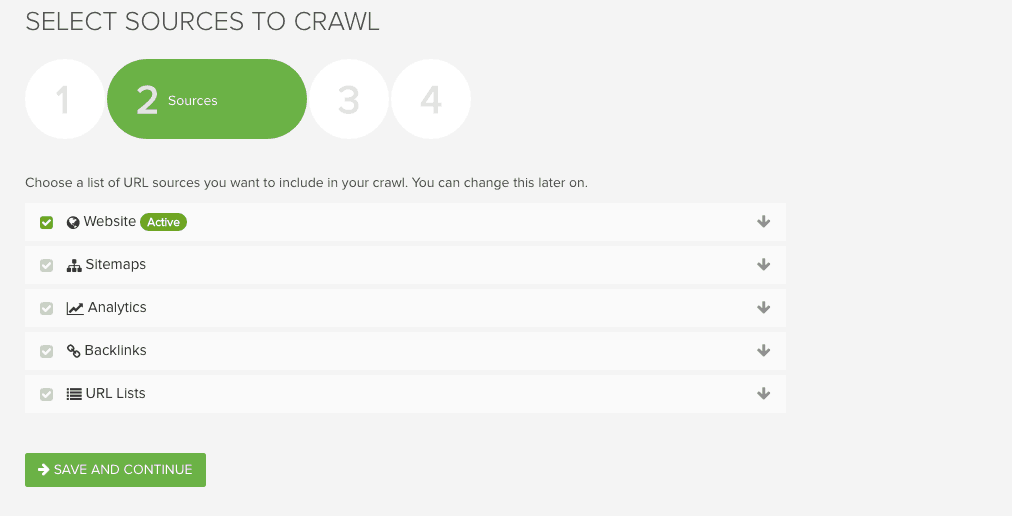

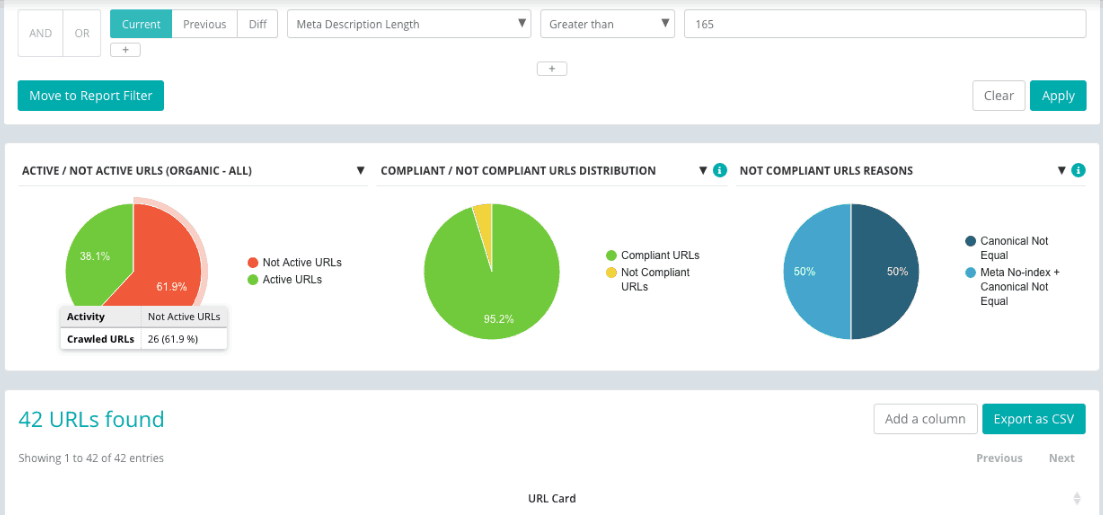

The configuration process also reveals another standout feature of DeepCrawl: the ability to include a variety of data sources.

One common issue with crawl tools is that pages can be missed. For example, an “orphaned” URL that wasn’t discoverable by a crawl tool might not be included in an analysis. Incorporating URLs from sitemaps, Google Analytics and lists of backlinks helps to create a more complete, comprehensive list of the site’s URLs.
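Conceptually, spotting orphans is a set difference: every URL you know about from other sources, minus the URLs the crawler actually reached by following links. Here’s a rough Python sketch of that idea (not DeepCrawl’s implementation). It parses a standard XML sitemap and treats the analytics and backlink URL lists as plain, hypothetical inputs you’ve already exported.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_urls(xml_text):
    """Extract <loc> values from a standard (non-index) XML sitemap."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)}

def find_orphans(crawled, *other_sources):
    """URLs present in any other source but never reached by the crawl."""
    known = set().union(*other_sources)
    return sorted(known - set(crawled))

sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/shoes</loc></url>
  <url><loc>https://example.com/clearance</loc></url>
</urlset>"""
crawled = {"https://example.com/", "https://example.com/shoes"}
analytics_landing_pages = {"https://example.com/", "https://example.com/old-promo"}  # hypothetical export
backlink_targets = {"https://example.com/shoes"}  # hypothetical export

print(find_orphans(crawled, sitemap_urls(sitemap), analytics_landing_pages, backlink_targets))
# ['https://example.com/clearance', 'https://example.com/old-promo']
```

In practice you’d normalize URLs first (trailing slashes, protocol, tracking parameters), or the same page can show up as a false orphan.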

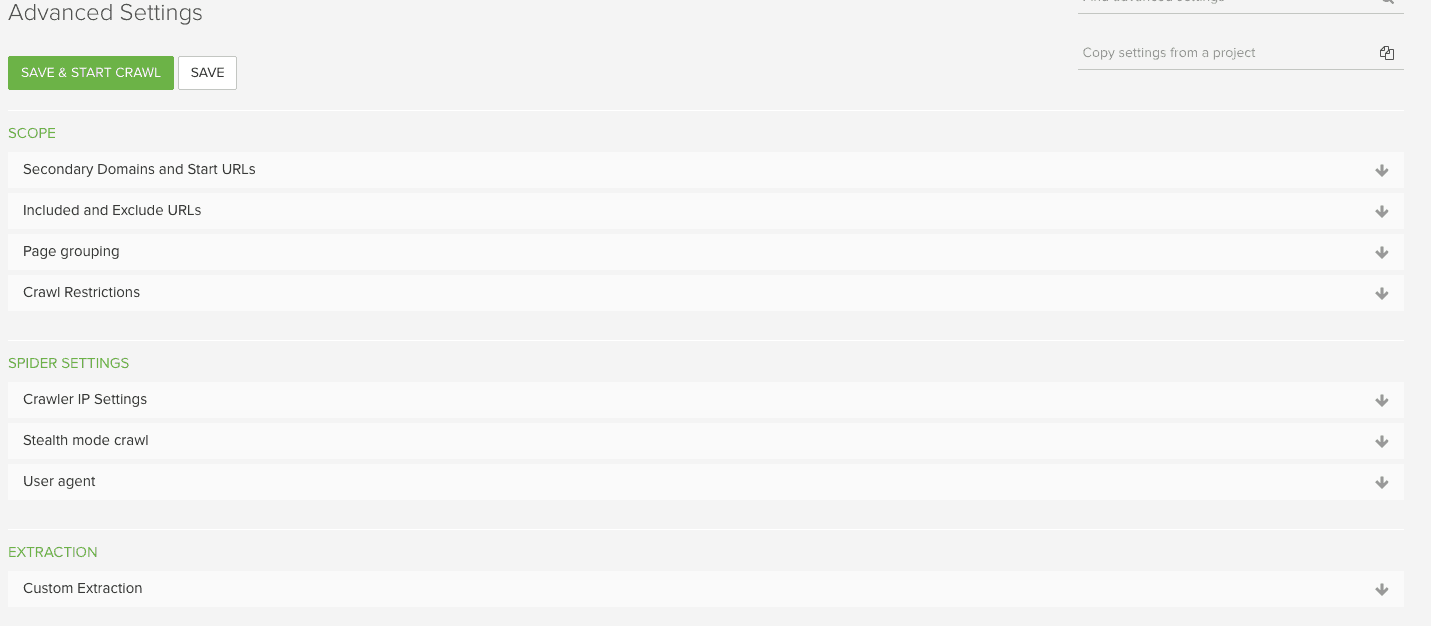

Further into the setup process, you can customize the crawl settings. These help you manage your credits (ensuring you don’t blow through your monthly URL allotment if something goes awry) and fine-tune other technical aspects of the crawl.

After the crawl completes, it’s analysis time. And it’s here that DeepCrawl really shines.

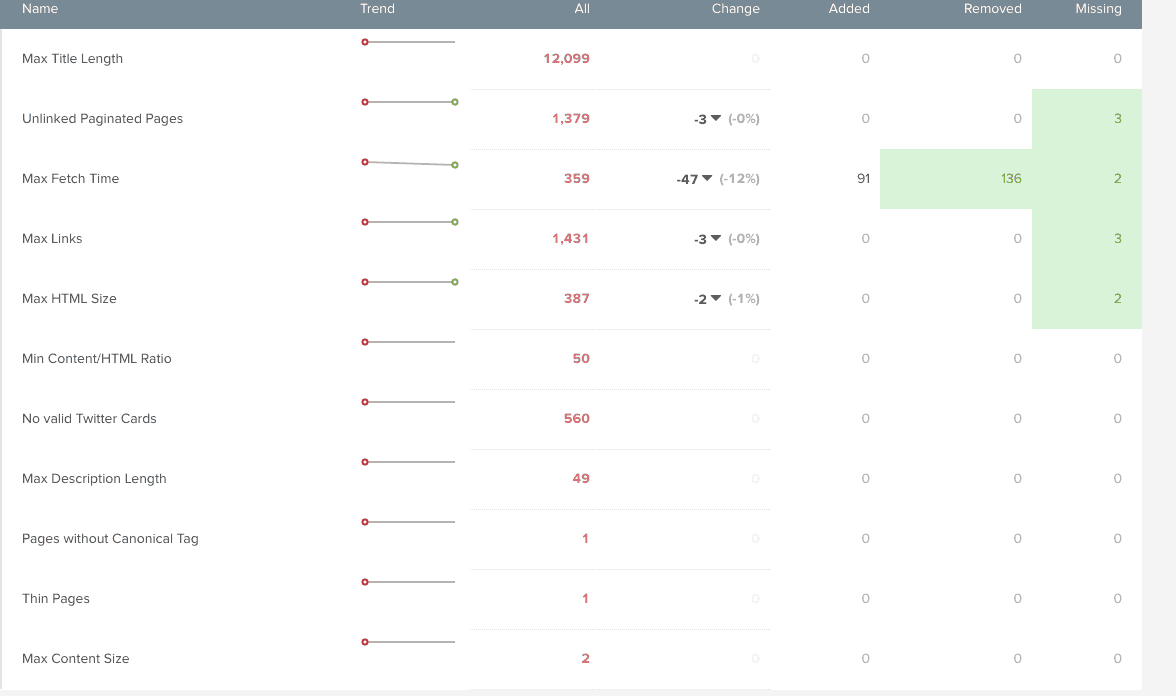

For starters, the tool provides a handy overview of its findings. This is helpful for quickly getting a sense of the site’s size, duplicate content and other foundational aspects of the site. If you’ve crawled the site before, it also tracks results and compares them with the previous report.
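Comparing two crawls comes down to diffing two snapshots of the same site. As a rough illustration of the idea (not how DeepCrawl builds its comparison), this sketch takes hypothetical URL-to-status-code maps from two crawls and lists added URLs, removed URLs and status changes.

```python
def compare_crawls(previous, current):
    """Diff two crawls given as URL -> HTTP status code mappings."""
    prev_urls, curr_urls = set(previous), set(current)
    return {
        "added": sorted(curr_urls - prev_urls),
        "removed": sorted(prev_urls - curr_urls),
        "status_changed": sorted(
            (url, previous[url], current[url])
            for url in prev_urls & curr_urls
            if previous[url] != current[url]
        ),
    }

last_month = {"https://example.com/": 200, "https://example.com/shoes": 200,
              "https://example.com/boots": 200}
this_month = {"https://example.com/": 200, "https://example.com/shoes": 404,
              "https://example.com/sandals": 200}
diff = compare_crawls(last_month, this_month)
for change_type, items in diff.items():
    print(change_type, items)
```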

The tool automatically highlights a variety of potential issues, from meta descriptions that are too long to pages with thin content. This is very helpful for seeing, at a glance, what might be holding a page back from its full organic ranking and traffic potential.

Drilling down further, clicking on a given issue will provide an exportable URL-by-URL list of where these issues exist. One added bonus is that DeepCrawl gives you the ability to assign tasks related to these issues, which is a convenient way to ensure these findings translate to real action that improves your site.

DeepCrawl is also no slouch when it comes to getting more technical. Separate features allow you to home in on pagination issues, JavaScript and CSS files disallowed by robots.txt, and redirect chains.

So what’s not to like about DeepCrawl? Well, the spreadsheet-focused analysis that suits Screaming Frog so well is a little clunkier with this tool. For starters, downloading a full URL report gives you around 130 columns of data. That’s a lot to sort through. Similarly, while DeepCrawl makes it easy to focus on a specific type of issue, it’s less intuitive when it comes to aggregating a variety of issues into one cohesive analysis.
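One way to work around that is to pivot per-issue exports into a single URL-by-URL view, so that pages suffering from several problems rise to the top. The sketch below assumes you’ve already loaded each issue export into a list of URLs; the issue names and URLs are hypothetical.

```python
from collections import defaultdict

def issues_by_url(issue_exports):
    """Pivot {issue name: [URLs]} into [(URL, [issues])], most-affected URLs first."""
    combined = defaultdict(set)
    for issue, urls in issue_exports.items():
        for url in urls:
            combined[url].add(issue)
    return sorted(
        ((url, sorted(issues)) for url, issues in combined.items()),
        key=lambda item: (-len(item[1]), item[0]),  # ties broken alphabetically
    )

exports = {  # hypothetical issue names and URLs
    "Thin content": ["https://example.com/a", "https://example.com/b"],
    "Meta description too long": ["https://example.com/a"],
    "Broken links": ["https://example.com/c"],
}
for url, issues in issues_by_url(exports):
    print(url, issues)
```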

Other options

Botify is another web-based crawler worth exploring. In many respects, it’s similar to DeepCrawl; it has an easy-to-use interface, provides a lot of graphical representations of its findings and can be scheduled for regular crawls.

The UI is clear, concise and easy to understand, and the reports it generates (especially the crawl overview) would be useful to share with clients. Because Botify takes care of the crawl and data collection for you, you can quickly spot issues if you know what you’re looking for. And, much like DeepCrawl, you can schedule regular crawls so you’re alerted to new issues.

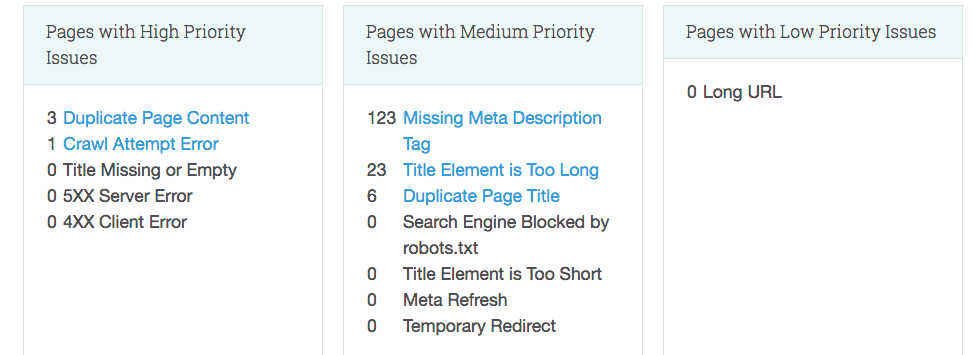

Moz Pro deserves a mention as well. Alongside other Moz benefits, a Pro account can uncover key insights, such as duplicate page titles and pages that are blocked by robots.txt.

Moz Pro pricing is scalable to your needs. Note, however, that it lacks the depth, rigor and technical detail offered by Screaming Frog and DeepCrawl.

Choose Your Weapon

So which crawler is right for you?

If you’re the type who likes to live in spreadsheets and really get down and dirty with your data, Screaming Frog should be perfectly serviceable. However, that pesky memory issue can be a real pain if you’re dealing with a larger site. There are ways to improve the crawler’s efficiency, such as installing it on a powerful server and adjusting its memory settings.

If you’d prefer a more visual process – both in terms of setting up a crawl and analyzing the results – DeepCrawl will have more appeal. The ease with which you can spot problem areas can be a real time-saver. And if you’re looking to create a thorough, comprehensive report of every page within a site, its ability to include sitemaps and other URL sources is a big win.

Screaming Frog is also the way to go if you’re on a budget. At about $130/year, it’s a no-brainer if you want to gain occasional insights into factors that could impact your site’s SEO performance. There’s also a free version that might be sufficient to provide you with some basic insights, such as finding broken links, analyzing page titles and meta descriptions, and finding duplicate pages. Meanwhile, DeepCrawl can run several hundred dollars a month, depending on your needs.

For a best-of-both-worlds approach, consider investing in both tools. Screaming Frog could be employed for smaller sites, or for more surgical crawls aimed at answering very specific questions, such as “what pages have canonical errors?” Meanwhile, DeepCrawl could serve as your go-to tool for larger sites, or for quickly creating an actionable list of potential SEO issues.
