Is it necessary to crawl an entire enterprise site?

TL;DR. Not usually.

Tell me if this sounds familiar. You’ve adjusted the settings on your crawler tool to throttle the crawl speed, respect robots.txt and robots meta tag directives, etc. You have saved the crawl and restarted it, rebooted your machine, verified with IT that your user-agent isn’t being throttled, performed something similar to a rain-dance to please the crawl gods, used a proxy, done a shot to calm the nerves…

And you just can’t get the &*d D#m! thing to finish crawling the site so you can start your audit!

So you begin to ask yourself, “Do I really need to crawl the entire site, or do I have the data I need already?”

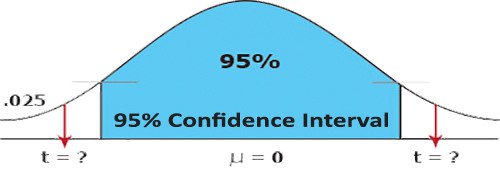

In statistics, there is the idea of reaching a level of “statistical confidence”: after a certain point, you aren’t gaining very much additional certainty by increasing the sample size (e.g. polling more people). The same is true when it comes to major technical issues on a website, such as indexable internal search results and account access pages, or endless faceted navigation URLs.
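To put rough numbers on that idea, here is a minimal sketch using the textbook margin-of-error formula for a proportion at 95 percent confidence. It assumes a simple random sample, which a crawl is not (crawlers follow links in order), so treat it as an illustration of diminishing returns rather than a rule for crawls. The function name and the sample sizes are made up for the example.

```python
import math

def margin_of_error(sample_size, proportion=0.5, z=1.96):
    """Approximate 95% margin of error for a proportion.

    Assumes a simple random sample; proportion=0.5 is the worst case.
    """
    return z * math.sqrt(proportion * (1 - proportion) / sample_size)

# Each tenfold increase in sample size shrinks the margin by only ~3.2x.
for n in (100, 1_000, 10_000, 100_000):
    points = margin_of_error(n) * 100
    print(f"{n:>7} sampled: +/- {points:.2f} percentage points")
```

Going from 100 to 1,000 samples buys a lot of certainty; going from 10,000 to 100,000 buys very little.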

Even if the problem isn’t technical but one of scale, at a certain point, the law of diminishing returns comes into play. For example, let’s use a site with millions of unique product pages. It is likely that not all of them should be indexed, in which case the URL patterns that shouldn’t be indexed will show up within the first thousand pages crawled. Outliers can be caught in round two, once you’ve fixed the 1 percent of issues resulting in 80 percent of the superfluous URLs – or whatever the ratio happens to be.
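A quick back-of-the-envelope check on the “first thousand pages” claim: if a problem pattern accounts for some share of all URLs, how likely is it to appear at least once in the first N URLs crawled? The sketch below assumes each crawled URL is an independent random draw, which real crawls only approximate, and the shares used are hypothetical.

```python
def chance_pattern_seen(share_of_urls, urls_crawled):
    """Probability that a pattern making up `share_of_urls` of the site
    shows up at least once in `urls_crawled` independent random draws."""
    return 1 - (1 - share_of_urls) ** urls_crawled

# Hypothetical shares: a pattern behind 1% of URLs vs. one behind 0.1%.
for share in (0.01, 0.001):
    p = chance_pattern_seen(share, 1_000)
    print(f"share {share:.1%}: seen within 1,000 URLs with probability {p:.4f}")
```

Under those assumptions, anything big enough to matter is almost certain to surface early, while the rare patterns that slip through are the outliers left for round two.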

Assuming the site really does have millions of unique product pages that should be indexed (again, that’s doubtful), you still wouldn’t need to crawl every one of them. The top product pages tend to be linked to the most often and sit higher up in sequence on category pages. This means they are among the first product pages to be crawled. After a certain point, every new product page URL being picked up is going to be about 99 percent likely to have no external links, no sales or traffic from organic search, no rankings to speak of, no social shares, etc. You don’t need to look at each of them individually. You just need to fix the problem that is causing them in the first place, which should be easy to identify after a certain point.

So What Does the Process Look Like?

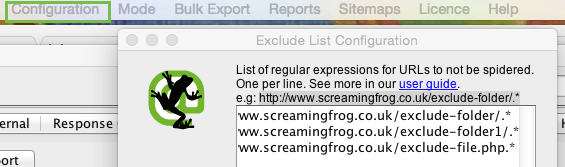

Diagnose the Big Problem (URL Bloat): When you find yourself waiting too long for a Screaming Frog crawl to finish, pause the crawl, save it and dig around the different Screaming Frog tabs looking for big issues that can be addressed with simple code changes, like the addition of a robots noindex meta tag.
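If you would rather not eyeball the tabs, one way to spot bloat is to export the URLs crawled so far and count them by pattern. The following is only a sketch: it assumes you already have the URLs as a plain list, the example.com URLs are invented, and reducing a URL to “first path segment plus query parameter names” is just one possible heuristic.

```python
from collections import Counter
from urllib.parse import urlsplit, parse_qsl

def url_pattern(url):
    """Reduce a URL to its first path segment plus sorted query parameter names."""
    parts = urlsplit(url)
    segments = [s for s in parts.path.split("/") if s]
    first = "/" + segments[0] if segments else "/"
    names = sorted({key for key, _ in parse_qsl(parts.query, keep_blank_values=True)})
    return first + ("?" + "&".join(names) if names else "")

def summarize(urls, top=10):
    """Return the most common URL patterns with their counts."""
    return Counter(url_pattern(u) for u in urls).most_common(top)

# Hypothetical sample of crawled URLs.
urls = [
    "https://www.example.com/search?q=shoes",
    "https://www.example.com/search?q=boots&page=2",
    "https://www.example.com/shoes/?color=red&size=9",
    "https://www.example.com/shoes/?size=10&color=blue",
    "https://www.example.com/shoes/running-shoe-123",
    "https://www.example.com/account/login",
]

for pattern, count in summarize(urls):
    print(f"{count:>5}  {pattern}")
```

On a real crawl, the patterns at the top of that list with suspicious parameter names (search queries, facets, session IDs) are usually the ones worth a recommendation.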

Record and Recommend: Record those issues and your recommendations for fixing them somewhere. It could be Evernote, email, a text file or a deliverable document. Then go into Configuration > Exclude and write the expressions needed to keep those pages from being crawled. In other words, assume, for the sake of saving time, that the issue will be fixed once your recommendations are implemented, and therefore you have no reason to crawl the rest of those pages.
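Before pasting expressions into the exclude list, it can help to test them against a few known URLs. The sketch below does that with Python’s `re` module. The patterns and URLs are hypothetical, and Python’s regex flavor may differ from your crawler’s, so check the tool’s documentation for whether it matches the whole URL or only part of it. The patterns here are wrapped in `.*` so they behave the same either way.

```python
import re

# Hypothetical exclude expressions for internal search, account pages and facets.
exclude_patterns = [
    r".*/search\?.*",
    r".*/account/.*",
    r".*[?&](color|size)=.*",
]
compiled = [re.compile(p) for p in exclude_patterns]

def is_excluded(url):
    """True if any exclude expression matches the entire URL."""
    return any(rx.fullmatch(url) for rx in compiled)

# Hypothetical URLs: the first three should be excluded, the last one kept.
samples = [
    "https://www.example.com/search?q=shoes",
    "https://www.example.com/account/login",
    "https://www.example.com/shoes/?color=red&size=9",
    "https://www.example.com/shoes/running-shoe-123",
]

for url in samples:
    print("EXCLUDE" if is_excluded(url) else "crawl  ", url)
```

Including at least one URL you expect to keep is the important part, since an overly greedy expression can quietly exclude the pages you actually need to audit.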

Recrawl: Either start from scratch or continue on with your crawl, now limited to the URLs you actually need to analyze.
