Increasing the speed of your website can improve search engine rankings, attract more traffic, lower bounce rates and lift conversion rates.

However, making an eCommerce website faster is easier said than done. Dynamic areas like Cart totals in the upper-right-hand corner can present caching problems. Many third-party JavaScripts are used, most of which are considered render-blocking resources that eCommerce SEOs have little or no control over. Often speed is limited by the eCommerce platform itself, especially when using a hosted solution.

The more that SEOs understand the fundamentals of site speed, the more they’ll be able to win over developers, designers, and SaaS vendors in order to implement real solutions like the ones described below. So how do you improve page speed?

First Things First

When it comes to increasing page speed, the order of importance is:

- Optimize the critical rendering path

- Enable compression for images

- Implement conditional loading techniques

Back in 2010, Google announced that speed was a ranking factor in its algorithm. In addition, many have found that, regardless of search engine rankings, users are increasingly demanding of website load times with incrementally larger abandonment rates for slower websites. Furthermore, Google moving to a mobile-first index is bound to put more emphasis on page load times. Speed on both desktop and mobile devices is becoming ever more important. Below are several speed issues and recommended solutions to consider.

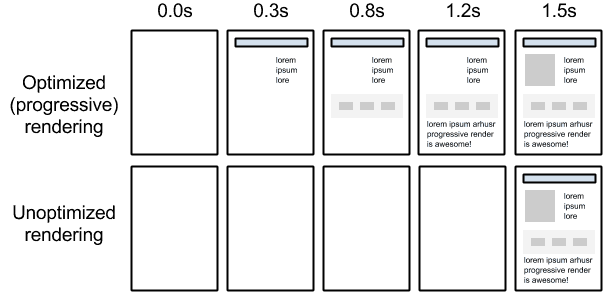

The Critical Rendering Path and Impact on Load Time

Critical rendering path (CRP) optimization dictates when and how elements of a web page are loaded. According to Google:

“Optimizing for performance is all about understanding what happens in these intermediate steps between receiving the HTML, CSS, and JavaScript bytes and the required processing to turn them into rendered pixels – that’s the critical rendering path.”

Recognizing how browsers function and being smart about the sequence in which elements are fetched, loaded and rendered can lead to massive increases in site speed without changing much from a content perspective. Using waterfall charts like the one available in Chrome DevTools will help you determine what to load, and when:

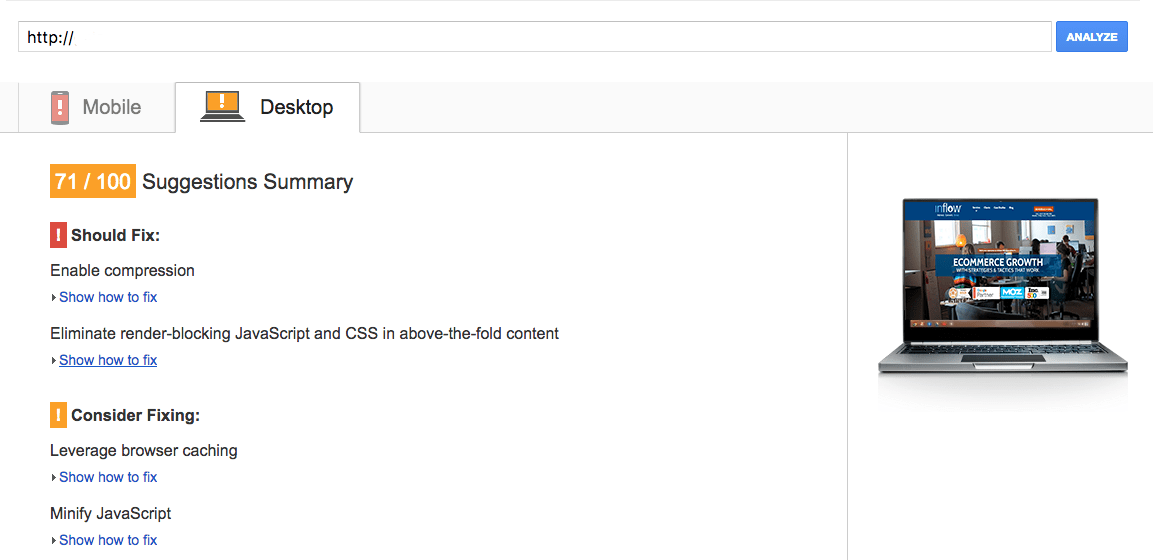

We most frequently come across this when assessing page speed with Google’s PageSpeed Insights tool, where the analysis of a page will return recommendations such as the following:

Most of these fixes deal with optimizing the CRP of a website. These are discussed in more detail below.

Rel: Prerender, Prefetch, Preconnect and Preresolve

Read a fantastic summary of “the Pre Party” for SEOs by Mike King here. Essentially, the concept is that if you know how “most” users tend to use and navigate the site after landing on key pages, you can instruct the browser to prepare for those actions using these tags.
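As a rough illustration of what these tags look like, the sketch below builds the `<link>` elements for a page head. It assumes that "Preresolve" corresponds to the standard `dns-prefetch` value, and the URLs and the idea that category-page visitors usually open a best-selling product next are hypothetical placeholders, not data from a real site.

```typescript
// Resource hint types. "dns-prefetch" is assumed here to be the standard
// rel value behind what the heading calls "Preresolve".
type HintRel = "dns-prefetch" | "preconnect" | "prefetch" | "prerender";

interface ResourceHint {
  rel: HintRel;
  href: string;
}

// Build one <link> tag per hint, ready to be placed in the page <head>.
// Assumes the href values are trusted and need no escaping.
function renderResourceHints(hints: ResourceHint[]): string {
  return hints
    .map((hint) => `<link rel="${hint.rel}" href="${hint.href}">`)
    .join("\n");
}

// Hypothetical example: visitors to a category page usually open a product
// next, and product images come from a separate CDN host.
const categoryPageHints: ResourceHint[] = [
  { rel: "dns-prefetch", href: "https://cdn.example.com" },
  { rel: "preconnect", href: "https://cdn.example.com" },
  { rel: "prefetch", href: "/products/best-seller.html" },
];

console.log(renderResourceHints(categoryPageHints));
```

Which hints to emit should come from analytics on how users actually navigate, since every hint costs the browser some work up front.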

Minify, Compress, Cache

These three techniques are relatively straightforward:

- Minify: You can start by making code as clean as possible by eliminating any superfluous lines. There are many tools to assist in minifying JavaScript, which can reduce the size of JS files or inline JS by up to 80 percent. There are similar tools for minifying CSS. Minifier.org does both.

- Compress: Make the file sizes that are being fetched from the server as small as possible. Minification of code helps, but you can see major improvements by resizing and compressing images. If you’re not using Gzip compression yet, this guide on Varvy has setup instructions for various types of web servers. More info on image compression can be found below.

- Cache: Allow a browser to remember previously loaded elements of a page when a user has visited in the past, so they don’t have to be reloaded from the server. It is important to return the right HTTP response headers to instruct the user agent on how to cache each resource. For most static resources, the longer the “max-age” is set in the “Cache-Control” header the better. A good CDN will handle this well.
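To make the caching point concrete, here is a minimal sketch of how a “Cache-Control” value might be assembled. The `CachePolicy` shape and the one-year lifetime are illustrative assumptions; the right values depend on how often each resource changes, and dynamic areas such as cart totals generally should not be cached for long.

```typescript
// Hypothetical policy shape used only for this illustration.
interface CachePolicy {
  maxAgeSeconds: number; // how long the browser may reuse the response
  isPublic: boolean;     // true if shared caches (e.g. a CDN) may store it
}

// Build the value of the Cache-Control response header.
function cacheControlHeader(policy: CachePolicy): string {
  const scope = policy.isPublic ? "public" : "private";
  const maxAge = Math.max(0, Math.floor(policy.maxAgeSeconds));
  return `${scope}, max-age=${maxAge}`;
}

const ONE_YEAR_SECONDS = 60 * 60 * 24 * 365;

// Static assets (images, CSS, JS) can usually be cached for a long time.
console.log(cacheControlHeader({ maxAgeSeconds: ONE_YEAR_SECONDS, isPublic: true }));
// prints "public, max-age=31536000"

// A per-user cart fragment should not be reused from cache.
console.log(cacheControlHeader({ maxAgeSeconds: 0, isPublic: false }));
// prints "private, max-age=0"
```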

Minimize Use of Render/Parser Blocking Resources

It’s useful to first think of different parts of website code as having different functions. Google explains:

“Before the browser can render the page it needs to construct the DOM and CSSOM trees. As a result, we need to ensure that we deliver both the HTML and CSS to the browser as quickly as possible.”

When a browser lands on most pages, the first file it is told to load is the HTML file – as it is scanning down the page, it is building the Document Object Model (DOM – the content and basic structural elements) in the background based off this HTML code.

But the DOM can’t be rendered onto a viewable page if there are manipulations in the stylistic elements of the page using CSS or JavaScript – if the page did render before these elements were loaded, users would briefly see an ugly page before the browser would hit the CSS and JS files and the page would quickly flash up the new version to display the same content in a more design-focused way.

Therefore, as the browser is building the DOM, again using mostly the HTML code, it holds off on rendering whenever it hits a CSS file or a synchronous JS file. A synchronous script pauses DOM construction until it has been downloaded and executed, and rendering waits while the CSS Object Model (CSSOM, which applies new stylistic elements to the content found in the DOM) is constructed. Once both are complete the page will be rendered.

This is where render blocking resources stem from – CSS and JS files that need to be in place for the page to render correctly, but are either not necessary to be in place before the DOM is finished being constructed, or are coded in a way that does not allow the DOM to continue construction while the CSSOM is being built.

There are generally two ways to deal with render blocking CSS:

- Inline CSS code: Putting the CSS code directly into an HTML file instead of forcing the browser to load an external CSS file from the server. Generally speaking though, you want to avoid in-line CSS because it makes changing the look of a site more difficult. Read more about the pros and cons here.

- Unblock render-blocking CSS files using media queries: Adding a media attribute to a CSS file’s link line in the HTML alerts the browser to the purpose of that CSS file. For example, using a print media query with a CSS file tells the browser that the file is only needed when the user is attempting to print a page. This means that rendering will not be blocked by that CSS file when a user is doing anything other than printing, and the page-loading process will not be paused.
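The media query approach can be sketched as a small helper that emits the stylesheet link lines. The file names and the 1024px breakpoint are hypothetical; the point is only that a `media` attribute that does not match the current device lets the browser render without waiting for that file.

```typescript
// Build a stylesheet <link> tag, optionally scoped by a media query.
// Without a media attribute the stylesheet blocks rendering everywhere.
function stylesheetLink(href: string, media?: string): string {
  const mediaAttr = media ? ` media="${media}"` : "";
  return `<link rel="stylesheet" href="${href}"${mediaAttr}>`;
}

const headStyles = [
  stylesheetLink("/css/main.css"),                        // needed for first render
  stylesheetLink("/css/print.css", "print"),              // only relevant when printing
  stylesheetLink("/css/wide.css", "(min-width: 1024px)"), // only blocks on wide screens
].join("\n");

console.log(headStyles);
```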

There are three common ways to deal with render-blocking JavaScript, all of which should be used at various times depending on the situation:

- Inline JavaScript: In general, this has many of the same issues as inline CSS.

- Use the onload technique: Using an onload function tells the browser that a specific piece of JavaScript will not change the design or functionality of a page, and does not need to be loaded until after the page is rendered for a user. An example of this would be JS-based analytics tags.

- Add the async attribute to a JavaScript tag: The async attribute tells the browser that it is OK for the DOM to continue with construction while the piece of JavaScript downloads in parallel. The script runs as soon as it has finished downloading: if that happens before DOM construction finishes, parsing pauses briefly while the script executes; if DOM construction finishes first, the page renders without waiting for the script.
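The last two options can be sketched as follows. `scriptTag` simply emits a script tag with or without the async attribute, and `loadAfterRender` illustrates the onload technique. The `PageLike` interface is a hypothetical stand-in for the browser's `window` and `document` so the sketch can run outside a browser; the comments note the real calls it is assumed to wrap.

```typescript
// Emit a script tag; with async the download no longer blocks DOM construction.
function scriptTag(src: string, isAsync: boolean): string {
  return `<script src="${src}"${isAsync ? " async" : ""}></script>`;
}

// Hypothetical minimal view of the page, so this runs outside a browser.
interface ScriptLike {
  src: string;
  async: boolean;
}
interface PageLike {
  onLoad(callback: () => void): void; // e.g. window.addEventListener("load", callback)
  createScript(): ScriptLike;         // e.g. document.createElement("script")
  append(script: ScriptLike): void;   // e.g. document.body.appendChild(script)
}

// Onload technique: only request the script once the page has finished loading.
function loadAfterRender(page: PageLike, src: string): void {
  page.onLoad(() => {
    const script = page.createScript();
    script.src = src;
    script.async = true;
    page.append(script);
  });
}

// Demonstration with a fake page that records what would be appended.
const loadCallbacks: Array<() => void> = [];
const appendedScripts: string[] = [];
const fakePage: PageLike = {
  onLoad: (callback) => { loadCallbacks.push(callback); },
  createScript: () => ({ src: "", async: false }),
  append: (script) => { appendedScripts.push(script.src); },
};

loadAfterRender(fakePage, "/js/analytics.js"); // nothing is requested yet
loadCallbacks.forEach((callback) => callback()); // simulate the load event
console.log(scriptTag("/js/reviews-widget.js", true));
console.log(appendedScripts.join(", "));
```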

Are there any risks or drawbacks to implementing CRP optimization?

Really very few. It makes the construction of a website slightly more complex as developers need to evaluate which files are important enough to pause DOM construction for.

And how important is it?

It is a high priority when you are looking to improve page speed. CRP optimization should be considered for all websites, as it is vital for increasing page speed, and it should be standard practice for any development team. Check in with your development team to make sure everyone is on the same page regarding CRP.

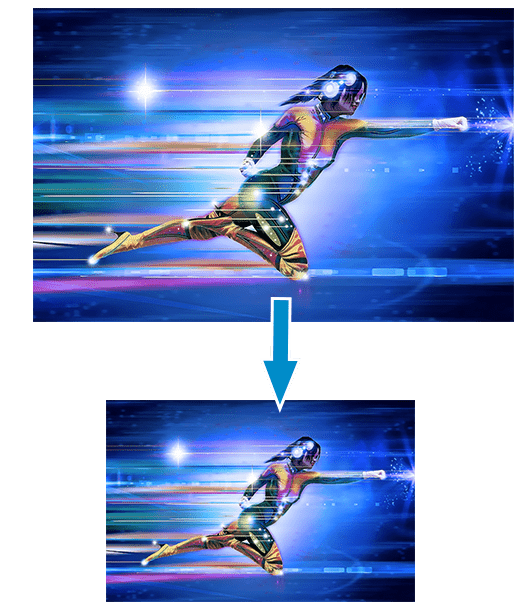

More on Image Compression

According to research done by HTTP Archive, image files account for 60-65 percent of all bytes loaded on most web pages, leading to larger pages and slower load times. This becomes especially noticeable on mobile devices.

There are numerous ways to reduce image file size. Two of them are covered below: Kraken.io and WebP.

Kraken.io

Kraken is a third-party platform that specializes in reducing image size while retaining quality.

Traditionally, reducing image file sizes has led to a significant amount of quality degradation. However, Kraken has gone to great pains to develop an easy-to-use, one-stop solution to compress images without sacrificing much, if any, image quality.

Kraken.io compression mainly relies on lossy technology, though lossless compression is also an option. Often viewed as inferior to lossless compression by image purists (as lossless only strips out unnecessary information without touching the image itself), lossy compression replaces some pixel information with alternatives that are less burdensome to load, for example by replacing colors with similar ones.

In the past, lossy compression has had a noticeable impact on image quality. However, Kraken seems to have figured out the sweet spot where file compressions are huge and image quality issues are few and far between.

To use Kraken.io, an account must be created and paid for, the API must be plugged in (including all of the associated steps to enable this), and images need to be sent to Kraken for optimization. If using their hosting, the images will be automatically uploaded and then be pulled from Kraken.io servers whenever a page is loaded.

There are some great pros of Kraken.io:

- Image optimization is done by Kraken.io; all that needs to be done is to upload images to Kraken.io servers

- External storage on cloud-based servers to host images – reduces bandwidth and server stress

- API that easily plugs into most website suites and offers numerous different options, such as callbacks, bulk image optimization, etc.

- WordPress and Magento plugins, if your website is on one of these platforms

- The option to preserve original metadata from the image

- WebP support by setting a “webp”: true flag in your JSON

- Automatic image orientation for numerous device types
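As a rough sketch of the JSON mentioned in the list above, the helper below assembles a request body with the `webp` flag set. Only the `"webp": true` flag comes from this article; the other field names (`auth`, `api_key`, `api_secret`, `url`, `wait`, `lossy`) are assumptions about Kraken.io's API that should be checked against its documentation, and the credentials and image URL are placeholders.

```typescript
// Assumed request shape; verify every field except "webp" against
// Kraken.io's own API documentation before relying on it.
interface KrakenUrlRequest {
  auth: { api_key: string; api_secret: string };
  url: string;    // publicly reachable image to optimize
  wait: boolean;  // assumed: ask for the result in the same response
  lossy: boolean; // assumed: enable lossy compression
  webp: boolean;  // from the article: request WebP output
}

function buildKrakenRequest(
  imageUrl: string,
  apiKey: string,
  apiSecret: string,
  asWebp: boolean,
): KrakenUrlRequest {
  return {
    auth: { api_key: apiKey, api_secret: apiSecret },
    url: imageUrl,
    wait: true,
    lossy: true,
    webp: asWebp,
  };
}

// Placeholder credentials and URL, for illustration only.
const krakenRequestBody = JSON.stringify(
  buildKrakenRequest("https://shop.example.com/img/hero.jpg", "YOUR_KEY", "YOUR_SECRET", true),
  null,
  2,
);
console.log(krakenRequestBody);
```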

There are a few risks associated with Kraken.io:

- Instead of a monthly fee or price-per-image billing structure, pricing is based on data processed on a monthly basis

- As with any image compression, there will be some loss in quality, though it will rarely, if ever, be noticeable

- When hosting images on Kraken’s servers, speed will slow down slightly, as the browser has to leave the website to fetch the image. The decrease in server stress, decrease in image size and ease of use will probably outweigh the slight decrease in performance, but it is something to keep in mind

WebP

WebP was introduced by Google in 2010 and has been fine-tuned over the last six years. Google claims that WebP image files are about 30 percent smaller than their JPEG and PNG equivalents without sacrificing quality. The format combines lossless and lossy techniques, supports animation as well as still images, and can retain the original metadata.

WebP strips out unnecessary data with lossless compression and also segments an image into blocks and substitutes colors to reduce the overall size of an image, similar to any other standard lossy compression. It also caches colors as it scans an image, so it does not have to reload specific colors for each pixel as it loads the image.

WebP appears to be a good compression option, especially for Google, but it comes with one large drawback.

WebP is not an industry standard and is not supported by all browsers. It is only supported in Chrome, Opera and Android, so another compression solution should be used in conjunction with WebP.

Because of this, we recommend using Kraken.io. (Note: There are other options out there not discussed here.)
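Whichever compressor produces the files, one common way to serve WebP alongside a fallback is the HTML `<picture>` element, which this article does not discuss. The sketch below shows that pattern under the assumption that both versions of the image already exist; the file paths are hypothetical.

```typescript
// Build a <picture> element: browsers that understand WebP use the <source>,
// all others fall back to the <img>. Assumes inputs need no HTML escaping.
function pictureWithWebpFallback(webpSrc: string, fallbackSrc: string, alt: string): string {
  return [
    "<picture>",
    `  <source srcset="${webpSrc}" type="image/webp">`,
    `  <img src="${fallbackSrc}" alt="${alt}">`,
    "</picture>",
  ].join("\n");
}

console.log(
  pictureWithWebpFallback("/img/red-shoe.webp", "/img/red-shoe.jpg", "Red running shoe"),
);
```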

How Important is this?

We’d dub it medium-high priority. As explained above, images are often the most burdensome files associated with website load times. The more they can be compressed, without losing quality, the faster the site will load and the better the user experience will be.

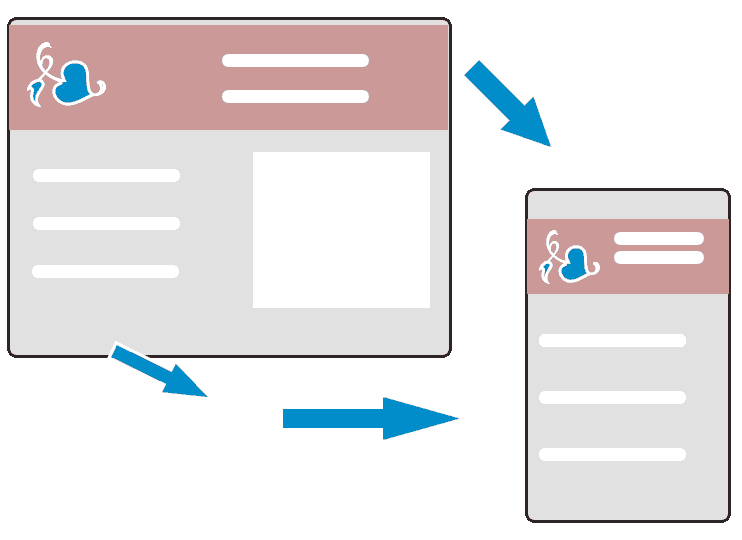

Conditional Loading

Conditional loading stems from the evolution of mobile-first theory associated with responsive design.

Initially, a responsive design meant that developers and designers would take the desktop version of their site and squeeze it down to the size of a tablet. The mobile-first approach suggests that it is easier to scale up, rather than down, and so websites should be built with the most necessary building blocks in mind to deliver on the smallest screen and then extras can be added as the screen becomes larger.

This is where conditional loading plays a role. If a website built for a mobile device were simply scaled up to fit on a desktop, the opposite of the original responsive design problem would occur: instead of too much being squeezed onto a tiny screen, there would be too little to fill a huge screen.

Conditional loading attempts to solve this problem by introducing new elements to a webpage if there is room enough to do so on the screen. This allows for desktop versions of a page to become much more interactive and offer more information while retaining the core information for mobile users.

Conditional loading for CSS and HTML elements is most often done with media queries, described above in the CRP section. With a media query, images are told to load only if the viewport is above a certain size, is in a certain orientation, etc.

This doesn’t just apply to visual aspects of the page. It can also be used for unseen resources that are unnecessary for mobile users but helpful to have when desktop users visit the page. This could include AdSense tracking code, social information, etc.

This can be done for JavaScript using Window.matchMedia() within the normal JavaScript file in order to create rules about what JS to load based on the size of the screen. This allows the website to remain Google compliant by serving the same JavaScript for all users regardless of device.
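A minimal sketch of such rules is shown below. The same file is served to everyone, and the rules decide at run time which extra scripts to request. The `MatchMedia` type is a small stand-in for the browser's `window.matchMedia` so the example can run outside a browser; the breakpoints and script paths are hypothetical.

```typescript
// Stand-in for window.matchMedia: takes a media query, reports whether it matches.
interface MediaQueryResult {
  matches: boolean;
}
type MatchMedia = (query: string) => MediaQueryResult;

interface ConditionalResource {
  query: string; // media query that must match
  src: string;   // script to load when it does
}

// Return the scripts whose media query matches the current device.
function resourcesToLoad(matchMedia: MatchMedia, resources: ConditionalResource[]): string[] {
  return resources
    .filter((resource) => matchMedia(resource.query).matches)
    .map((resource) => resource.src);
}

// Hypothetical rules: social widgets only on wide screens, a compact menu on small ones.
const conditionalScripts: ConditionalResource[] = [
  { query: "(min-width: 1024px)", src: "/js/social-widgets.js" },
  { query: "(max-width: 600px)", src: "/js/mobile-menu.js" },
];

// In a browser this would be: resourcesToLoad((q) => window.matchMedia(q), conditionalScripts)
// Here a fake desktop device is simulated instead.
const fakeDesktop: MatchMedia = (query) => ({ matches: query === "(min-width: 1024px)" });
console.log(resourcesToLoad(fakeDesktop, conditionalScripts).join(", "));
```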

Adding conditional loading to a responsive website that has been constructed using traditional desktop-down theory can be done, but truly implementing this will require a shift in mentality and increased stress on development resources.

Additionally, the Window.matchMedia() functionality does not work in IE 9 and lower.

Read how we handle mobile at Inflow using responsive web design, adaptive JS logic and dynamic serving.

How important is this?

It’s a medium priority. Conditional loading will reduce load times, which the entire site will benefit from. But without a conscious change in attitudes about mobile, this is more of a nice-to-have.

The Overall Expected Impact on Your Site Speed

Since as far back as 2010, Google has claimed that speed is a factor in their ranking algorithm for desktop searches. It has recently been discovered that, for the first time, speed will boost rankings in the mobile index, rather than just harming them for slow sites.

Moz, along with many others, has repeatedly found a correlation between site speed and rankings.

iCrossing claims that consumers have increasingly high web performance expectations as expected load time was cut by half, from four to two seconds, in only three years.

Amazon has stated, “One second of latency was negatively affecting revenue by approximately 10 percent.”

Given all this, and depending on current site speed, a website could most likely benefit from implementing any, or all, of the above.
