So all of a sudden you’ve got a site that’s suffered an unexpected drop in the rankings and your organic traffic is in the tank. What do you do?

Whether you manage clients or work in-house, it is likely there are people who can access your sites and make changes without checking with you first. These people often don’t think about SEO when they are making changes, and when something goes wrong, it’s helpful to have a checklist that starts with the most obvious things.

So, with that in mind, here’s a checklist that can save you time and headaches in the future.

Step 1: Check the Obvious

There is a reason calling tech support means running through the usual list of items before directing it to someone who knows more about the problem. Sometimes it’s just not plugged in.

Before spending hours diving into technical SEO issues and wondering if a sketchy link got you bitten by Penguin, check the obvious.

The other day, I had a client ask me to look into why traffic had dropped, and my first thought was to investigate the link profile. Then I remembered the checklist below. By starting there, I noticed the noindex, nofollow tag implemented on a sitewide basis. Whoops! Finding that saved me from wasting time looking into other potential causes.

The Obvious Checklist

- Check robots.txt file

Is the whole site or major sections of the site blocked?

- Check for noindex meta tag

View source code for the homepage and a couple of main pages to see if the noindex tag has been applied sitewide.

- Check for noindex via X-Robots-Tag in the HTTP header

- Check canonical tags

See if there is a canonical tag referencing the wrong version of the page or resulting in what would appear to be an infinite loop (e.g., https://domain.com canonicalizes to www.domain.com, while www.domain.com is 301 redirected to https://domain.com).

- Check the Analytics Code

Has someone inadvertently removed the tracking code from some or all pages? Use Screaming Frog to check Analytics. If something else isn’t working, you could do a quick GA audit.

- Check Google Search Console (GSC)

Look for any error messages, an unusual spike in crawl errors, malware, hacking, unnatural link messages, etc.
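If you'd rather script the first few checks than view source by hand, here is a minimal Python sketch using only the standard library. It assumes you have already fetched the page HTML, the response headers and the robots.txt text yourself; the function names `check_page` and `robots_txt_blocks` are made up for this example and are not part of any tool mentioned in this post.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class _HeadTagParser(HTMLParser):
    """Collects robots meta directives and the canonical URL from a page."""

    def __init__(self):
        super().__init__()
        self.robots_directives = []
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values may be None.
        attrs = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attrs.get("name", "").lower() in ("robots", "googlebot"):
            content = attrs.get("content", "")
            self.robots_directives += [d.strip().lower() for d in content.split(",")]
        elif tag == "link" and "canonical" in attrs.get("rel", "").lower().split():
            self.canonical = attrs.get("href")


def check_page(html, headers):
    """Report noindex signals and the canonical URL for one page.

    `headers` is a plain dict of response headers (hypothetical input shape).
    """
    parser = _HeadTagParser()
    parser.feed(html)
    # Header names are case-insensitive, so normalize before the lookup.
    lowered = {name.lower(): value for name, value in headers.items()}
    x_robots = lowered.get("x-robots-tag", "").lower()
    return {
        "meta_noindex": "noindex" in parser.robots_directives,
        # Substring match, so "googlebot: noindex" is caught as well.
        "header_noindex": "noindex" in x_robots,
        "canonical": parser.canonical,
    }


def robots_txt_blocks(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt text disallows `url`."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return not rules.can_fetch(user_agent, url)
```

Run `check_page` against the homepage and a couple of main templates, and compare the reported canonical against the URL you actually requested. A mismatch isn't always a problem, but it's worth a second look.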

If the cause isn’t one of these easy finds (the car does have gas in it), you’ll want to gather more data and look at whether or not you’ve been hit by a Panda or a Penguin update.

Step 2: Gather More Data and Check for Correlations with Google Algorithm Updates

Panda and Penguin represent the bulk of issues related to algorithm updates, so starting there may save you some time. Here are some questions to answer:

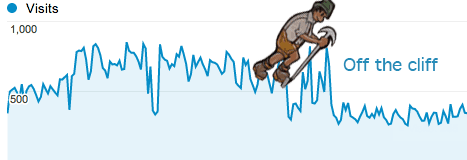

- When did the decrease start?

- Does it correlate with an algorithm update?

- Is the traffic decrease seasonal (check year-over-year data) or is it due to ranking drops?

- Is it a group of pages/keywords or is it sitewide?

- Compare top keywords or keyword groups pre-drop and post-drop to narrow down what has been affected.

- Were there successful PR or media mentions prior to the drop that temporarily inflated traffic numbers?

- Is the drop just Google or across all search engines (compare post-decrease to pre-decrease and view by search engine)?
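The search-engine comparison in the last question is easy to put into numbers. Below is a rough Python sketch, assuming you have exported organic sessions per search engine for equal-length periods before and after the drop. The function names and the 20% threshold are illustrative choices, not a standard.

```python
def percent_change(before, after):
    """Percentage change from `before` to `after`, or None if `before` is 0."""
    if before == 0:
        return None
    return round((after - before) / before * 100, 1)


def compare_by_engine(pre, post):
    """Map each search engine to its percentage change in sessions."""
    return {
        engine: percent_change(sessions, post.get(engine, 0))
        for engine, sessions in pre.items()
    }


def engines_that_dropped(changes, threshold=-20.0):
    """List engines whose change is at or below `threshold` percent."""
    return sorted(
        engine
        for engine, change in changes.items()
        if change is not None and change <= threshold
    )
```

If only Google shows up in `engines_that_dropped`, an algorithm update becomes a more likely suspect. If every engine dropped by a similar amount, look harder at seasonality, tracking problems or site changes. The same `percent_change` helper works for the year-over-year check.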

There are many posts that do a great job of discussing these impacts, such as this and this. Things may get a bit more complex at this point, but if you’ve identified an algorithmic correlation, you’re one step closer to implementing a fix.

If at this point you’ve ruled out the obvious, and it’s not an algorithm update, now’s the time to dig deeper and find the culprit.

Step 3: Potential Causes (Other Than Penguin or Panda)

Has anything changed on the site around the time of the decrease that could have had an impact? Review and document the changes, and then determine whether any of them is the cause. Look for changes to:

- Navigation

- Page redirects

- On-page copy

- Title and H1 tags

- Site architecture

- Internal linking

- The number of published URLs

- Site speed

These changes can have major impacts on performance. For example, if you reorganized your navigation and everything moved just two more clicks away from the homepage, that can have a negative impact on the way link equity is shared. In some cases, Google may no longer crawl pages it previously found.
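To make the navigation example concrete, here is a small Python sketch that measures click depth from the homepage with a breadth-first search, before and after a change. It assumes you already have the internal link graph as a dict mapping each page to the pages it links to (for instance, from a crawl export). The function names are hypothetical.

```python
from collections import deque


def click_depths(links, home="/"):
    """Fewest clicks needed to reach each page from `home`."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def pages_pushed_deeper(old_links, new_links, home="/"):
    """Pages that are now more clicks away, or no longer reachable.

    Returns {page: (old_depth, new_depth)}; new_depth is None when the
    page cannot be reached from the homepage in the new structure.
    """
    old = click_depths(old_links, home)
    new = click_depths(new_links, home)
    report = {}
    for page, old_depth in old.items():
        new_depth = new.get(page)
        if new_depth is None or new_depth > old_depth:
            report[page] = (old_depth, new_depth)
    return report
```

Pages that come back with a `None` depth are the ones to worry about first, since they have been orphaned from the internal navigation.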

Step 4: Tools for Further Analysis

In addition to getting those answers, it’s a good idea to leverage these tools:

- Dig deeper into GSC to find any errors or issues (indexation bloat, sitemap problems, etc.).

- Look at the campaign in Moz for new errors.

- Look at historical Moz data (campaign>competitive analysis>history) to see if the site’s Moz metrics decreased over time and if that correlates with the drop.

- Do a site:domain search and see if anything looks off.

- Run the site through https://www.siteliner.com; are there major internal duplicate content issues?

- Run the site through https://www.copyscape.com; are there major external duplicate content issues?

- Use Panguin, in tandem with your Google Analytics account, to get a more granular sense of what algorithmic updates may have caused your decline. Remember that there is more than Penguin and Panda at work in the SEO world; Google’s other updates relate to issues such as mobile usability and local optimization, and can also trigger traffic and ranking declines.
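If you want to sanity-check the timing yourself, the idea of lining up a traffic drop with update dates can be sketched in a few lines of Python. This is not how Panguin works internally; it is a simple illustration that assumes you have daily organic sessions keyed by date and your own list of update dates. The 7-day baseline, the 30% drop threshold and the function names are all arbitrary assumptions.

```python
from datetime import date, timedelta


def first_drop_day(daily_sessions, baseline_days=7, drop_ratio=0.7):
    """First day whose sessions fall below drop_ratio x the prior average.

    `daily_sessions` maps datetime.date -> sessions. Returns None if no
    day falls that far below the average of the preceding baseline_days.
    """
    days = sorted(daily_sessions)
    for i in range(baseline_days, len(days)):
        window = days[i - baseline_days:i]
        baseline = sum(daily_sessions[d] for d in window) / baseline_days
        if daily_sessions[days[i]] < baseline * drop_ratio:
            return days[i]
    return None


def updates_before(drop_day, updates, window_days=7):
    """Names of updates dated within window_days on or before drop_day.

    `updates` maps an update name to its date; supply real dates from a
    source you trust, since none are hard-coded here.
    """
    return sorted(
        name
        for name, day in updates.items()
        if timedelta(0) <= drop_day - day <= timedelta(days=window_days)
    )
```

A match only shows that the timing lines up. You still need to confirm that the affected pages and keywords fit what that update targeted.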

Step 5: Develop a Plan to Fix the Problem

Based on the analysis above, research best practices to address the issues and prioritize your recommendations. The process looks a bit like this:

- Compile your data regarding the timeframe and severity of the traffic decline.

- Work up a step-by-step process for fixing the issues.

- Communicate everything to the client or your boss.

- Employ your plan for addressing the decline.

- Monitor results and make course adjustments as necessary.

Step 6: Lessons Learned

Once you’ve identified the problem and fixed it, use this as a learning opportunity. Let your client or team know what caused the issue, how you addressed it and how it can be avoided in the future.

If you’ve got anything to add to the checklist, please don’t hesitate to leave it in the comments below.

If it’s a recently redesigned/designed WordPress site that’s running WordPress SEO by Yoast that’s the victim of a sitewide no-indexing, chances are it’s because someone working on the site got tired of seeing:

“Huge SEO Issue: You’re blocking access to robots. You must go to your Reading Settings and uncheck the box for Search Engine Visibility. I know, don’t bug me.”

They misguidedly pressed the “don’t bug me” button and didn’t go back and change the status once the site was moved from development to production. I’ve seen it too many times! Getting the site re-indexed quickly after this is not fun, but can be done for critical pages using manual page submissions.

Love your checklist!

Excellent point Karie. Thanks for sharing!

In addition to dev mode, this has happened on my client sites at random, not around any redesign or launch. A good follow-up would probably be to take screenshots of the different plugin settings and share them with clients to show what not to do and what to check or uncheck.

In “Check the Obvious” (Step 1), we should also check:

1. Whether someone has removed URLs in the webmaster tool.

2. Whether the URL parameter option of the webmaster tool has been used incorrectly.

Good additions Ankit. Thanks!