Editor’s note: This post was originally published in 2018 and has been updated since to ensure accuracy and to adhere to modern best practices.

When you’re an SEO managing a website for years and publishing content consistently, there comes a point where things can seem out of control. You’ve published so many test pages, thank you pages, and articles that you’re not even sure which URLs are relevant anymore.

With every Google algorithm update comes a traffic spike for websites that focus on quality over quantity. To join that elite list, you need to make sure every URL on your website that’s crawled by search engines serves a purpose and is valuable to the user. In fact, if you have too many low-quality content pages on your site, Google may not even crawl every page on your site!

By allowing your website to grow in unnecessary size, you’re potentially leaving rankings on the table and wasting valuable crawl budget. Knowing every URL Google has in its index allows you to flag any potential technical errors on your website and clean up any low-quality pages — all to keep your website quality high.

In this article, we’ll cover how to identify index bloat by finding every URL indexed by Google and how to fix these issues to save your crawl budget.

If you’d rather have an expert do it for you, contact our team today to get started.

Table of Contents

- What is Index Bloat

- Identifying Index Bloat

- How to Find All Indexed Pages on Your Site

- Deciding Which Pages to Remove

- How to Remove URLs from Google’s Index

- Results from Fixing Index Bloat

Note: We can help you spot and fix issues on your website that are harming your overall ranking. Contact us here.

What is Index Bloat?

Index bloat is when your website has dozens, hundreds, or thousands of low-quality pages indexed by Google that don’t serve a purpose to potential visitors.

This causes search crawlers to spend too much time crawling through unnecessary pages on your site instead of focusing their efforts on pages that help your business. It also creates a poor user experience for your website visitors.

Index bloat is common on eCommerce sites with a large number of products, categories, and customer reviews. Technical issues can cause the site to be inundated with low-quality pages that are picked up by search engines.

In short, index bloat wastes your site’s crawl budget and can delay the crawling of the pages that matter. Keeping a clean website means search engines will only index the URLs you want visitors to find.

Index Bloat in Action: An Example

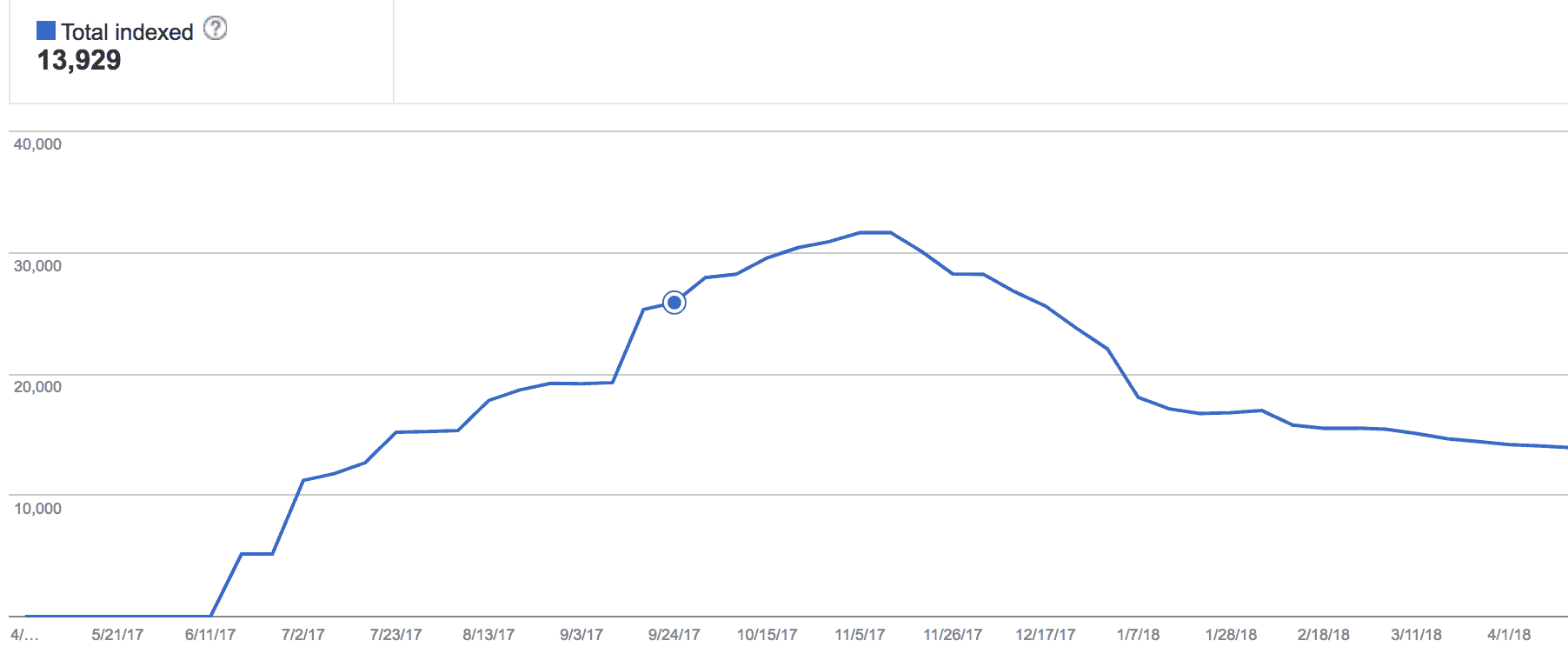

An eCommerce site that we worked with a few years ago was expected to have around 10,000 pages. But, when we looked in Google Search Console (GSC), we saw — to our surprise — that Google had indexed 38,000 pages for the website.

That was way too high for the size of the site.

(Hint: You can find these numbers for your site at “Search Console” > “Indexing” > “Pages.”)

That number had risen dramatically in a short period. Originally, the site had only 16,000 pages indexed, according to GSC.

What was happening?

A problem with the site’s software was creating thousands of unnecessary product pages. At a high level, any time the website sold out of its inventory for a brand (which happened often), the site’s pagination system created hundreds of new pages. In turn, the glitch caused website indexation to increase drastically — and SEO performance to suffer.

Identifying Index Bloat

If you start noticing a sharp increase in the number of indexed pages on your site, it may be a sign that you’re dealing with an index bloat issue.

Even if the overall number of pages on your site isn’t going up, you might still be carrying unnecessary pages from months or years ago. These pages could be slowly chipping away at your relevancy scores as Google makes changes to its algorithm.

If you have too many low-quality pages in the index, Google could overlook important pages on your site and instead spend its time crawling less important parts of your site, including:

- Archive pages

- Tag pages (on WordPress)

- Search results pages (mostly on eCommerce websites)

- Old press releases/event pages

- Demo/boilerplate content pages

- Thin landing pages (<50 words)

- Pages with a query string in the URL (tracking URLs)

- Images as pages

- Auto-generated user profiles

- Custom Post types

- Case study pages

- Thank You pages

- Individual testimonial pages

It’s a good idea to audit these pages periodically to ensure no index bloat issues are occurring or silently developing (more on that below).

How to Find All Indexed Pages on Your Website

When your site is crowded with URLs, identifying which ones are indexed can seem like finding the proverbial needle in the haystack.

Start by estimating the total number of indexed pages: Add the number of products you carry, the number of categories on your site, any blog posts, and any support pages.
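As a rough illustration of that estimate, the sketch below adds up page counts and compares the total with the indexed figure from Search Console. The individual counts are made-up placeholders chosen to sum to the 10,000 pages from the earlier example, and the function name is hypothetical.

```python
def index_bloat_ratio(expected_pages: int, indexed_pages: int) -> float:
    """Return how many indexed pages exist for each page you expect."""
    if expected_pages <= 0:
        raise ValueError("expected_pages must be positive")
    return indexed_pages / expected_pages


# Placeholder counts (assumptions for illustration only).
page_counts = {
    "products": 8500,
    "categories": 400,
    "blog_posts": 1000,
    "support_pages": 100,
}
expected = sum(page_counts.values())

# 38,000 is the indexed count from the example earlier in the article.
ratio = index_bloat_ratio(expected, indexed_pages=38_000)
print(f"Expected {expected} pages; indexed/expected ratio = {ratio:.1f}")
```

A ratio well above 1 suggests Google is indexing far more URLs than you intended, which is a cue to dig into the granular steps that follow.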

You should also take a more granular approach to find all the pages on your website. Here are our suggestions:

- Create a URL list from your sitemap: Ideally, every URL you want to be indexed will be in your XML sitemap. This is your starting point for creating a valid list of URLs for your website. (Use this tool to create a list of URLs from your sitemap URL.)

- Download your published URLs from your CMS: If you use a WordPress CMS, consider trying a plugin like Export All URLs. Use this to download a CSV file of all published pages on your website.

- Run a site search query: Run a search query for your website (site:website.com) and make sure to replace website.com with your actual domain name. The SERPs will give you an estimated number of URLs in Google’s index. There are also tools available that can help scrape a URL list from search.

- Look at your Page Indexing Report in Google Search Console: This tool tells you how many valid pages are indexed by Google. Download the report as a CSV.

- Analyze your log files: Log files tell you which pages on your website are most visited, including those you didn’t know users or search engines were viewing. Access your log files directly from your hosting provider’s backend, or contact them and ask for the files. Conduct a log file analysis to reveal underperforming pages.

- Use Google Analytics 4: You’ll want a list of URLs that drove page views in the last year. Go to “Reports → Pages and Screens.” Change your primary dimension to “Page Path and Screen Class.” Export as a CSV.
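If you would rather script the sitemap step from the list above, here is a minimal sketch that pulls every `<loc>` entry out of a standard XML sitemap using only Python's standard library. The sample sitemap and the `urls_from_sitemap` name are assumptions for illustration; a sitemap index file (one that lists other sitemaps) would need an extra pass.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def urls_from_sitemap(xml_text: str) -> list[str]:
    """Return the URL inside every <loc> element of a sitemap document."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall(".//sm:loc", SITEMAP_NS)
        if loc.text
    ]


# Hypothetical sitemap content; in practice, read your own sitemap file.
sample_sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/products/widget</loc></url>
</urlset>"""

for url in urls_from_sitemap(sample_sitemap):
    print(url)
```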

Once you’ve consolidated all of your collected URLs and removed duplicates and URLs with parameters, you’ll have your final list of URLs.
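The consolidation step can be sketched in a few lines. The example below merges several URL lists, skips any URL with a query string, and removes duplicates. Treating trailing-slash and upper/lowercase host variants as the same URL is an assumption that will not suit every site, and the sample lists are invented.

```python
from urllib.parse import urlsplit


def consolidate_urls(*url_lists):
    """Merge URL lists, dropping parameterized URLs and duplicates."""
    seen = set()
    final = []
    for urls in url_lists:
        for url in urls:
            parts = urlsplit(url.strip())
            if parts.query:
                # URLs with parameters are left out of the final list.
                continue
            # Assumption: ignore host case and a trailing slash when comparing.
            path = parts.path.rstrip("/") or "/"
            normalized = f"{parts.scheme.lower()}://{parts.netloc.lower()}{path}"
            if normalized not in seen:
                seen.add(normalized)
                final.append(normalized)
    return final


# Hypothetical exports from the sitemap, the CMS, and Google Analytics.
sitemap_urls = ["https://www.example.com/", "https://www.example.com/blog/post-1/"]
cms_urls = ["https://www.example.com/blog/post-1", "https://www.example.com/thank-you"]
ga_urls = [
    "https://www.example.com/blog/post-1?utm_source=news",
    "https://WWW.example.com/thank-you",
]

final_urls = consolidate_urls(sitemap_urls, cms_urls, ga_urls)
print(final_urls)
```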

Use a site crawling tool like Screaming Frog, connect it with Google Analytics, GSC, and Ahrefs, and start pulling traffic data, click data, and backlink data to analyze your website.

You can use the data to see which URLs on your website are underperforming — and, therefore, don’t deserve a spot on your site.
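One way to turn that combined data into a shortlist is to set thresholds for traffic and links and flag anything below them. The sketch below assumes you have already exported sessions, clicks, and backlink counts per URL; the field names, sample rows, and threshold values are all hypothetical, so tune them to your own site.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    sessions: int   # e.g. from Google Analytics, last 12 months
    clicks: int     # e.g. from GSC, last 12 months
    backlinks: int  # e.g. from a backlink tool


def flag_underperforming(pages, max_sessions=10, max_clicks=5):
    """Return URLs with little traffic, few clicks, and no backlinks."""
    return [
        p.url
        for p in pages
        if p.sessions <= max_sessions
        and p.clicks <= max_clicks
        and p.backlinks == 0
    ]


# Invented sample rows for illustration.
pages = [
    PageStats("/best-sellers", sessions=1200, clicks=300, backlinks=4),
    PageStats("/old-press-release", sessions=2, clicks=0, backlinks=0),
    PageStats("/thin-tag-page", sessions=0, clicks=0, backlinks=0),
    PageStats("/linked-but-quiet", sessions=1, clicks=0, backlinks=3),
]

flagged = flag_underperforming(pages)
print(flagged)
```

Pages with backlinks are deliberately left off the list here, since they usually call for a redirect rather than a straight removal.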

How to Decide Which Pages to Delete or Remove

Deleting content from your site may seem contrary to all SEO rules. For years, you’ve been told that adding fresh content on your site increases traffic and improves SEO.

But when you have too many pages on your website that don’t add value to your users, some of those pages are probably doing more harm than good.

Once you’ve identified those low-performing pages, you have four options:

- Keep the page “as-is” by adding internal linking and finding the right place for it on your website.

- Create a plan to optimize the page.

- Leave it unchanged because it’s specific to a campaign — but add a noindex tag.

- Delete the page, but set up a 301 redirect from its URL to a relevant page.

In most cases, the easiest and most efficient choice is the last one. If a blog post has been on your website for years, has no backlinks pointing to it, and no one ever visits it, your time is better spent removing that outdated content. That way, you can focus your energy on fresh content that’s optimized from the start, giving you a better shot at SEO success.

If you’re trying to decide which of the four paths above to take, we’ve got a few suggestions:

1. Use our free tool to find underperforming pages — and then delete them.

The Cruft Finder Tool is a free tool we created to identify poor-performing pages. It’s designed to help eCommerce site managers find and remove thin content pages that are harming their SEO performance.

The tool sends a Google query about your domain and — using a recipe of site quality parameters — returns page content it suspects might be harming your index ranking.

After you use the tool, mark any page that is identified by the Cruft Finder tool and gets very little traffic (as seen in Google Analytics). Consider removing those pages from your site.

The video below is hosted on YouTube. If you need assistance with viewing the video, please contact info@goinflow.com.

For a more in-depth review of your website content performance, you can also download our eCommerce Content Audit Tool.

2. Update any necessary pages getting little traffic.

If a URL has valuable content you want people to see — but it’s not getting any traffic — it’s time to restructure.

Ask yourself:

- Is it possible to consolidate pages with another page on a similar topic?

- Can the page be optimized to better focus on the topic?

- Could you promote the content better through internal links?

- Could you change your navigation to push traffic to that particular page?

Make sure that all your static pages have robust, unique content. If Google’s index includes thousands of pages on your site with sparse or similar content, it can lower your relevancy score.

3. Prevent internal search results pages from being indexed.

Not all pages on your site should be indexed. One major example: search results pages.

You almost never want search pages to be indexed; other pages with higher-quality content are better places to send traffic. Internal search results pages are not meant to be entry pages.

For example, using the Cruft Finder tool for one major retail site, we discovered they had more than 5,000 search pages indexed by Google!

If you find this issue on your own site, follow Google’s instructions to get rid of search result pages — but do so carefully. Pay attention to details about temporary versus permanent solutions, when to delete pages and when to use a noindex tag, and more.
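Before applying any fix, it can help to count how many internal search URLs appear in your URL list. The sketch below is one hedged way to spot them. The `/search` path and the `s` and `q` parameter names are assumptions based on common platform defaults, not something specific to your site, so adjust them as needed.

```python
from urllib.parse import parse_qs, urlsplit

# Assumed patterns for internal search pages; change these for your platform.
SEARCH_PATH = "/search"
SEARCH_QUERY_PARAMS = {"s", "q"}


def is_internal_search_url(url: str) -> bool:
    """Guess whether a URL is an internal search results page."""
    parts = urlsplit(url)
    if parts.path == SEARCH_PATH or parts.path.startswith(SEARCH_PATH + "/"):
        return True
    params = parse_qs(parts.query, keep_blank_values=True)
    return any(name in SEARCH_QUERY_PARAMS for name in params)


# Hypothetical URLs for illustration.
urls = [
    "https://www.example.com/search?q=red+shoes",
    "https://www.example.com/?s=boots",
    "https://www.example.com/products/red-shoes",
    "https://www.example.com/search-engine-tips",
]
search_urls = [u for u in urls if is_internal_search_url(u)]
print(search_urls)
```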

If this gets too far into technical SEO for you, reach out to our team for consultation or advice.

How to Remove URLs from Google’s Index

You’ve done the hard part: You’ve identified which pages you want removed from Google’s index.

Now, to make it happen.

There are five simple ways to remove pages from Google’s index or keep them out of it.

(If your eCommerce website has a lot of zombie pages on it, see our in-depth SEO guide for fixing thin and duplicate content.)

1. Use noindex in the meta robot tag.

We want to definitively tell search engines what to do with a page whenever possible. The noindex tag tells search engines like Google not to index the page.

This tag is better than blocking pages with robots.txt, and it’s easy to implement. Automation is also available for most CMS.

Add this tag inside the <head> section of the page’s HTML code:

<META NAME="ROBOTS" CONTENT="NOINDEX, FOLLOW">

“Noindex, Follow” means search engine crawlers (like Googlebot) should not index the page, but they can crawl/follow any links on that page.
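To confirm the tag actually made it onto a page, you could check the HTML for a robots meta tag. The following is a minimal sketch using Python's built-in HTML parser; the class and function names are hypothetical, and it only inspects the meta tag (it does not look at an `X-Robots-Tag` HTTP header, which can also carry a noindex directive).

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives from any <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # The parser lowercases attribute names; values may be None.
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() == "robots":
            for directive in attr.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the page's robots meta tag includes noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


page = '<html><head><META NAME="ROBOTS" CONTENT="NOINDEX, FOLLOW"></head></html>'
print(has_noindex(page))
```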

2. Set up the proper HTTP status code (2xx, 3xx, 4xx).

If old pages with thin content exist, remove and redirect them (through a 301 redirect) to relevant content on the site. This will maximize your site authority if any old pages had backlinks pointing to them.

It also helps to reduce 404s (if they exist) by redirecting removed pages to current, relevant pages on the site.

Set the HTTP status code to “410” if content is no longer needed or not relevant to the website’s existing pages. A 404 status code is also okay, but a 410 typically gets a page out of a search engine’s index faster.
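The decision described in this step can be summarized as a small function. This is only a sketch of the reasoning above, not a rule from Google: the function name and its three inputs are assumptions, and real decisions should also weigh traffic and backlinks for each page.

```python
from typing import Optional


def choose_status_code(
    keep_page: bool,
    redirect_target: Optional[str],
    permanently_gone: bool = True,
) -> int:
    """Pick an HTTP status code for a page under review."""
    if keep_page:
        return 200  # page stays live
    if redirect_target:
        return 301  # removed, but relevant content exists elsewhere
    # Removed with nothing relevant to redirect to.
    return 410 if permanently_gone else 404


# Hypothetical cases for illustration.
print(choose_status_code(False, "/blog/updated-guide"))      # old post with a newer version
print(choose_status_code(False, None))                       # content no longer needed
print(choose_status_code(False, None, permanently_gone=False))
```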

3. Set up proper canonical tags.

Adding a canonical tag in the page’s <head> tells search engines which version of a page they should index.

You should ensure that product variants (mostly set up using query strings or URL parameters) have a canonical tag pointing to the preferred product page. This will usually be the main product page, without query strings or parameters in the URL that filter to the different product variants.
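For variant URLs built with query strings, the preferred URL can often be derived by dropping the parameters. The sketch below assumes exactly that setup (variants differ only by query string), which will not hold for every store; the function names and sample URL are hypothetical.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url: str) -> str:
    """Strip the query string and fragment to get the main product URL."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


def canonical_link_tag(url: str) -> str:
    """Build the <link rel="canonical"> tag for a variant URL."""
    return f'<link rel="canonical" href="{escape(canonical_url(url), quote=True)}">'


variant = "https://www.example.com/products/t-shirt?color=blue&size=m"
print(canonical_link_tag(variant))
```

The generated tag would go in the `<head>` of each variant page, pointing back at the main product page.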

4. Update the robots.txt file to “disallow.”

The robots.txt file tells search engines what pages they should and shouldn’t crawl. Adding the “disallow” directive within the file will stop Google from crawling zombie/thin pages, but it won’t remove pages that are already in Google’s index.

The important technical SEO lesson here is that blocking is different from noindexing. In our experience, most websites end up blocking pages from robots.txt, which is not the right way to fix the index bloat issue.

Blocking these pages with a robots.txt file won’t remove these pages from Google’s index if the page is already in the index or if there are internal links from other pages on the website.

When pages are already indexed and have internal links from other pages of the site, you can also remove those internal links once the destination page is set to “Disallow.”

If your goal is to keep a page out of the index, add the “noindex” tag to the page’s <head> instead, and leave the page crawlable so Google can see the tag.
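To see the difference between blocking and noindexing in practice, you can test URLs against your robots.txt rules. This sketch uses Python's standard `urllib.robotparser`, which follows the basic robots.txt rules and may not match Google's own parser in every edge case. The sample rules, URLs, and helper names are assumptions.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content.
robots_txt = """\
User-agent: *
Disallow: /search/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())


def is_crawlable(url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if robots.txt allows this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


def noindex_can_be_seen(url: str, has_noindex_tag: bool) -> bool:
    """A noindex tag only helps if crawlers are allowed to fetch the page."""
    return has_noindex_tag and is_crawlable(url)


blocked = "https://www.example.com/search/red-shoes"
allowed = "https://www.example.com/products/widget"
print(is_crawlable(blocked), is_crawlable(allowed))
print(noindex_can_be_seen(blocked, has_noindex_tag=True))
```

If the last check comes back false for a page you want deindexed, the disallow rule is hiding your noindex tag from crawlers.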

5. Use the URL Removals Tool in Google Search Console.

Adding the “noindex” directive might not be a quick fix, and Google might keep indexing the pages, which is why the URL Removals Tool can be handy at times.

That said, use this method as a temporary solution. When you use it, pages are removed from Google’s index quickly (usually within a few hours depending on the number of requests).

The Removals Tool works best when used together with a noindex directive. Remember that removals you make are reversible.


Why It’s Important to Fix Index Bloat: Results That Can Follow

We’ll say it again: You only want search engines to focus on the important URLs on your website. Getting rid of those that are low-quality will make that easier.

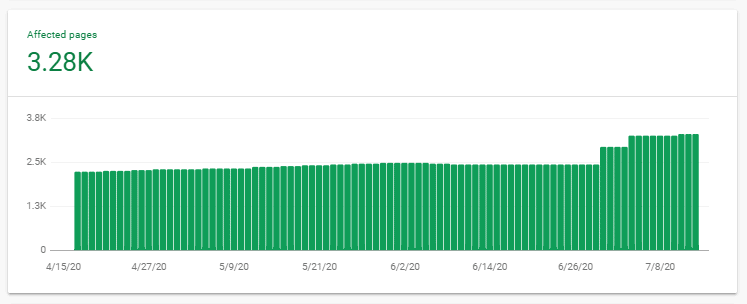

Here’s a graph of indexed pages from a client whose internal search result pages were being indexed. We helped them implement a technical fix to keep it from happening.

In the graph, the blue dot is when the fix was implemented. The number of indexed pages continued to rise for a bit, then dropped significantly.

Year over year, here’s what happened to the site’s organic traffic and revenue:

3 Months Before the Technical Fix

- 6% decrease in organic traffic

- 5% increase in organic revenue

3 Months After the Technical Fix

- 22% increase in organic traffic

- 7% increase in organic revenue

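Before vs. After

- 28-percentage-point swing in year-over-year organic traffic (from a 6% decrease to a 22% increase)

- 2-percentage-point gain in year-over-year organic revenue growth (from a 5% increase to a 7% increase)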

This process takes time. For this client, it took three full months before the number of indexed pages returned to the mid-13,000s, where it should have been all along.

Conclusion

Your website needs to be a useful resource for search visitors. If you’ve been in business for a long time, you should perform site maintenance every year. Analyze your pages frequently, make sure they’re still relevant, and confirm Google isn’t indexing pages that you want hidden.

Knowing all of the pages indexed on your site can help you discover new opportunities to rank higher without needing to always publish new content.

In essence, regularly maintaining and updating a website is the best way to stay ahead of algorithm updates and keep growing your rankings.

Note: Interested in a personalized strategy for your business to reduce index bloat and raise your SEO performance? Our digital marketing experts can help. Request a free proposal here.

hi Chris, we have the opposite problem of what most of the comments talk about. Three pages on our website generate 25% of the total traffic, however they don’t bring any single lead or contribute to the revenue in no way. It’s just traffic with 80% bounce rate.

Shall we remove them you think?

Luca — Do not delete these pages; they’re very important! We recommend first ensuring the traffic is human, not bot. Then, look at the keyword phrases bringing that traffic to your site to understand what your users are expecting when they come to these pages (and create a hypothesis on why they’re bouncing). If the phrases match your business goals, address the page experience to entice them to stay on your site.

You can also create pages related to your products, services, or business overall — and then leverage these high-traffic pages to link internally and spread authority to the new pages. Over time, these newer business-focused pages should make up more of your traffic.

Hi Mike

Great article, thank you! I have identified thin content posts on my website going back 10 years. I use wordpress and I have switched the posts to draft. Is that a good idea, or not?

Many thanks, Julien

Hi Julien,

Setting the posts to drafts essentially makes them 404. That could be fine if they are thin posts as you say and not getting any traffic or links.

Many thanks for your reply Mike!

Hi Mike,

Great article. I really enjoyed reading this. Which brings me to a question…

If the website is showing offers. (In my case – cottages website). The business has seasonal and off-season prices. and each package has an individual page. For e.g.: Winter deals – 20% off.

Since it’s seasonal, does it mean the page should be unpublished when the season has passed or still keep it on the site all the time?

Thank you!

Hi Karan,

Ideally you wouldn’t need two different pages for this. A single “evergreen” page where the content changes would probably be the best approach. If that won’t work, you’ll probably want to leave both pages published and indexable year round.

I have just removed lots of pages from the index which are no longer relevant (which I have deleted from the site, old category pages and paginated versions).

no other big changes have happened to the site in months.

However, since doing this, within a couple of weeks we have just had a 30% reduction in organic traffic. Is it possible that fewer pages in the index has triggered a threshold for an algorithmic penalty? for example, we always had spammy links arrive at our site (like everyone does), but never disavowed because there was never an impact on rankings.

Perhaps now we have fewer pages in the index, the spammy links make up a larger proportion in ratio to the pages in the index and therefore an algorithmic penalty has occurred?

Any help on this appreciated

Hi Robin,

I don’t think that is quite how it works re: spammy links making up a larger proportion. If you removed paginated pages from the index it is possible Google no longer has a click path to your products. In addition, if you deleted pages that had good links and or traffic that could also lead to a decline in traffic.

Hi Chris, You talk about low quality pages negatively affecting ranking. So is it the case that low quality pages with a very low page authority due to no traffic or backlinks and poor content negatively affects the overall domain authority? Would de-indexing low quality pages with a low PA help to increase the overall DA, therefore increasing ranking in google searches?

Hi Phil,

If a page has no traffic or backlinks AND it has poor content it is a good candidate for removal from the index. Doing this does not increase your DA, but it does help improve your overall site quality and crawl budget.

Also I have this weird problem: My site is authoritative enough. Whenever I write about a topic, the article will appear in the top 50 at least. For any keyword. Even for the hardest two-word keyword. However, I have an article that doesn’t rank for its keyword. Which is really weird because content-wise it’s the highest quality post of all. It’s long enough. I simply cannot find the reason why my article doesn’t rank. The keyword difficulty is easy enough.

What is even weirder: another one of my pages ranks on #44 place. But that page doesn’t even have the keyword in it, only the company name. What do you think the problem could be? Should I completely rewrite the article?

Also there is no duplicate content or noindex or anything else. Page speed is also fine. Page is in sitemap and indexed in search console. I am only linking internally to the page that is not ranking.

Sorry for offtopic

I’d like to mention that my pages are all above 700 words long. I don’t think this counts as thin content.

Hello Chris. This is my first time on your blog. I was looking especially for this topic.

Here is my situation: I have an affiliate website with around 300 pages. I think at least 30% of those pages never received search engine traffic. I am thinking about deleting all of them or merging them with other pages.

What would you do in my case? In either way I plan to 301 redirect them. However I see you 404d the pages of your client. Why choose 404 instead of 301? I see only disadvantages doing 404.

I have a “deal blog” that was running 20-25 deals per day of very thin, time sensitive deals. It’s a wonder I rank for anything on Google, actually.

Of the 24k posts I am guessing that 23,500 need to be deleted.

Is there a tool that can help me make a list of which posts to delete that you know of?

I have a developer that can maybe even do it in one fell swoop if I can tell him which ones I want gone.

Any ideas, greatly appreciated!

Hi Kate,

It sounds like what you are looking for is more of a content audit tool than a CRUFT finder tool. Your coupon pages we probably wouldn’t consider true CRUFT but if they weren’t getting traffic and have no incoming links they need to be pruned. I’d recommend conducting a site crawl that pulls in GA, GSC, and link data all into one spreadsheet. Then I would set a threshold for traffic and links and prune any page that is under that threshold. You can find our basic process outlined here: https://moz.com/blog/content-audit that should help.

Thanks!

Mike

Great post, exactly what I was looking for. I’m in the position where I followed that advice of writing a blog post every week or so for an e-commerce site and years later have lots of similar posts on the topic that aren’t great. So I’m just culling out all the pages that don’t get traffic or have external links. My question is should I stagger out removing the pages over a period of time or should I just ‘unpublish’ them all at once? Would be approx 60-70 pages out of 200 total. Thanks!

Hi Mike,

If the pages aren’t getting any traffic or conversions there shouldn’t be too much risk in pruning the pages at once.

Hope that helps!

Mike

thanks!

Does google penalize your website if you simply delete posts without deindexing first?

not at all. if you deleted posts without redirecting, meaning the user would receive a 404 error, that is totally normal and acceptable assuming it was on purpose.

My question is: when I deleted my post, Search Console showed a 404 technical issue. How do I overcome this issue? Can I disavow all the links and remove them? I don’t find any option. Can you please answer this broadly?

Your post was really helpful.

Hi Jemes,

It’s OK to have 404s showing in search console, this won’t raise any red flags. No need to disavow anything. You should, though, make sure the URLs returning a 404 weren’t receiving much traffic or have any inbound links. If they do, 301-redirect them to the closest related page.

Hi, this post is great and the comments have given me some actionable tasks to start cleaning up old content on my site, GameSkinny.com. We are a news and reviews site for video games. As such, we have a wide swath of content types: news, reviews, lists, opinions, and features. We have staff writers and freelance writers and have been around since 2012.

Some of the content, as has been suggested, could be consolidated, such as X best horror games for X year, etc. However, some pieces, such as some news pieces for example, can’t really be updated as they were, say, on a release of a game or the closing of a studio, etc. Some have very few total views, say in the 100s. Should these be culled completely or updated as best they can be?

Over the past two months, we’ve updated our interlinking strategy as well, writing to get content and writing copy with interlinking to other articles in mind. I think it is too soon to say if the strategy has been working, but it seems logical.

I suppose my main question is this: as a media site that has an extensive archive, what would be the best way to cull underperforming articles so Google doesnt penalize the site? Is it worth culling old, out of date news pieces, for example? What would you suggest? Thanks!

Hi Jonathan,

There’s a lot here! And without more information it’s tough to give one specific recommendation.

The pages you mention certainly could be worth pruning. There is sometimes a benefit to older news-style pieces if people are searching for that type of historical information; if they aren’t, they are probably good candidates for pruning. You could also try to breathe new life into the pages by updating them, adding content, consolidating similar pages into one and updating the LastUpdated meta tag when you do. You could also “topic bucket” the pages – perhaps by game name – and build ‘hub’ style pages for specific games or groups of games. This would help the pages become more crawl-able, and in conjunction with things mentioned above, could help drive more traffic to those pages. But if the articles are old, out of date and nobody is searching for the content they contain, yes they are likely worth pruning.

Be sure the pages don’t have links before you prune! Hope this helps.

Chris

Hi Chris,

Does this tool work only on PHP based ecommerce sites? I am trying to run against java based ecommerce sites(on platforms like IBM WebSphere, Hybris, ATG) and it doesnt seem to work as expected. Thoughts?

Thanks,

NP

the CRUFT Finder tool is now working again. Sorry for the hiccup!

it should work with any ecommerce site regardless of language or platform. That being said I can see the tool is currently broken. We’ll have our devs take a look and make a fix as quickly as possible.

Hi Chris

I run a page where we sell self-written articles by users. We have around 26.000 papers online now, and I see of course that google does not like ALL of them. I would say that about 3000-4000 do not receive visits from google. We look for unique content and plagiate check everything of course, but some content is just not very interesting in googls eyes (sometimes not interesting just now, but later)

Do you think I should simply NOINDEX pages that for example did not get a view in the last 3 months?

I sometimes see, that a page that had no interest/visitors for lets say 2-3 years, suddenly receives a lot more visitors from google (lets say the thematic becomes interesting again, like an article about the “2004 hurricane” so I am nervous about “DELETING” these articles completely. I see the internet also as an “archive” for older things, not just an archive about the last 2-4 years. (website is 12 years old now)

Any suggestion what to do with pages that have unique content but receive no visitors for several months/years? I could NOINDEX the article page, but could put them together on a “group of more articles”, where 40-50 of them are put together (but that overview page does not make sense at all, just overview pages of noindexed articles)

Hi Bodo,

Here are some thoughts and questions…

– How are you making money, from advertising? Do you sell products / services? Have these 3-4k pages that do not receive any traffic generated you any profit?

– What guidelines are users given for these self-written articles? Is keyword targeting considered at all? Can the keyword targeting on the posts be improved?

– Not knowing what the site and articles are about, or how the site is structured, one possibility could be to “topic bucket” the articles, putting them into categories and sub-categories. Then, build out pages with unique content that target these topics and link to all the related articles.

(copied from above reply to John)

A few thousand low/thin quality content pages sounds ripe for the pruning. There may be value in a couple other options…

– Republishing posts with enhanced content and better keyword targeting

– Consolidating similar posts into a more authoritative post

– Combine multiple ‘date based’ URLs into more of an evergreen page, for example, if there’s a post about the XYZ conference from 2015/2016/2017 etc. making it more evergreen could build the strength of the page(s) over time

– Remove (404/410/noindex); noindex is a popular option for eCommerce sites where there are many products that aren’t receiving traffic, or maybe have the manufacturers description and need rewritten – but the product is still for sale. If the page doesn’t have links and isn’t driving traffic, noindex doesn’t make as much sense. Just get rid of it.

Let me know what other questions you have. Hope this helps!

Chris

Hi there,

I have a client with a few thousand blog pages of thin content, 300 words or less. According to GA the vast majority do not get any traffic (or something like one visit a year).

The client is an agency with only about 50 substantive pages, and thousands of “deadwood” blog posts, some dating back 10 years.

About 1% of these thin blog pages get minuscule traffic and yet another 1% have a small handful of links pointing at them, but none of any quality

So I’m just curious if you think it makes more sense to noindex all of these thin pages or to remove and 410 them.

Might be nice to retain a few of the pages that have minimal activity but I don’t think it will reallly help or hurt much.

Would you foresee any issues of taking this agency site from a few thousand indexed pages down to about 100 or less?

Greatly appreciate your thoughts here and keep up the quality content!

Thanks!

Hi John, good question. Typically we pull a years worth of GA data AND backlink data from AHrefs or some backlink tool. It’s important to ensure any pages you’re going to prune – whether 404/410 or using the noindex tag – don’t have backlinks pointing to them and haven’t driven (much) traffic / revenue.

A few thousand low/thin quality content pages sounds ripe for the pruning. There may be value in a couple other options…

– Republishing posts with enhanced content and better keyword targeting

– Consolidating similar posts into a more authoritative post

– Combine multiple ‘date based’ URLs into more of an evergreen page, for example, if there’s a post about the XYZ conference from 2015/2016/2017 etc. making it more evergreen could build the strength of the page(s) over time

– Remove (404/410/noindex); noindex is a popular option for eCommerce sites where there are many products that aren’t receiving traffic, or maybe have the manufacturers description and need rewritten – but the product is still for sale. If the page doesn’t have links and isn’t driving traffic, noindex doesn’t make as much sense. Just get rid of it.

But to answer your overarching question – these pages that aren’t driving any traffic aren’t providing the site with much value, and very well could be holding the ~100 quality pages back.

I agree, it could be worth keeping some pages that drive traffic – and I would make sure those visits aren’t leading to leads/revenue – if so you’d want to keep them… it just depends on how much traffic you’re willing to give up. Hope this helps!

I am not sure whether to use a redirect or not, but I am going to consolidate some pages on my website.

Hi Janice,

It’s usually a good idea to 301-redirect URLs that have been consolidated to the URL to which you consolidated – especially if those pages were indexed and/or received any traffic. Don’t forget to also update your internal links so they don’t point to redirected URLs!

Hi there, did you update the article mentioned in this reply comment?

Thanks

Chris, Thanks for the article. I had a question about low-value pages and whether they should be kept. Some argue that the larger a website, the better. With user engagement now a ranking factor, it seems logical that poor performing pages be removed.

Hi John,

As with many things SEO, I’d say it depends on a number of factors. Also ‘removing’ a page can mean a couple different things. For example, eCommerce sites wouldn’t want to 404 product pages if the product was still in stock. Low performing product pages are typically candidates for a noindex tag. There could also be the case of near-duplicate product pages if a product were available in multiple sizes and/or colors, and the CMS didn’t have the proper functionality to combine multiple URLs into one. In this case we might use canonical tags if there weren’t sufficient search volume to justify different pages for every size/color.

If the poor performing page is strategic content (blog post or similar), we’ve had success by using the “Remove, Improve or Consolidate” strategy. If there are multiple pieces of content on the same or similar topic, they can sometimes be consolidated into a single, more authoritative post. We also often improve posts, which include things like improving keyword targeting and expanding the length of the content (don’t forget to update the DateModified meta tag). Finally, we have rolled out a strategy to 404 pages that just aren’t performing (little to no traffic over a period of time) – for one client we 404’d 90% of their blog posts that received little traffic and saw a boost in rankings, traffic and revenue to their foundational content (category & product pages).

Hope this helps!

Hello Chris, about your 404 strategy, I thought that one should 410 these pages with no traffic, to basically tell Googlebot to not visiting them again? I’m about to do some much needed pruning on my website, with a mix of noindex and 400 errors. My website has 800 pages and I estimate I should delete 200 pages and noindex 100 more. Thank you!

410 would potentially be better if the pages are never coming back.

Its extremely good and very helpful for me.Thanks for sharing this great post.

Hi Chris, I have been exploring the idea of pruning useless pages and reducing index bloat.

I had a question. Once you have identified such pages, do you simply add a noindex tag to them? Are these pages deleted? Redirected?

BTW, really nice article- I’ll be using the Cruft Finder tool 😉

Hey Gurbir… Good question. There are lots of ways to “prune” a page once you’ve identified it isn’t a good current target for your SEO efforts. Our older article on Moz about pruning eCommerce sites is a good place to start. It also contains a link to again a bit older but still relevant overview of our content audit process. We plan to update both these articles soon.

no it doesn’t sound thin, but it still might be low quality, irrelevant, etc.

Hi Rob,

We typically choose 404 when there isn’t any valuable content on the page to merge with another page. Otherwise it probably makes sense to merge pages and 301 redirect.