Editor’s note: This article was first published in 2020 and has been updated for accuracy and to reflect modern practices.

Today, it seems the pay-per-click (PPC) climate is all about platforms rolling out automation. As ad platforms gain more and more data (and, in most situations, keep it to themselves), their machine learning models are getting better and better at predicting the best strategies for ad campaigns.

So, is it really time for your brand to go all-in on automated bidding strategies? Or is manual bidding still important for maximized results?

That’s the question we asked ourselves — and, being the data nerds we are, we decided to run a test to find out.

In this case study, we’ll explain the different experiments we ran to test Smart Bidding vs. manual bidding for one of our clients, as well as the results we discovered.

Spoiler alert: Manual bidding beat automated Google Ads bidding in all major metrics measured across desktop, tablet, and mobile — including revenue, transactions, eCommerce conversion rate, and more.

Background: Gaining the Client’s Trust

Here at Inflow, we follow a dual paid search advertising approach: using automated insights to inform our manual campaign adjustments. In our opinion, it’s the best of both worlds — without leaving clients’ daily budgets to run out of control under full automation.

But, because our job is to improve performance for those clients in any way we can, we’ll never shy away from setting certain factors on “smart” when it makes sense to do so. The only way to discover that? Run some tests.

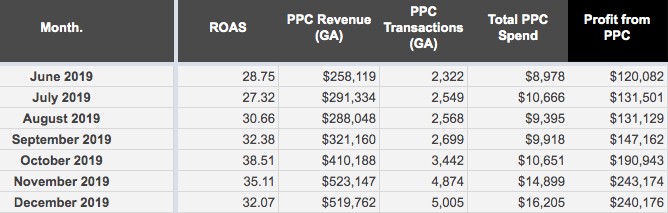

For this case study, we struck a deal with an existing client, who was then only using our conversion rate optimization services. The brand would give us three months to implement and optimize their eCommerce Google Ads strategies (based on opportunities we uncovered during an initial account audit). If we increased profits enough by the third month to cover our service cost, they’d sign on for an ongoing partnership.

In July 2019, we began our work by implementing our proven three-tiered Shopping structure. We reorganized search campaigns by product type and narrowed the account to spend on areas driving the most revenue.

By month one, we increased profit by $11,000. By our deadline of month three, we pushed that even further to $27,000 in profit.

Our trial work gained the client’s trust, allowing us to experiment more after the long-term contract was signed — leading to our manual bidding vs. Smart Bidding test.

Automated vs. Manual Bidding in Google Ads: The Experiment

Our team frequently receives recommendations from Google reps to further automate our accounts. After all, more automation means more money under Google’s control.

As a reminder, Google Ads’ automated strategies include:

- Target Impression Share

- Target CPA

- Target ROAS

- Maximize Clicks

- Maximize Conversions

- Maximize Conversion Value

Typically, we ignore these recommendations, especially for clients for whom we’re already generating a great return on ad spend (ROAS). With this client’s Shopping return sitting at 20x, we didn’t see the need to fix what wasn’t broken.

But, in the name of continuous improvement and learning, we decided to run the experiment on the client’s search campaigns, instead.

Parameters of the Experiment

For this experiment, we ran a Target ROAS test, split 50/50 between the control (manual bidding) and Google’s automation. We also switched the attribution model in Google Ads to data-driven.

We set the test to run for three weeks, to make sure the algorithm had enough time to get “dialed in” to our accounts. The end of the experiment would occur right before Black Friday and Cyber Monday — so we could apply the winning strategy (whichever it was) at 100% for those two important sales days.
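Since the whole test hinges on ROAS, it may help to spell out the arithmetic. The sketch below is only an illustration, not part of our tooling: the `ArmStats` class, the `roas` helper, and every figure in it are hypothetical placeholders rather than the client’s data. It simply divides revenue by ad spend for each arm of a 50/50 split.

```python
from dataclasses import dataclass


@dataclass
class ArmStats:
    """Totals for one arm of the experiment (hypothetical structure)."""
    name: str
    cost: float     # ad spend
    revenue: float  # conversion value (Google Ads) or revenue (Analytics)


def roas(arm: ArmStats) -> float:
    """Return on ad spend: revenue divided by cost."""
    if arm.cost <= 0:
        raise ValueError("cost must be positive to compute ROAS")
    return arm.revenue / arm.cost


# Placeholder figures for illustration only, not the client's results.
control = ArmStats("manual", cost=1000.0, revenue=5000.0)
test = ArmStats("target_roas", cost=1000.0, revenue=4000.0)

for arm in (control, test):
    print(f"{arm.name}: ROAS = {roas(arm):.2f}x")
```

The same calculation can be run twice, once with Google Ads conversion value and once with Google Analytics revenue, which is where attribution differences between the two platforms start to show.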

Results: Week 1

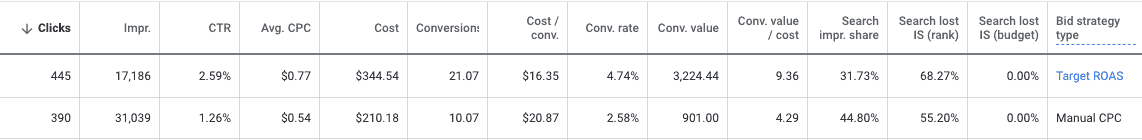

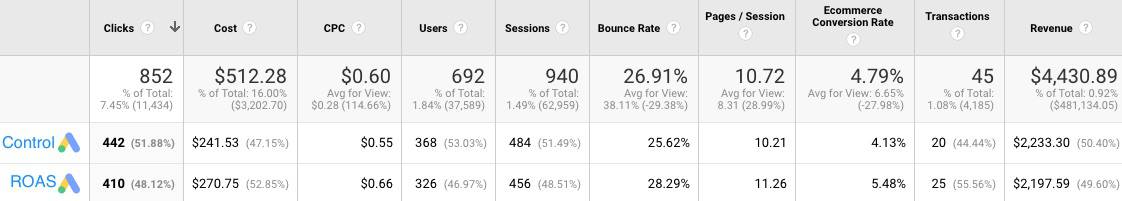

In the first seven days, the Target ROAS campaign jumped ahead to a surprising lead in Google Ads (formerly known as Google AdWords).

But, when we took a closer look in Google Analytics, the first week seemed to be a wash.

This discrepancy made sense; we switched the attribution before starting the test, and the machine was optimizing toward the new attribution model.

But would this translate into more revenue in Google Analytics? Would it continue to get smarter and better? Could our manual adjustments make up the ground?

Results: Week 2

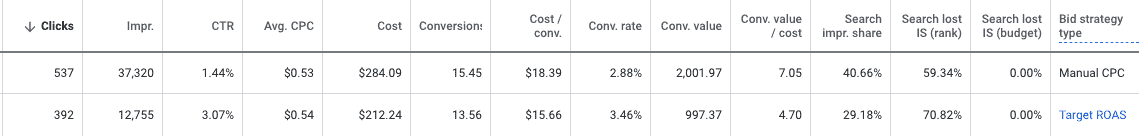

After the first week, we decided to make some bidding adjustments to our control (manual) campaigns. In response, our manual cost-per-click (CPC) campaign bounced back in Google Ads and slightly beat out the Target ROAS campaign in terms of conversion value and ROAS.

Once again, the Google Analytics results were really close. Our manual group had a slight edge in revenue and spend, but the Target ROAS campaign had five more transactions. Got to love that average order value swing!

Heading into Week 3, both the manual and Smart Bidding strategies had “won” a week in Google Ads, and the two were nearly neck-and-neck in Google Analytics. It looked as if it were going to be a photo finish (which, for the record, I was already considering a win).

The Google rep was certain his algorithms were going to crush our manual adjustments, but our campaigns were getting better performance as time went on.

Final Results: Week 3

In the final week, it all came down to the wire: Man vs. Machine.

And it wasn’t even close.

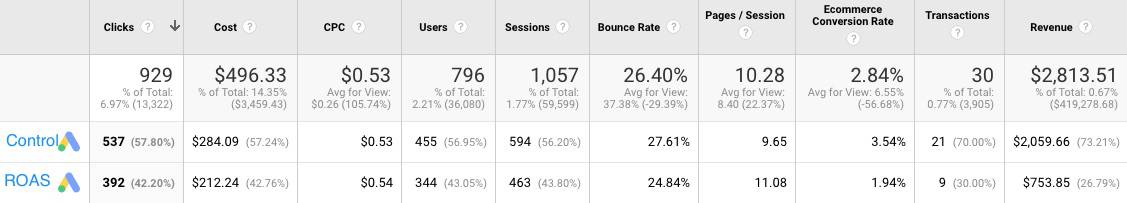

In Google Ads, our manual campaign had more than double the conversion value of the automated campaigns.

In Google Analytics, our manual campaign had nearly triple the revenue and triple the transactions of the automated one.

Coming around the last corner, our campaign took off, while the automated campaign sputtered. This was surprising; we assumed that as the automated campaign received more data each week, it would also get more efficient.

In this experiment, it simply didn’t happen.

A Closer Look at the Results

Most of the time, numbers don’t lie. But the way you look at them can result in different conclusions — which we saw in this experiment.

Google Analytics

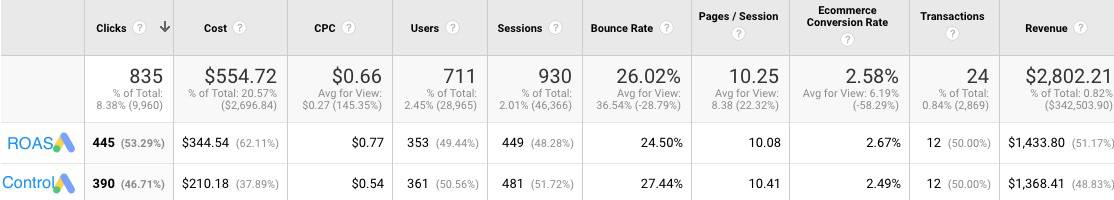

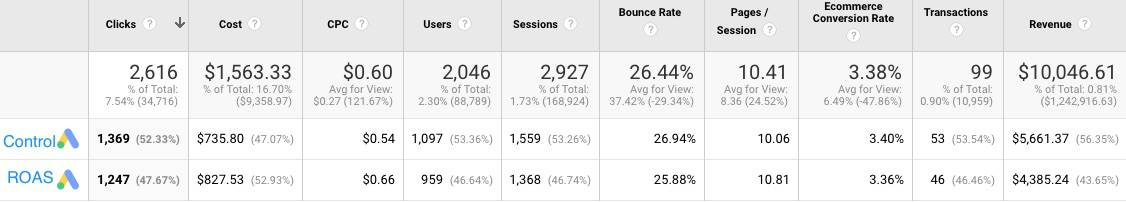

In Google Analytics, the control beat the automated test in:

- Revenue

- Transactions

- eCommerce conversion rate

- Cost

- ROAS

Manual bidding was the clear winner here.

Google Ads

Armed with data-driven attribution, Google Ads was spinning a different tale (which was why our rep immediately declared victory).

In Google Ads, the automated campaign beat our control in:

- Conversions

- Conversion rate

- ROAS

The Common Mistake: Taking Results at Face Value

Now, we’re finally getting to the point of this case study:

Don’t just take results at face value.

Instead of a solid conclusion, our results brought up many questions for our team:

- Why did we lose in Google Ads?

- What did the Target ROAS campaign do better?

- Even if automated campaigns actually won and outperformed the manual bidding, wouldn’t you want to know why or how — so you can improve what you are doing?

So, we took a closer look at the conversion data, breaking out the campaigns by device.

Looking at this data, the manual (control) campaign outperformed the automated test campaign in two areas:

- Desktop

- Tablet

Mobile phones performed much worse in our manual campaign — and understandably so. Up until this point, our team hadn’t been targeting mobile for search campaigns, based on historical low performance and client information.

Average CPC on mobile phones was about $0.16, much lower than our manual computer and tablet bids. Again, this made sense: Our manual bidding strategy was to maximize desktop traffic within the budget and then expand to mobile.

The experiment clearly revealed we needed to move up our plans to focus on mobile.

After realizing our control campaign had won on desktop and tablet (the two areas we focused on) and had only lost overall because we hadn’t been targeting mobile, we chalked up our win, and the Google rep conceded.
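The device breakout described above can be sketched in a few lines of Python. This is a hedged illustration, not our actual reporting setup: the row layout, the function names, and all of the numbers are made up for the example, and in practice the rows would come from a device-segmented export of your own account.

```python
from collections import defaultdict

# Hypothetical rows: (arm, device, cost, revenue). Not the client's data.
rows = [
    ("manual", "desktop", 600.0, 3600.0),
    ("manual", "tablet", 100.0, 400.0),
    ("manual", "mobile", 50.0, 50.0),
    ("automated", "desktop", 400.0, 1600.0),
    ("automated", "tablet", 100.0, 300.0),
    ("automated", "mobile", 300.0, 900.0),
]


def revenue_by_device(rows):
    """Sum revenue per (device, arm) pair."""
    totals = defaultdict(float)
    for arm, device, _cost, revenue in rows:
        totals[(device, arm)] += revenue
    return totals


def winners_by_device(rows):
    """Return the arm with the higher revenue on each device."""
    totals = revenue_by_device(rows)
    devices = {device for device, _arm in totals}
    winners = {}
    for device in devices:
        arms = {arm: rev for (dev, arm), rev in totals.items() if dev == device}
        winners[device] = max(arms, key=arms.get)
    return winners


winners = winners_by_device(rows)
for device in sorted(winners):
    print(f"{device}: {winners[device]}")
```

Splitting the totals this way is what keeps a single overall number from hiding a segment, like mobile in our case, that one arm was never set up to compete in.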

The Next Step: Segmenting Mobile Traffic

After seeing the opportunities awaiting us, we ended up breaking out mobile traffic from the control campaign into its own new campaign. This way, we would have more control over set bids, budgets, and keywords, as these tend to behave differently depending on the device.

The results after the first month:

- Revenue increased by 46%.

- Mobile transactions doubled.

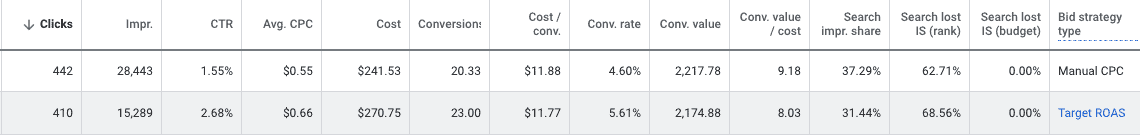

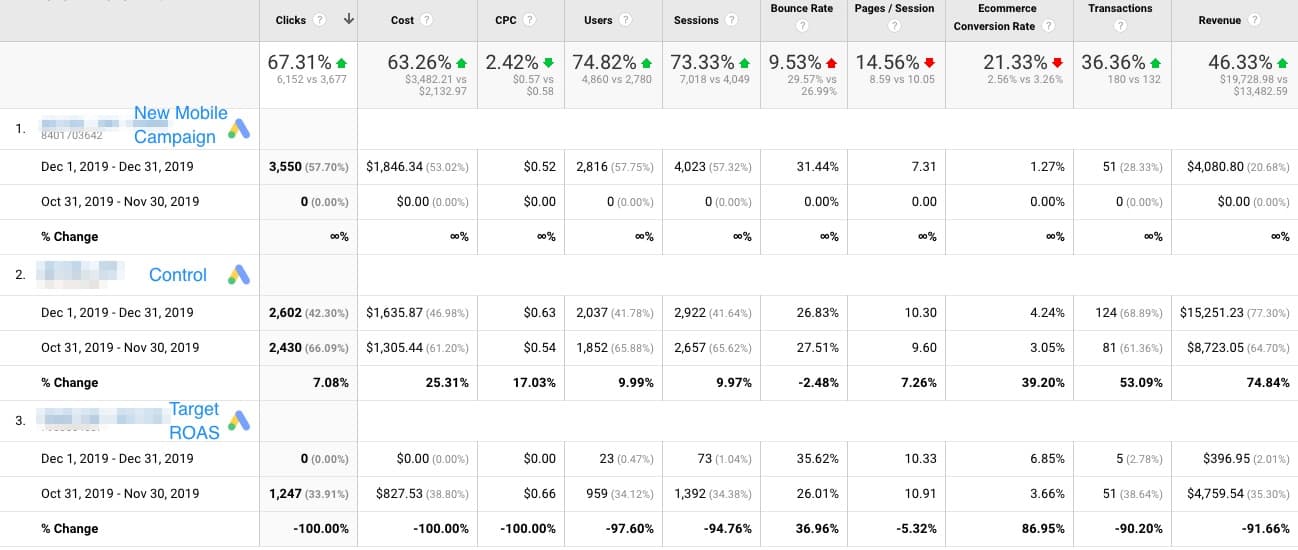

Results: Smart Bidding vs. Manual Bidding After Segmenting Mobile

Now that the control campaign (manual bidding) focused on the higher-converting desktop traffic, our results continued to improve:

- Revenue increased by over $6,000, with only $300 more in ad spend than the test campaign.

- eCommerce conversion rates increased by nearly 40%.

In June 2020, after months of adjustments and optimizations, ROAS for the mobile campaign as measured in Google Analytics was more than 5x, compared to the 2.21x ROAS captured in the screenshot above.
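To put that ROAS change in relative terms, here is a small sketch of the percent-change arithmetic. The `pct_change` helper is a hypothetical name for illustration. Because the later figure is stated only as “more than 5x,” the code treats 5.0 as a lower bound, so the result is a floor rather than an exact lift.

```python
def pct_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    if before == 0:
        raise ValueError("before must be non-zero")
    return (after - before) / before * 100.0


earlier_roas = 2.21      # ROAS from the earlier screenshot
later_roas_floor = 5.0   # "more than 5x", so this is only a lower bound

lift = pct_change(earlier_roas, later_roas_floor)
print(f"Mobile ROAS lift: at least {lift:.0f}%")
```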

It would be well worth another test now to see how the mobile campaign does with a Target ROAS strategy, as it did well with that type of bidding before.

But, from now on, we’ll definitely be rooting for manual bids and adjustments over the machines’.

Conclusion: Take Automation With a Grain of Salt

Obviously, Google wants you to use automated bidding in your paid search campaigns. They want to reduce friction between you and the ad platform, as well as save you time managing your ad accounts. (And, with so many levers to keep track of, it’s easy to get overwhelmed.)

But we strongly recommend against fully automating your Google Ads campaigns without substantial testing and comparison.

When it comes to eCommerce PPC campaigns, there’s a time and place for both automated and manual strategies. Continuing to test, understanding when manual levers are essential, and managing the inputs you can provide to improve automation are all practices eCommerce PPC marketers need to keep in mind when answering the man vs. machine question.

Bottom line: Often, humans are better at monitoring the nuances of campaign management than machines. But that may not always be the case! As with any digital marketing strategy, continue evaluating platform offerings and testing your campaigns to maximize your results over time.

If you’d rather have an expert do it for you, our PPC strategists are here to help. Contact us anytime for a free Google Ads account audit and proposal, based on your unique business needs and goals.

