Performing a content audit to determine which pages should be improved and which should be removed/pruned from Google’s index is an effective and scalable SEO tactic that Inflow has written extensively about in publications like Moz, the Oracle blog, and blogs by Kevy, Kapost, Shopify, Lemonstand, BigCommerce, and more. Part of our eCommerce Marketing Playbook, the Cruft Crusher play is an SEO tactic that uses the content audit process to remove cruft from Google’s index, thereby improving the overall quality of the domain, as well as internal linking factors like PageRank distribution.

About the Play

The word “cruft” is a term developers use to describe superfluous code. Here, the term applies to URLs indexed by search engines. We commonly see a very long tail of indexed URLs that provide little or no value, and many of these may be doing more harm than good to your search engine rankings. The Cruft Crusher will help you do the analysis necessary to make informed decisions about which URLs to safely de-index.

Who’s It For?

SEO Professionals

Tech-Savvy Content Marketers

When to Use It:

This play is a safe, effective bet that nearly always works on large eCommerce websites. The results are easy to track, and the change is easy to roll back in the unlikely event that you need to. However, the best results come from implementing the Cruft Crusher on domains with thousands of pages or more indexed by Google.

How to Execute The Cruft Crusher

- Crawl all indexable URLs
  - This is your content inventory
  - We typically use Screaming Frog

- Gather additional metrics for each URL
  - URL Profiler is a good tool for this
  - The Supermetrics plugin for Excel or Google Sheets is another great option

- Put it all into a dashboard and begin analysis
  - This is your content audit
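The inventory and metrics steps above amount to a join on URL. The Python sketch below assumes two CSV exports: a crawl export with `Address` and `Indexability` columns (the names Screaming Frog uses in its Internal tab export, but check your version) and an analytics export with hypothetical `Landing Page` and `Organic Sessions` columns. It keeps only indexable URLs, matches rows by path, treats URLs with no analytics row as zero sessions, and sorts the result with the lowest-traffic pages first.

```python
import csv
import io
from urllib.parse import urlsplit


def to_path(url: str) -> str:
    """Reduce a full URL or a bare path to 'path?query' so both exports match."""
    parts = urlsplit(url.strip())
    path = parts.path or "/"
    return f"{path}?{parts.query}" if parts.query else path


def build_audit(crawl_csv: str, analytics_csv: str) -> list[dict]:
    """Join crawl rows with organic sessions; return indexable URLs, fewest sessions first."""
    # Hypothetical analytics column names; adjust to your export.
    sessions: dict[str, int] = {}
    for row in csv.DictReader(io.StringIO(analytics_csv)):
        key = to_path(row["Landing Page"])
        sessions[key] = sessions.get(key, 0) + int(row["Organic Sessions"])

    audit = []
    # Assumed Screaming Frog column names; adjust to your export.
    for row in csv.DictReader(io.StringIO(crawl_csv)):
        if row["Indexability"] != "Indexable":
            continue
        url = row["Address"]
        # URLs with no analytics row count as zero organic sessions.
        audit.append({"url": url, "organic_sessions": sessions.get(to_path(url), 0)})

    audit.sort(key=lambda r: r["organic_sessions"])
    return audit


if __name__ == "__main__":
    crawl = (
        "Address,Indexability\n"
        "https://example.com/,Indexable\n"
        "https://example.com/old-tag-page,Indexable\n"
        "https://example.com/cart,Non-Indexable\n"
    )
    analytics = "Landing Page,Organic Sessions\n/,1200\n/old-tag-page,2\n"
    # The low-traffic /old-tag-page row prints first; /cart is skipped.
    for row in build_audit(crawl, analytics):
        print(row)
```

Matching on path is a deliberate choice: analytics tools often report landing pages as paths, while crawlers report full URLs. If your site serves several hostnames or protocols, you may need a stricter key.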

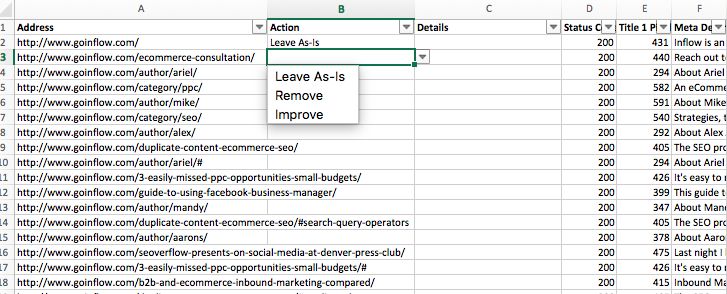

- Create a column in the spreadsheet that accepts only one of these values (data validation in Excel or Google Sheets can enforce this):
  - Leave As-Is
  - Improve
  - Remove
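If the audit spreadsheet is exported to CSV and processed by script, the same three-value rule can be enforced in code. This is only a sketch: the `Action` column name, the `propose_action` and `invalid_actions` helpers, and the default threshold of 0 organic sessions are hypothetical choices, not part of the play. It pre-fills `Remove` for URLs at or below the threshold and leaves every other row blank so a person can choose between Leave As-Is and Improve.

```python
ALLOWED_ACTIONS = {"Leave As-Is", "Improve", "Remove"}


def propose_action(organic_sessions: int, threshold: int = 0) -> str:
    """Suggest 'Remove' for near-zero organic traffic; '' means 'needs human review'."""
    return "Remove" if organic_sessions <= threshold else ""


def invalid_actions(rows: list[dict], column: str = "Action") -> list[dict]:
    """Return rows whose action cell is filled in with anything other than the allowed values."""
    return [r for r in rows if r.get(column) and r[column] not in ALLOWED_ACTIONS]


if __name__ == "__main__":
    rows = [
        {"url": "/old-tag-page", "organic_sessions": 0},
        {"url": "/best-seller", "organic_sessions": 940},
    ]
    for r in rows:
        r["Action"] = propose_action(r["organic_sessions"])
    rows[1]["Action"] = "Keep"  # a typo an analyst might enter by hand
    print(invalid_actions(rows))  # flags only the "Keep" row
```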

At this point, your spreadsheet should look similar to the screenshot above.

- Sort by landing page visits from organic search

- Verify that the data is accurate by spot-checking a sample of URLs in your analytics program (e.g., Google Analytics)

- Mark any URL with extremely few or no visits from organic search within the last year as a candidate for immediate removal from Google’s index

- Provide these instructions to whoever implements the change:
  - The robots meta tag in the HTML head of these pages should read as follows:
    `<meta name="robots" content="noindex, follow">`

- After implementation, check for the following common mistakes:
  - Use the browser’s search function in View Source or Chrome’s Inspect mode and type: `<meta name="robots"`
    - See whether the search returns more than one result on the page
    - Technically speaking (though messy and not recommended), a page can have multiple robots meta tags, but there should be only a single directive on whether to index or noindex, and a single directive on whether to follow or nofollow
  - Although it is unlikely, also check the HTTP response headers for an X-Robots-Tag header that gives conflicting directives

- Once the change is live, add an annotation to your analytics timeline for easy validation later

- After about a month, Google Search Console should show a reduction in indexed pages, and you should also begin to see improvements in other metrics.
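The post-implementation checks can also be scripted. This is a minimal sketch using only Python's standard library, not a complete validator: it collects every `<meta name="robots">` tag on a page plus any X-Robots-Tag header, splits them into directives (expanding the `all` and `none` shorthands), and reports a conflict when both `index` and `noindex`, or both `follow` and `nofollow`, appear. It ignores crawler-specific tags such as `googlebot` and user-agent-scoped header values, and the User-Agent string is a hypothetical placeholder.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen

# Contradictory directive pairs; "all" and "none" are shorthands for both halves.
CONFLICTS = [("index", "noindex"), ("follow", "nofollow")]
SHORTHANDS = {"all": {"index", "follow"}, "none": {"noindex", "nofollow"}}


class RobotsMetaParser(HTMLParser):
    """Collects the content attribute of every <meta name="robots"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.contents: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names, but not attribute values.
        if tag != "meta":
            return
        values = {name: (value or "") for name, value in attrs}
        if values.get("name", "").strip().lower() == "robots":
            self.contents.append(values.get("content", ""))


def robots_meta_contents(html: str) -> list[str]:
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.contents


def directive_set(values: list[str]) -> set[str]:
    """Split comma-separated directives, lowercase them, and expand shorthands."""
    found: set[str] = set()
    for value in values:
        for token in value.split(","):
            token = token.strip().lower()
            if token:
                found |= SHORTHANDS.get(token, {token})
    return found


def find_conflicts(meta_contents: list[str], x_robots_tag: str = "") -> list[tuple[str, str]]:
    """Return directive pairs that contradict each other across meta tags and header."""
    found = directive_set(meta_contents + [x_robots_tag])
    return [pair for pair in CONFLICTS if pair[0] in found and pair[1] in found]


def audit_url(url: str) -> dict:
    """Fetch a live page and summarize its robots signals (requires network access)."""
    request = Request(url, headers={"User-Agent": "cruft-check/0.1"})  # hypothetical UA
    with urlopen(request, timeout=10) as resp:
        header = ", ".join(resp.headers.get_all("X-Robots-Tag") or [])
        charset = resp.headers.get_content_charset() or "utf-8"
        html = resp.read().decode(charset, errors="replace")
    meta = robots_meta_contents(html)
    return {
        "robots_meta_tags": len(meta),  # more than one is messy, even without a conflict
        "directives": sorted(directive_set(meta + [header])),
        "conflicts": find_conflicts(meta, header),
    }
```

For a page where the change went in cleanly, `audit_url` should report a single robots meta tag, directives including `noindex` and `follow`, and an empty conflicts list.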

The Cruft Crusher Resources:

How to Do a Content Audit

Content Audit Toolkit Download

Cut the Cruft, MozTalk Denver

Most Effective Way to Improve Sitewide Rankings

Content Audit Strategies for Common Scenarios

Wow! This was pretty interesting, Mike. Thanks for sharing! I was wondering, how is it different from disavowing links?

This is for internal content, Emmerey, whereas disavowing links applies to external links.

Ah-ha! These are very helpful. Thank you, Mike!

Awesome post! The steps you’ve outlined make this process very actionable.

What’s the best way to get a tag on certain product pages if I’m not able to add via the admin panel in BigCommerce?

Ready to get the pruning underway. 🙂

Hi Ethan,

In a pinch, you could try adding the tag via Google Tag Manager OR you can use a noindex directive in the robots.txt file (different from blocking the page). Here are links to articles about each option: https://moz.com/blog/seo-changes-using-google-tag-manager https://www.deepcrawl.com/blog/best-practice/robots-txt-noindex-the-best-kept-secret-in-seo/

Cheers,

Mike