Do you want to use A/B testing to increase the conversion rate on your eCommerce site?

Before you start running one of those tests, it’s a good idea to make sure that you set it up correctly.

A rigorously run test can increase conversions, but if the parameters are flawed, then the decisions you make based on the test could be too.

In this post, we’ll outline the worst eCommerce A/B testing errors that we see online stores make. Use this as a reference to make sure that any A/B testing you do returns accurate results. That way you can make better data-driven decisions that actually increase conversions.

Note: Want our CRO experts to do A/B testing to increase the conversion rate for your eCommerce business? Learn more about our services and get in touch.

What Are the Worst eCommerce A/B Testing Errors?

We’ve written in the past about the most common reasons A/B tests don’t perform well. Namely:

- They aren’t based on real insights (we wrote about this here).

- The tests aren’t prioritized by potential (here’s how to prioritize your website testing).

- The test ideas are not validated with analytics or other sources (This is a checklist for a quick Google Analytics audit).

Here, we’re outlining the worst technical errors that can invalidate your results. These are:

- Technical issues with the actual test

- Making site changes during the test

- Traffic source changes

- Conflicting tests

- Not accounting for user and site-specific factors

If you are going to put time into doing A/B testing to improve your site, it’s important to take the specific things about your site into account.

These are the 5 eCommerce A/B testing mistakes to avoid (beyond just setting up your analytics goals) that we commonly see while helping our clients with their conversion optimization.

#1. Technical A/B Testing Issues

Here are the things to consider when setting up an A/B test (whether you’re using Optimizely, VWO, or another platform) to make sure it yields valid and actionable results.

Didn’t Exclude Return Visitors

If the same people experience two different versions of your eCommerce website, the test will be invalid. We often see this happen with returning visitors after a site changes its design, layout, or other elements.

A ‘Negative Response’ is a possible outcome when return visitors to the site see the new treatment and are now lost (e.g. a navigation change) or confused (e.g. a page layout test). These users have expectations about the site that the new treatment might not meet, even if it is better.

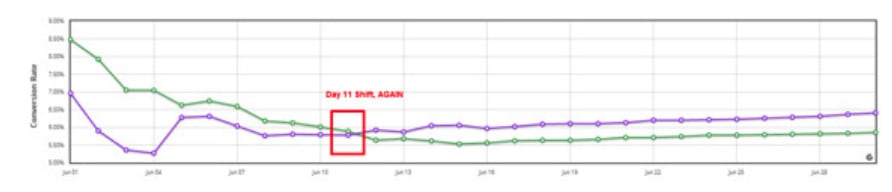

For instance: Below is a familiar pattern we see when the test variation (purple) overtakes the control (green). This often happens when a site’s returning visitors are allowed into the test. These returning visitors prefer the control because it has a “continuity of experience” (it’s the version they’re used to).

This makes the variation appear to perform poorly until the initial group of returning visitors exits the test. In the test below, it took roughly 12 days for this returning visitor bias to abate.

Many tests do not run long enough (see below). If returning visitors are included, the true result (a big win) may never be seen. Even worse, you may end up implementing a losing variant and harming sales.

Note: For businesses with loyal client bases who need to know how a treatment will impact their existing users, you can remove returning visitors from the data after the fact.

Didn’t Run a Test Long Enough

One of the most common ways we see eCommerce businesses run tests improperly is not giving them a chance to run for long enough. The reasons for this vary from not knowing better to succumbing to the pressure to get results quickly.

Whatever the reason, not running a test long enough will rob you of the truth, so you might as well have not run the test in the first place.

We’ll revisit how long to run these tests in a moment.

Run a Test with Enough Participants and Goal Completions

The more variations a test has, the more participants it will need. Your sample size needs to be large enough to demonstrate user behavior.

Consider 100 conversions per variation to be a minimum, and only after the test has run long enough (see above) and other criteria have been met (see below).
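
As a rough illustration of that floor, here is a minimal Python sketch that flags variations still short of 100 conversions. The function name and the sample counts are hypothetical, and passing this check alone does not mean the test is ready to call.

```python
MIN_CONVERSIONS = 100  # the per-variation floor suggested above


def variations_below_minimum(conversions, minimum=MIN_CONVERSIONS):
    """Return the names of variations that have not yet reached the minimum."""
    return sorted(name for name, count in conversions.items() if count < minimum)


# Hypothetical conversion counts per variation.
results = {"control": 132, "treatment_a": 97, "treatment_b": 104}
short = variations_below_minimum(results)
print(short)
```

An empty list only means the conversion floor has been met; the duration and buying cycle criteria still apply.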

Don’t Turn Off Variations While Testing

Turning off a variation can skew results, making them untrustworthy.

For instance: Let’s say you have four total variations (three treatments and the control). Each variation receives 25 percent of traffic. After 10 days, one treatment is turned off and from that point on, each variation receives 33 percent of traffic. Then you again turn off another variation, leaving each remaining variation with 50 percent of the traffic.

The example below shows how when the green variation was turned off, the control improved, just like it did at the start of the test when it benefitted from some people buying right away (the control was for a free shipping offer):

The control (orange) lifts again when the pink variation is turned off, improving for a third time as the mix of new and returning visitors shifts to include more new visitors. The control disproportionately benefits because it does well at converting the first-time buyers who saw the free shipping offer.

This graph would look very different if the control never saw those three bumps in conversion rate. Because the control is the baseline variation, the result of the winner (blue variation) would be more clear and confident, and the test would not have had to run so long.

The above scenario is extremely common and, at a surface level, seems benign. In reality, differences between the variations themselves can mean that changing the weight of the remaining variations corrupts the test.

Often a variation is favored by different types of buyer mindsets, such as a spontaneous shopper vs an analytical one. If one variation is preferred over the other, changing the weight of the remaining variations will cause the variation favored by spontaneous people to suddenly improve. The other variations, favored by more analytical people, will not see as much improvement until their buying cycle has concluded, perhaps days or weeks later.

Since we never know who likes a variation for what reason (Was it the hero image? The testimonials? Product page? Overall user experience?) the safest thing to do is not to eliminate any variations.

Custom Code vs. Test Design Editors

After trying to set up a few tests via any test design editor, you may find that the test treatments do not render or behave as you expected across all browser/device combinations.

While design editors hold great promise for “anyone” to be able to set up a test, the reality is there is only a narrow range of test types (e.g. text-only changes) that can be done through a test design editor alone. Custom code is required for a test to work well and consistently across browser types and versions.

The best practice is to write custom code because most modern websites have dynamic elements that visual editors can’t identify properly. Here are some specific cases that visual editors can’t detect:

- Web page elements that are inserted or modified after the page has been loaded, such as some shopping cart buttons, button color, Facebook like buttons, Facebook fan boxes and security seals like McAfee.

- Page elements that change with user interaction, such as shopping cart row changes when users add or remove elements, reviews, carousels, and page comments.

- Responsive websites that have duplicated elements, such as sites with multiple headers (desktop header is hidden for mobile devices and mobile header is hidden for desktop computers).

In the beginning, you may be able to avoid running complex tests that require custom code. Eventually, you will graduate to a level of testing that demands it. Know that this requires a front-end developer to set up the tests that you will want to run.

Run the Test at 100% Traffic

Today, many purchases online involve more than one device or one browser (e.g. researching on a smartphone, then purchasing on a laptop).

However, test tools are limited to tracking a user on a single browser/device combination. This means that someone who sees one variation of a test on mobile may come back to purchase on desktop and be provided another variation.

Showing one variation more often than another will give it the advantage since it is more likely to be seen with continuity by users who switch devices or browsers. This is called “continuity bias.”

To avoid continuity bias during the testing process, we recommend you run tests at 100 percent of traffic and split that traffic evenly between variations.

When less than 100 percent of traffic is sampled for a test, the result (in today’s cross-device world) is that the control will be served more often than the variations, thus giving it the advantage.

For instance: A user visits your site from work and is not included in a test because you are only allowing 50 percent of people to participate. Then, that same user goes home later in the buying cycle and gets included in the test on their home computer. The user is then more likely to favor the control due to continuity bias.
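
To put rough numbers on this, here is a minimal Python sketch. It assumes that each device is assigned independently, that visitors left out of the test see the default site (which looks the same as the control), and that traffic inside the test is split evenly. The function name is hypothetical.

```python
def experience_shares(traffic_in_test, num_variations):
    """Share of device visits that render each experience.

    traffic_in_test: fraction of traffic allowed into the test (0 to 1).
    num_variations: number of variations, control included, evenly weighted.
    Visitors left out of the test are assumed to see the control experience.
    """
    per_variation = traffic_in_test / num_variations
    control_like = (1 - traffic_in_test) + per_variation
    return {"control_like": control_like, "each_treatment": per_variation}


# With independent assignment per device, the chance that a second device
# shows the same experience as the first equals that experience's share.
half_traffic = experience_shares(0.5, 2)
full_traffic = experience_shares(1.0, 2)
print(half_traffic)
print(full_traffic)
```

Under these assumptions, a two-variation test at 50 percent of traffic shows the control experience on 75 percent of device visits and the treatment on 25 percent, so the control is three times as likely to be seen with continuity. At 100 percent of traffic the shares are equal.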

Side note: This may be a good time to look at your past results of tests that were run with less than 100 percent of user participation and see if the Control won more than its fair (or expected) share of tests.

Equal Weighting of Variations

For the same reasons as mentioned above with running a test at 100 percent, you also want to ensure that all variations are equally weighted (e.g. testing four variations including the control should see 25 percent of traffic go to each). If the weights are not equal, there is a bias, as outlined above.

Test Targeting

Test results are easily diluted (test will have to run longer) or contaminated by not targeting the test to the right audience. The most common issues of inappropriate test targeting are:

- Geo (e.g. including international visitors in a test that is USA specific)

- Device (e.g. including tablets in a mobile phone test)

- Cross-Category Creep (e.g. a test for Flip Flops spreads into all Sandal pages)

- Acquisition vs. Retention (e.g. including repeat customers in a test for first-time customers)

If the right audience and pages are not targeted, then it will take much longer to see any significant results with confidence due to the noise of users who don’t care either way. That test result will indicate that the change is not significant, leaving you to stop the test and not gain the additional sales.
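
The four targeting dimensions above can be sketched as a single eligibility check. This is a hypothetical Python example: the `Visitor` fields and the specific target values are assumptions made for illustration, and real testing tools expose targeting through their own audience settings.

```python
from dataclasses import dataclass


@dataclass
class Visitor:
    # Hypothetical fields; a real tool derives these from its own data.
    country: str
    device: str
    category: str
    is_repeat_customer: bool


def is_eligible(visitor):
    """Admit only visitors who match every targeting rule for this test."""
    return (
        visitor.country == "US"               # geo: USA-specific test
        and visitor.device == "mobile"        # device: phones only, no tablets
        and visitor.category == "flip-flops"  # category: no creep into sandals
        and not visitor.is_repeat_customer    # acquisition test: new customers
    )


in_target = is_eligible(Visitor("US", "mobile", "flip-flops", False))
on_tablet = is_eligible(Visitor("US", "tablet", "flip-flops", False))
print(in_target, on_tablet)
```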

These are the most common technical reasons we see invalid tests. There are some other good habits we recommend though.

#2. NO eCommerce Site Changes During Testing

As a general rule: Avoid making other site changes during the test period.

Otherwise, you will see a statistical result from the test without knowing what to attribute it to.

We often see eCommerce stores that launch a website redesign while they are in an active test period which causes issues.

For instance: If you are testing a trust element like McAfee’s trust seal, avoid changes that may impact the trust of the site, including:

- Site style changes

- Other trust seals

- Header elements (like contact or shipping information)

- Or any other site-wide “assurance” elements (e.g. chat)

The same line of thinking applies to other changes. If you are testing a pop-up window, don’t make a change to the site style, other opt-in boxes, etc.

When split testing, it’s usually best to test one element at a time. Making site changes during a test makes that ideal a lot less feasible.

#3. Traffic Sources Change and Muddy Conversion Data

In our experience, the different sources of traffic to your website will behave differently.

When there is a change in the distribution of traffic sources (e.g. paid search increases), test results will be unreliable until the test participants brought in have had a chance to go through their entire buying cycle.

For instance: Paid search visitors may be less trusting and less sophisticated when it comes to the web. This traffic source often responds well to trust factors like trust seals.

Increasing paid traffic during the test may result in a sharp increase in conversions. But that increase is not sustained as the test continues for a longer period and the non-spontaneous visitors are factored into the results.

If one version performs better for one traffic source but another traffic source starts getting mixed into the test, the response you see reflects the mixed traffic rather than the change itself. This can make it hard to attribute the conversion increase you see to one source of traffic or the other.

#4. Conflicting A/B Tests Spoil Attribution

It’s easy to run more than one test at a time. However, tests may conflict in how they impact users. It is common to see the results of one test change when another test is started or stopped. This is typically the result of the tests sharing the same purchase funnel, or impacting the same concept.

Avoid running conflicting tests. If you are running more than one test, do a bit of analytics work to see how many people will be affected by both tests (i.e. users common to the two pages involved in the separate tests).

If it’s more than 10 percent, then you will want to strongly consider how the two tests impact the user’s single experience. By using common sense and good judgment, you and your team will be able to estimate which tests can be run at the same time.
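
As a rough illustration of that overlap check, here is a minimal Python sketch. It assumes you can export the user IDs exposed to each test from your analytics, and it measures overlap against the smaller audience, which is a conservative choice of ours rather than a fixed rule. The function name and IDs are hypothetical.

```python
OVERLAP_THRESHOLD = 0.10  # the 10 percent guideline from above


def overlap_share(test_a_users, test_b_users):
    """Fraction of the smaller test audience that is also in the other test."""
    a, b = set(test_a_users), set(test_b_users)
    if not a or not b:
        return 0.0
    return len(a & b) / min(len(a), len(b))


# Hypothetical user IDs seen on the pages involved in each test.
test_a = range(1, 11)   # users 1 through 10
test_b = range(8, 28)   # users 8 through 27
share = overlap_share(test_a, test_b)
needs_review = share > OVERLAP_THRESHOLD
print(share, needs_review)
```

Here three of the ten users in the smaller test also see the other test, so the overlap is 30 percent and the pair deserves a closer look.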

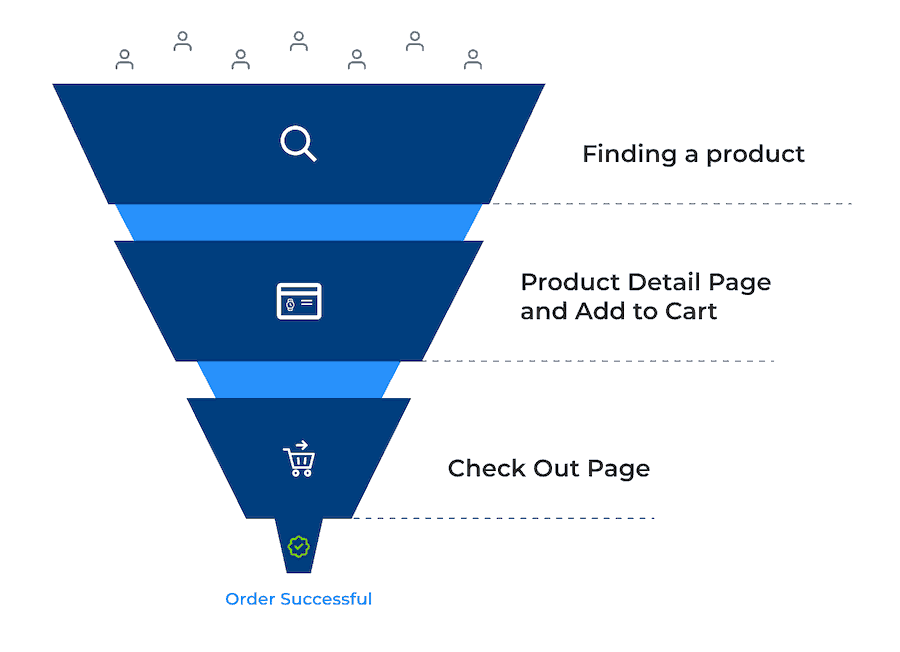

Here, we typically recommend breaking eCommerce sites into 3 “funnels” or sections:

- Top-of-funnel is finding a product

- Mid-funnel is when the user is on the ‘Product Detail Page’ and ‘Adds to Cart’

- Bottom-of-funnel is Cart through a checkout page conversion

You shouldn’t be running more than one test at a time in any one of these funnels, especially when there is another test running in one of the other funnels. KPIs should reflect the goal of each “funnel.”

#5. Not Taking User and Site Specifics into Account When A/B Testing

Every eCommerce site is unique. So when you do A/B testing for eCommerce, take those particular factors into account.

Clients who work with us get individualized recommendations for their websites. Here are some important general guidelines:

1. Segment Traffic

When judging if you have enough goal completions, don’t forget to consider segmentation on a user persona level.

For example, an eCommerce store selling school supplies will have a big split between classroom teachers and parents who shop on the site. If the treatment only targets one group, or if it might impact each group differently, it’s important to take that into account.

2. Test Against the Buying Cycle

When looking at test results, a test must be run and analyzed against its buying cycle. This means testing a person from their very first visit and all subsequent visits until they purchase.

If you know that 95 percent of purchases happen within three days of the user’s visit, then you have a three-day buying cycle.

Your test cycle will be the number of weeks you test (you want to test in full-week periods) plus the full length of your buying cycle added on so the last participant let into the test has an adequate chance to complete their purchase.
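
As a quick worked example of that arithmetic, here is a minimal Python sketch. The function name and the two-week example are illustrative assumptions.

```python
def total_test_days(full_weeks, buying_cycle_days):
    """Days from launch until the last participant has had a full buying cycle."""
    if full_weeks < 1:
        raise ValueError("test in full-week periods: at least one week")
    return full_weeks * 7 + buying_cycle_days


# Two full weeks of letting visitors in, plus a three-day buying cycle.
days = total_test_days(full_weeks=2, buying_cycle_days=3)
print(days)  # 17
```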

3. Count Every Conversion (or at Least Most of Them)

If a participant has entered a test, their actions should be counted. This may sound obvious, but correct attribution is seldom done well, and this results in inaccurate testing.

In order to do this right, it’s important that all visitors are given the chance to purchase after entering a test. If a test is just “turned off,” participants in that test who have yet to purchase have been left out.

Since it is common to see one particular variation do well with returning visitors, leaving out these later conversions will skew the test toward the variations that favor less methodical shoppers.

4. Determine Your Site’s Test Cycle (How Long to Run a Test)

You likely have been involved in discussions about how long a test should run. The biggest factor in how long to run a test is your site’s test cycle.

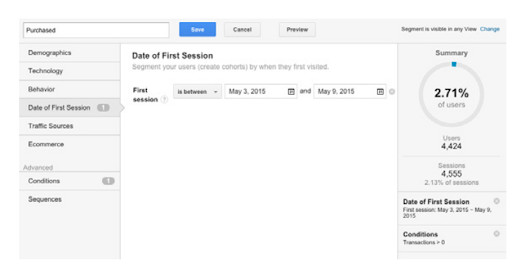

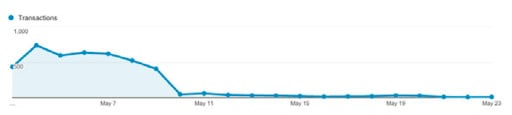

To find your site’s test cycle in Google Analytics, simply start with a segment like the one below where you define that you want to view only users who had their first session during a one-week period. Then set a condition where transactions are greater than zero.

This type of segment will tell you when people whose first visit was that week eventually purchased on your site.

You can start by looking at a range such as two months, then work backwards to find the point by which 95 percent of the purchases in those two months had occurred. In the example below, the site has a three-week test cycle because 95 percent of purchases for the two months occurred in the first three weeks from the beginning of the period you started tracking purchases:

You may be wondering, “Why 95 percent?” This is a simple rule of thumb. From experience, we have rarely seen the final 5 percent of purchases change a test’s conclusions. However, we have seen the last 10 percent do so.
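
If you can export the delay between first visit and purchase for each order, the 95 percent cutoff can be computed directly. Here is a minimal Python sketch under that assumption; the function name and the sample data are hypothetical, and the delays are in days rather than weeks.

```python
def cycle_length(days_to_purchase, coverage_percent=95):
    """Smallest delay within which `coverage_percent` of purchases occurred."""
    if not days_to_purchase:
        raise ValueError("need at least one purchase")
    ordered = sorted(days_to_purchase)
    # Number of purchases needed to reach the coverage, rounded up.
    needed = (coverage_percent * len(ordered) + 99) // 100
    return ordered[needed - 1]


# Hypothetical delays (in days) for 20 purchases: most are fast, one is late.
delays = [0] * 10 + [1] * 5 + [2] * 3 + [3] + [30]
cycle = cycle_length(delays)
print(cycle)  # 3
```

In this made-up data, 19 of the 20 purchases (95 percent) happen within three days, so the single purchase at 30 days does not stretch the cycle.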

5. Use 7-Day Cycles

When testing, you most likely have to test against a full week cycle. This is because people often behave differently during different days of the week.

For instance: If your site sells toys for small children, your site’s reality might be that a lot of research traffic occurs on the weekend when the children are available for questioning (e.g. “Hey Ty, what’s the coolest toy in the world these days?”).

Another reality for a toy site might be that often the “Add to Cart” button does not need to get hit until Tuesday evening, given that a lot of toys are not needed until the weekend when birthday parties are typically held. A test run from Wednesday through Sunday (five full days with lots of data) is still not enough.

The reality is that almost every eCommerce site (based on the more than 100 eCommerce analytics accounts we’ve done test analysis on) has a seven-day cycle. You may have to figure out which days to start and stop, but it’s there because of how user behavior varies throughout the week.

Therefore, if you don’t use a seven-day cycle in your testing, your results are going to be weighted higher for one part of the week than another.

6. Use the “Test Window”

The Test Window is essentially the set of steps we recommend to avoid skewing a test.

Step 1: Only let new visitors into the test. This way returning visitors later in their purchase cycle will not skew results and potentially set the test off to a false start. Get as many people into the test as possible.

Step 2: Don’t look at the test for a full seven days. If you don’t have a statistical winner at this point (most test tools will tell you the test has reached 95 percent confidence), let the test run for another seven-day cycle and don’t peek.

Step 3: Turn off the test to new visitors once you have a statistical winner (at seven-day intervals). Turning off the test to new visitors will allow the participants already in the test to complete their buying cycle. Leave the test running for a full buying cycle after you’ve closed the window.

Step 4: Report out on the test. To report out on the test’s overall results, you will simply look at your A/B testing tool’s report. Now, because you used the Test Window, you will be able to believe the results because:

- Everyone in the test had a consistent site experience, spending it in the same test variation (no one seeing the control on a previous visit only to later experience a treatment).

- A full seven-day cycle was used so weekend days and weekdays were weighted realistically.

- Every user (or 95 percent of them at least) was allowed to complete their buying cycle.
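
The check in Steps 2 and 3 can be sketched in Python as follows. This is an illustration only: it uses a pooled two-proportion z-test as a stand-in for whatever statistics your testing tool applies, and the function names and visitor counts are hypothetical.

```python
import math


def confidence_of_difference(conv_a, n_a, conv_b, n_b):
    """Two-sided confidence that two conversion rates differ (pooled z-test)."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0
    z = abs(conv_a / n_a - conv_b / n_b) / se
    # For a standard normal Z, P(|Z| <= z) equals erf(z / sqrt(2)).
    return math.erf(z / math.sqrt(2))


def can_close_window(days_running, confidence, threshold=0.95):
    """Only evaluate at the end of a full seven-day cycle, never in between."""
    at_checkpoint = days_running >= 7 and days_running % 7 == 0
    return at_checkpoint and confidence >= threshold


# Hypothetical counts: control 200 of 10,000 vs variation 260 of 10,000.
conf = confidence_of_difference(200, 10_000, 260, 10_000)
print(can_close_window(10, conf))  # mid-cycle, so keep waiting
print(can_close_window(14, conf))  # end of the second full week
```

Once the window is closed to new visitors, the test still runs for a full buying cycle before the final report.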

Conclusion

If you are going to put time into testing to improve your eCommerce site, everything that you do will be invalidated if you aren’t paying attention to these vital A/B testing factors.

In our effort to be the best eCommerce agency, we study and rank the best eCommerce sites’ conversion rate optimization strategies in our Best in Class eCommerce CRO Report. We use our findings and apply them directly to our clients’ sites so that their stores are as optimized as the best of the best (and we have case studies to demonstrate).

That said, every significant site change needs to be tested. And for that, the step-by-step guidelines we’ve shown you here will help ensure you get accurate results.

We know that conversion testing is time-consuming and often overwhelming. If you would like to increase your eCommerce site’s conversion rate, our CRO experts can help with setup and make A/B testing recommendations. Learn more here and get in touch.
