Editor’s Note: This post was originally published in November of 2013. While a lot of the original content still stands, algorithms and strategies are always changing. Our team has updated this post for 2021, and we hope that it will continue to be a helpful resource.

For eCommerce SEO professionals, issues surrounding duplicate, thin, and low-quality content can spell disaster in the search engine rankings.

Google, Bing, and other search engines are smarter than they used to be. Today, they only reward websites with quality, unique content. If your website content is anything but, you’ll be fighting an uphill battle until you can solve these duplicate content issues.

We know how overwhelming this project can be, which is why we’ve created this comprehensive guide to identifying and resolving common eCommerce duplicate content challenges. Take a read below to get started, or reach out to our trained eCommerce SEO experts for an extra helping hand.


What is Duplicate Content?

Thin, scraped, and duplicate content has been a priority of Google’s for more than a decade, ever since the first Panda algorithm update in 2011. According to its Content Guidelines, Google defines duplicate content as:

Substantive blocks of content within or across domains that either completely match other content or are appreciably similar. Mostly, this is not deceptive in origin.

Examples of non-malicious duplicate content could include:

- Discussion forums that can generate both regular and stripped-down pages targeted at mobile devices

- Store items shown or linked via multiple distinct URLs

- Printer-only versions of web pages


In short, duplicate content is the exact same or extremely similar website copy found on multiple pages anywhere on the internet. If you have content on your site that can be found word-for-word somewhere else on your site, then you’re dealing with a duplicate content issue.

In our time auditing and improving dozens of eCommerce websites, we’ve found that duplicate content issues typically arise from large numbers of pages with mostly identical content, or from product pages whose descriptions are so short that the content can be deemed thin and, thus, not valuable to Google or the reader (more details on thin content later on).

Why You Should Care:

According to Google itself, duplicate content with manipulative or deceptive intent could result in your site being removed from the search results.

However, other types of non-malicious duplicate content can still hurt your organic traffic if Google doesn’t know which page to rank in the SERPs. This is what’s typically referred to as the Google duplicate content “penalty.”

Types of Duplicate Content in eCommerce

Not all duplicate content is editorially created. Several technical situations can lead to these content issues and Google penalties.

We’ll dive into a few of these situations below:

Internal Technical Duplicate Content

Duplicate content can exist internally on an eCommerce site in a number of ways, due to technical and editorial causes.

The following instances are typically caused by technical issues with a content management system (CMS) and other code-related aspects of eCommerce websites.

- Non-Canonical URLs

- Session IDs

- Shopping Cart Pages

- Internal Search Results

- Duplicate URL Paths

- Product Review Pages

- WWW vs. Non-WWW URLs & Uppercase vs. Lowercase URLs

- Trailing Slashes on URLs

1. Non-Canonical URLs

Canonical URLs play a few key roles in your SEO strategy:

- Tell search engines to only index a single version of a URL, no matter what other URL versions are rendered in the browser, linked to from external websites, etc.

- Consolidate tracking URLs, where tracking code (affiliate tracking, social media source tracking, etc.) is appended to the end of a URL on the site (?a_aid=, ?utm_source=, etc.).

- Fine-tune indexation of category page URLs in instances where sorting, functional, and filtering parameters are added to the end of the base category URLs to produce a different ordering of products on a category page (?dir=asc, ?price=10, etc.).

To prevent search engines from indexing all of these various URLs, ensure that each variant’s canonical URL is the base URL (for category pages, the base category URL). The canonical tag (e.g., <link rel="canonical" href="URLGoesHere" />) goes in the <head> of the source code.

| Page/URL Type | Visible URL | Canonical URL |
| --- | --- | --- |
| Base Category URL | https://www.domain.com/page-slug | https://www.domain.com/page-slug |
| Social Tracking URL | https://www.domain.com/page-slug?utm_source=twitter | https://www.domain.com/page-slug |
| Sorted Category URL | https://www.domain.com/page-slug?dir=asc&order=price | https://www.domain.com/page-slug |
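The mapping in the table above can be sketched in Python. This is a minimal sketch, assuming a hypothetical list of tracking, sorting, filtering, and session parameters (`NON_CANONICAL_PARAMS`); swap in the parameters your own CMS and marketing tools actually append. It strips those parameters and builds the canonical `<link>` element for the page’s `<head>`.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list: parameters that only track, sort, filter, or carry a session.
# Replace it with the parameters your own CMS and marketing tools append.
NON_CANONICAL_PARAMS = {"a_aid", "dir", "order", "price", "sid"}


def canonical_url(visible_url: str) -> str:
    """Strip non-canonical parameters (and any utm_* tag) from a URL."""
    parts = urlsplit(visible_url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in NON_CANONICAL_PARAMS and not key.startswith("utm_")
    ]
    # The fragment is dropped as well; it never identifies a separate page.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_tag(visible_url: str) -> str:
    """Build the canonical <link> element that goes in the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(visible_url))}" />'


for url in (
    "https://www.domain.com/page-slug",
    "https://www.domain.com/page-slug?utm_source=twitter",
    "https://www.domain.com/page-slug?dir=asc&order=price",
):
    print(canonical_tag(url))
```

All three rows print the same `<link rel="canonical" href="https://www.domain.com/page-slug" />`. Parameters that are not on the list, such as a pagination parameter, are kept in the canonical URL.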

You should also consider disallowing the crawling of commonly used URL parameters, such as “?dir=” and “&order=”, to maximize your crawl budget.

2. Session IDs

Many eCommerce websites use session IDs in URLs (?sid=) to track user behavior. Unfortunately, this creates a duplicate of the core URL of whatever page the session ID is applied to.

The best fix: Use cookies to track user sessions, instead of appending session ID code to URLs.

If session IDs must be appended to URLs, there are a few other options:

- Canonicalize the session ID URLs to the page’s core URL.

- Set URLs with session IDs to noindex. This will limit your page-level link equity potential in the event that someone links to a URL that includes the session ID.

- Disallow crawling of session ID URLs via the “/robots.txt” file, as long as the CMS system does not produce session IDs for search bots (which could cause major crawlability issues).
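Moving the session ID out of the URL and into a cookie can be sketched with Python’s standard library. This is an illustration, not a drop-in for any particular CMS; the cookie name `sid` and the `resolve_session` helper are hypothetical.

```python
import secrets
from http.cookies import SimpleCookie
from typing import List, Optional, Tuple

SESSION_COOKIE = "sid"  # hypothetical cookie name


def resolve_session(cookie_header: Optional[str]) -> Tuple[str, List[Tuple[str, str]]]:
    """Return (session_id, headers_to_add) for an incoming request.

    The session ID travels in a cookie, so page URLs never gain a ?sid= parameter.
    """
    cookies = SimpleCookie(cookie_header or "")
    if SESSION_COOKIE in cookies:
        # Returning visitor: reuse the existing session; no new header is needed.
        return cookies[SESSION_COOKIE].value, []

    # New visitor: mint a random ID and hand it back as a cookie.
    session_id = secrets.token_urlsafe(16)
    cookie = SimpleCookie()
    cookie[SESSION_COOKIE] = session_id
    cookie[SESSION_COOKIE]["path"] = "/"
    cookie[SESSION_COOKIE]["httponly"] = True
    cookie[SESSION_COOKIE]["samesite"] = "Lax"
    return session_id, [("Set-Cookie", cookie[SESSION_COOKIE].OutputString())]


new_id, headers = resolve_session(None)          # first visit: Set-Cookie header
same_id, no_headers = resolve_session(f"sid={new_id}")  # later visit: reused
print(headers)
```

Because the URL itself never changes, every visitor (and every search bot) sees the same core URL for each page.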

3. Shopping Cart Pages

When users add products to and subsequently view their cart on your eCommerce website, most CMS systems automatically implement URL structures that are specific to that experience — through unique identifiers like “cart,” “basket,” or other words.

These are not the types of pages that should be indexed by search engines. To fix this duplicate content issue, set them to “noindex,nofollow” via a meta robots tag or an X-Robots-Tag HTTP header. Don’t forget to also disallow crawling of them via the “/robots.txt” file.
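A sketch of a path-based noindex rule for cart pages, assuming your CMS puts the cart identifier in the first path segment and lets you add response headers per request. The segment names `cart`, `basket`, and `checkout` are hypothetical examples.

```python
from typing import Dict
from urllib.parse import urlsplit

# Hypothetical first path segments used for cart pages; match them to your CMS.
CART_SEGMENTS = {"cart", "basket", "checkout"}

# The equivalent meta robots tag, if you prefer to set it in the page <head>.
META_NOINDEX_NOFOLLOW = '<meta name="robots" content="noindex, nofollow" />'


def robots_headers(url: str) -> Dict[str, str]:
    """Return extra response headers: noindex, nofollow for cart-type pages."""
    # Compare whole segments so that, e.g., /cartography-guide is not caught.
    segments = [s for s in urlsplit(url).path.lower().split("/") if s]
    if segments and segments[0] in CART_SEGMENTS:
        return {"X-Robots-Tag": "noindex, nofollow"}
    return {}


print(robots_headers("https://www.domain.com/cart/"))
print(robots_headers("https://www.domain.com/tools/flashlights/"))
```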

4. Internal Search Results

Internal search result pages are produced when someone conducts a search using an eCommerce website’s internal search feature. These pages have no unique content, only repurposed snippets of content from other pages on your site.

For several reasons, Google doesn’t want to send users from the SERPs to your internal search results. Its algorithm prioritizes true content pages (like product pages, category pages, blog pages, etc.).

Unfortunately, this is a common eCommerce issue. Many CMS systems do not set internal search result pages to “noindex, follow” by default, so your developer will need to apply this rule to fix the problem. We also recommend disallowing search bots from crawling internal search result pages within the /robots.txt file.

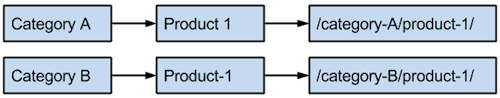

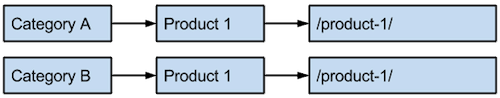

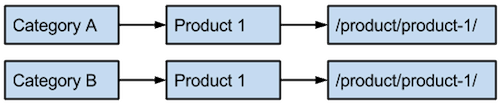

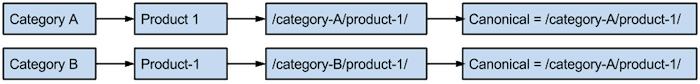
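To confirm that your robots.txt rules block internal search results without blocking real content, you can test them with Python’s standard-library `urllib.robotparser`. This is a sketch, assuming your CMS serves internal search results under `/search` and the cart under `/cart`; both paths are hypothetical. Note that `urllib.robotparser` only does plain prefix matching and does not implement Google’s `*` and `$` wildcard extensions, so keep the rules you test this way to simple path prefixes.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: replace /search and /cart with the paths your CMS uses.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())
parser.modified()  # mark the rules as freshly loaded so can_fetch() applies them

for url in (
    "https://www.domain.com/search?q=flashlight",
    "https://www.domain.com/tools/flashlights/",
):
    verdict = "crawlable" if parser.can_fetch("Googlebot", url) else "blocked"
    print(f"{verdict}: {url}")
```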

5. Duplicate URL Paths

When products are placed in multiple categories on your site, it can get tricky. For example, if a product is placed in both category A and category B, and if category directories are used within the URL structure of product pages, then a CMS could potentially create two different URLs for the same product.

This situation can also arise with sub-category URLs in which the products displayed might be similar or exactly the same. For example, a “Flashlights” sub-category might be placed under both “/tools/flashlights/” and “/emergency/flashlights/” on an “Emergency Preparedness” website, even though they have mostly the same products.

As one can imagine, this can lead to devastating duplicate content problems for product pages, which are typically the highest converting pages on an eCommerce website.

You can fix this issue by:

- Using root-level product page URLs. Note: This does remove keyword-rich, category-level URL structure benefits and also limits trackability in Analytics software.

- Using /product/ URL directories for all products, which allows for grouped trackability of all products.

- Using product URLs built upon category URL structures. Make sure that each product page URL has a single, designated canonical URL.

- Adding robust intro descriptions atop category pages, so that each page has unique content.
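The single-canonical rule can be sketched as follows, assuming a hypothetical product record that stores every category path plus one merchandiser-chosen `primary_category`. It shows two of the options above: a canonical URL under the primary category, or a /product/ directory when no primary category is set (or when you opt into that structure).

```python
from dataclasses import dataclass, field
from typing import List

BASE = "https://www.domain.com"  # placeholder domain used throughout this guide


@dataclass
class Product:
    slug: str
    # Every category path the product is merchandised under.
    category_paths: List[str] = field(default_factory=list)
    # Hypothetical field: the one category chosen to own the canonical URL.
    primary_category: str = ""


def rendered_urls(product: Product) -> List[str]:
    """URLs a category-based CMS might render for the same product."""
    return [f"{BASE}/{path}/{product.slug}" for path in product.category_paths]


def canonical_product_url(product: Product, use_product_dir: bool = False) -> str:
    """Pick exactly one canonical URL for the product."""
    if use_product_dir or not product.primary_category:
        return f"{BASE}/product/{product.slug}"
    return f"{BASE}/{product.primary_category}/{product.slug}"


light = Product(
    "maglite-led-xl200",
    ["tools/flashlights", "emergency/flashlights"],
    primary_category="tools/flashlights",
)
for url in rendered_urls(light):
    print(url, "-> canonical:", canonical_product_url(light))
```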

6. Product Review Pages

Many CMS systems come with built-in review functionality. Oftentimes, separate “review pages” are created to host all reviews for particular products, yet some (if not all) of the reviews are placed on the product pages themselves.

To avoid this duplicate content, these “review pages” should either be canonicalized to the main product page or set to “noindex,follow” via meta robots or X-robots tag. We recommend the first method, just in case a link to a “review page” occurs on an external website. That will ensure link equity is passed to the proper product page.

You should also check that review content is not duplicated on external sites if using third-party product review vendors.

7. WWW vs. Non-WWW URLs & Uppercase vs. Lowercase URLs

Search engines consider https://www.domain.com and https://domain.com different web addresses. Therefore, it’s critical that one version of URLs is chosen for every page on your eCommerce website.

We recommend 301-redirecting the non-preferred version to the preferred version to avoid these technically created duplicate URLs. Internal links that are manually added throughout your content should also point to the preferred version.

Uppercase and lowercase URLs need to be handled in the same manner. If both render separately, then search engines can consider them different pages, so 301-redirect uppercase URLs to their lowercase versions.
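The 301 logic for host and case can be sketched as a function that returns the redirect target. This assumes the HTTPS www version is preferred and that no page depends on uppercase letters in its path; forcing HTTPS is an extra assumption on top of the www and case rules. `PREFERRED_HOST` and `SITE_HOSTS` are placeholders built from this guide’s example domain.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

# Assumptions: HTTPS + www is the preferred version, and paths are case-insensitive.
PREFERRED_HOST = "www.domain.com"
SITE_HOSTS = {"domain.com", "www.domain.com"}


def redirect_target(url: str) -> Optional[str]:
    """Return where a 301 should point, or None if url is already preferred."""
    parts = urlsplit(url)
    if (parts.hostname or "") not in SITE_HOSTS:
        return None  # not one of our hosts; leave it alone
    preferred = urlunsplit(
        ("https", PREFERRED_HOST, parts.path.lower(), parts.query, parts.fragment)
    )
    return None if preferred == url else preferred


print(redirect_target("https://domain.com/Page-Slug"))
print(redirect_target("https://www.domain.com/page-slug"))
```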

8. Trailing Slashes on URLs

Similar to www and non-www URLs, search engines consider URLs that render with and without a trailing slash (“/page/” vs. “/page”) to be unique. Technically speaking, these two URLs aren’t even in the same directory. Duplicate URLs are also created in situations where “/page/” and “/page/index.html” (or “/page” and “/page.html”) render the same content.

Fix this problem by canonicalizing both to a single version or 301-redirecting one version to the other.
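A minimal sketch of the redirect option, assuming the site standardizes on the trailing-slash form (“/page/”) and that `index.html`, `index.htm`, and `index.php` are the only index files in play (both assumptions). Reverse the rule if you prefer “/page”.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

# Assumption: the site standardizes on the trailing-slash form ("/page/").
INDEX_FILES = ("index.html", "index.htm", "index.php")


def preferred_path(path: str) -> str:
    """Collapse /page, /page.html and /page/index.html into /page/."""
    for index_file in INDEX_FILES:
        if path.endswith("/" + index_file):
            return path[: -len(index_file)]  # "/page/index.html" -> "/page/"
    if path.endswith(".html"):
        path = path[: -len(".html")]  # "/page.html" -> "/page"
    last_segment = path.rsplit("/", 1)[-1]
    if path.endswith("/") or "." in last_segment:
        return path  # already a directory, or a real file such as /logo.png
    return path + "/"  # "/page" -> "/page/"


def slash_redirect(url: str) -> Optional[str]:
    """Return the 301 target for url, or None if its path is already preferred."""
    parts = urlsplit(url)
    current = parts.path or "/"
    target = preferred_path(current)
    if target == current:
        return None
    return urlunsplit((parts.scheme, parts.netloc, target, parts.query, parts.fragment))


for path in ("/page", "/page.html", "/page/index.html", "/page/"):
    print(path, "->", preferred_path(path))
```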

Internal Editorial Duplicate Content

Not all issues of duplicate content on a website are technical. Often, duplicate content occurs because the on-page content for different pages is either similar or duplicated.

The easiest fix for these issues? Write unique eCommerce copy for each individual page.

We’ll dive more into these different situations below.

1. Similar Product Descriptions

It’s easy to take shortcuts with product descriptions, especially with similar products. However, remember that Google judges the content of eCommerce websites much as it judges regular content sites, and taking shortcuts could lead to duplicate content product pages.

Product page descriptions should be unique, compelling, and robust — especially for mid-tier eCommerce websites looking to scale content production because they don’t have enough domain authority to compete.

Sharing copied content — short paragraphs, specifications, and other content — between pages increases the likelihood that search engines will devalue a product page’s content quality and, subsequently, decrease its ranking position.
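One way to spot product pages that share too much copy is to compare their descriptions as overlapping three-word “shingles.” This is a generic near-duplicate technique sketched in Python, not how Google measures duplication. The cake topper descriptions are made-up examples, and any cut-off you flag pages at is your own choice, since search engines don’t publish one.

```python
import re
from typing import Set, Tuple


def shingles(text: str, size: int = 3) -> Set[Tuple[str, ...]]:
    """Break text into overlapping runs of `size` lowercase words."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i : i + size]) for i in range(len(words) - size + 1)}


def similarity(a: str, b: str) -> float:
    """Jaccard similarity of the two texts' shingle sets (0.0 to 1.0)."""
    sa, sb = shingles(a), shingles(b)
    if not sa and not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


rose = "Rose gold cake topper for birthday parties and weddings"
glitter = "Glitter cake topper for birthday parties and weddings"
print(f"{similarity(rose, glitter):.3f}")  # 5 shared of 8 distinct shingles -> 0.625
```

Running this across every pair of descriptions in a category surfaces the pages most in need of rewriting first.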

Google recently reiterated that there is no duplicate content penalty for copy-and-pasted manufacturer product descriptions.

However, we still believe unique, attractive product descriptions are an important part of your eCommerce SEO strategy. In our opinion, manufacturer product descriptions likely won’t provide the same ability to rank as a unique, detailed product description.

2. Category Pages

Category pages typically include a title and product grid, which means there is often no unique content on these pages. While this isn’t technically a duplicate content issue, it could be beneficial to add unique descriptions at the top of category pages (not the bottom, where content is given less weight by search engines) describing which types of products are featured within the category.

There’s no magic number of words or characters to use. However, the more robust the category page content is, the better its chance of maximizing traffic from organic search results.

Keep your visitors’ screen resolutions in mind: any intro copy should not push the product grid below the browser fold.

3. Homepage

Home pages typically have the most incoming link equity and thus serve as highly rankable pages in search engines.

However, a homepage should also be treated like any other page on an eCommerce website content-wise. Always ensure that unique content fills the majority of home page body content; a homepage consisting merely of duplicated product blurbs offers little contextual value for search engines.

Avoid using your homepage’s descriptive content in directory submissions and other business listings on external websites. If this has already been done to a large extent, rewriting the home page descriptive content is the easiest way to fix the issue.

Off-Site Duplicate Content

Similar content that exists between separate eCommerce websites is a real pain point for digital marketers.

Let’s look at some of the most common forms of external (off-site) duplicate content that prevent websites from ranking as well as they could in organic search.

- Manufacturer Product Descriptions

- Duplicate Content on Staging, Development, or Sandbox Websites

- Third-Party Product Feeds (Amazon & Google)

- Affiliate Programs

- Syndicated Content

- Scraped Content

1. Manufacturer Product Descriptions

When eCommerce brands use product descriptions supplied by the manufacturer on their own product pages, they are put at an immediate disadvantage. These pages don’t offer any unique value to search engines, so big brand websites with more robust, higher-quality inbound link profiles are ranked higher — even if they have the same descriptions.

The only way to fix this is to embark upon the extensive task of rewriting existing product descriptions. You should also ensure that any new products are launched with completely unique descriptions.

We’ve seen lower-tier eCommerce websites increase organic search traffic by as much as 50–100% by simply rewriting product descriptions for half of the website’s product pages — with no manual link-building efforts.

If your products are very time-sensitive (they come in and out of stock as newer models are released), you should make sure that new product pages are only launched with completely unique descriptions.

You can also fill your product pages with unique content through:

- Multiple photos (preferably unique to your site)

- Enhanced descriptions with detailed insight into product benefits

- Product demonstration videos

- Schema markup

- User-generated reviews

2. Duplicate Content on Staging, Development, or Sandbox Websites

Time and time again, development teams forget, give little consideration to, or simply don’t realize that testing sites can be discovered and indexed by search engines. Fortunately, these situations can be easily fixed through different approaches:

- Adding a “noindex,nofollow” meta robots or X-robots tag to every page on the test site.

- Blocking search engine crawlers from crawling the sites via a “disallow: /” command in the “/robots.txt” file on the test site.

- Password-protecting the test site.

If search engines already have a test website indexed, a combination of these approaches can yield the best results.
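For a Python-based test site served through WSGI, the “noindex,nofollow” header can be added to every response in one place. This is a minimal sketch, assuming a WSGI app; `demo_app` is a hypothetical placeholder for the real staging application, and password protection would be a separate layer.

```python
def noindex_middleware(app):
    """Wrap a WSGI app so every response carries X-Robots-Tag: noindex, nofollow."""

    def wrapped(environ, start_response):
        def start_with_robots(status, headers, exc_info=None):
            # Drop any existing X-Robots-Tag so the staging rule always wins.
            headers = [(k, v) for k, v in headers if k.lower() != "x-robots-tag"]
            headers.append(("X-Robots-Tag", "noindex, nofollow"))
            return start_response(status, headers, exc_info)

        return app(environ, start_with_robots)

    return wrapped


def demo_app(environ, start_response):
    """Hypothetical stand-in for the real staging application."""
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"staging copy of the store"]


staging_app = noindex_middleware(demo_app)
# Serve it locally with the standard library, for example:
# from wsgiref.simple_server import make_server
# make_server("127.0.0.1", 8000, staging_app).serve_forever()
```

Wrapping the whole app, rather than editing templates, means newly added staging pages are covered automatically.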

3. Third-Party Product Feeds (Amazon & Google)

For good reason, eCommerce websites see the value in adding their products to third-party shopping websites. What many marketing managers don’t realize is that this creates duplicate content across these external domains. Even worse, products on third-party websites can end up outranking the original website’s product pages.

Consider this scenario: A product manufacturer with its own website feeds its products to Amazon to increase sales.

But serious SEO problems have just been created. Amazon is one of the most authoritative websites in the world, and its product pages are almost guaranteed to outrank the manufacturer’s website.

Some may view this simply as revenue displacement, but it clearly is going to put an SEO’s job in jeopardy when organic search traffic (and resulting revenue) plummets for the original website.

The solution to this problem is exactly what you would expect: Ensure that product descriptions fed to third-party sites are different from what’s on your brand’s website. We recommend giving the manufacturer description to third-party shopping feeds like Google, and writing a more robust, unique description for your own website.

Bottom line: Always give your own website the edge when it comes to authoritative and robust content.

4. Affiliate Programs

If your site offers an affiliate program, don’t distribute your own site’s product descriptions to your affiliates. Instead, provide affiliates with the same product feeds that are given to other third-party vendors who sell or promote your products.

For maximum ranking potential in search engines, make sure no affiliates or third-party vendors use the same descriptions as your site.

5. Syndicated Content

Many eCommerce brands host blogs to provide more marketable content, and some of them will even syndicate that content out to other websites.

Without proper SEO protocols, this can create external duplicate content. And, if the syndication partner is a more authoritative website, then it’s possible its hosted content will outrank the original page on the eCommerce website.

There are a few different solutions here:

- Ensure that the syndication partner canonicalizes the content to the URL on the eCommerce site that it originated from. Any inbound links to the content on the syndication partner’s website will be applied to the original article on the eCommerce website.

- Ensure that the syndication partner applies a “noindex,follow” meta robots or X-robots tag to the syndicated content on its site.

- Don’t partake in content syndication. Focus on safer channels of traffic growth and brand development.

6. Scraped Content

Sometimes, low-quality scraper sites steal content to generate traffic and drive sales through ads. Furthermore, actual competitors can steal content (even rewritten manufacturer descriptions), which can be a threat to the original source’s visibility and rankability in search engines.

While search engines have gotten much better at identifying these spammy sites and filtering them out of search results, they can still pose a problem.

The best way to handle this is to file a DMCA complaint with Google, or an Intellectual Property Infringement report with Bing, to alert search engines to the problem and ultimately get offending sites removed from search results.

An important caveat: The piece of content must be your own. If you’re using manufacturer product descriptions, you’ll have difficulty convincing search engines that the scraper site is truly violating your copyright. This might be a little easier if the scraper site displays your entire web page on their site, with clear branding of your website.

What is Thin Content for eCommerce?

Thin content applies to any page on your site with little to no content that doesn’t add unique value to the website or the user. It provides terrible user experiences and can get your eCommerce website penalized if the problem grows above the unknown threshold of what Google deems acceptable.

Examples

Here are some examples of scenarios where thin content could occur on an eCommerce site.

Thin/Empty Product Descriptions

As mentioned above, it’s easy for online brands to take shortcuts with product descriptions. Doing so, however, can limit your site’s organic search traffic and conversion potential.

Search engines want to rank the best content for their users, and users want clear explanations to help them with their purchasing decisions. When product pages only include one or two sentences, this helps no one.

The solution: Ensure that your product descriptions are as thorough and detailed as possible. Start by jotting down five to 10 questions a customer might ask about the product, write the answers, and then work them into the product description.

Test or Orphaned Pages

Nearly every website has outlying pages that were published as test pages, forgotten about, and now orphaned on the site. Guess who is still finding them? Search engines.

Sometimes these pages are duplicates of others. Ensure that all published and indexable content on your website is strong and provides value to a user.

Thin Category Pages

Marketers can sometimes get carried away with category creation. Bottom line: If a category is only going to include a few products (or none), then don’t create it.

A category with only one to three products doesn’t provide a great browsing experience. Too many of these thin category pages (coupled with other forms of duplicate content) can lead a site to be penalized.

The same solution applies here: Ensure that your category pages are robust with both unique intro descriptions and sufficient product listings.

Thin & Duplicate Content Tools

Discovering these types of content can be one of the most difficult and time-intensive tasks website owners or marketers undertake.

This final section of our guide will show you how to find duplicate content on your website with a few commonly used tools.

Google Search Console

Many content issues (including thin content issues) can be discovered through Google Search Console, which is free for any website.

Here’s how:

- Coverage: GSC will show a historical graph of the number of pages from your eCommerce site in its index. These pages will be marked “Valid” in the Coverage report. If the graph spikes upward at any point in time, and there was no corresponding increase in content creation on your end, it could be an indication that duplicate or low-quality URLs have made their way into Google’s index.

- URL Parameters: GSC will tell you when it’s having difficulty crawling and indexing your site. This legacy report (not available in Domain Properties) is great for identifying URL parameters (particularly for category pages) that could lead to technically created duplicate URLs. Use search operators (see below) to see if Google has URLs with these parameters in its index, and then determine whether it is duplicate/thin content or not.

- Coverage Errors: If your website’s soft 404 errors have spiked, it could indicate that many low-quality pages have been indexed due to improper 404 error pages being produced. These pages will often display an error message as the only body content. They may also have different URLs, which can cause technical duplicate content.

Moz Site Crawl Tool

Moz offers a Site Crawl tool, which identifies internal duplicate page content, not just duplicate metadata.

Duplicate content is flagged as a “high priority” issue in the Moz Site Crawl tool. Use the tool to export reported pages and make the necessary fixes.

Inflow’s CruftFinder SEO Tool

Inflow’s CruftFinder SEO tool helps you boost the quality of your domain by cleaning up “cruft” (junk URLs and low-quality pages), reducing index bloat, and optimizing your crawl budget.

It’s primarily meant to be a diagnostic tool, so use it during your audit process, especially on older sites or when you’ve recently migrated to a new platform.

Search Query Operators

Using search query operators in Google is one of the most effective ways of identifying duplicate and thin content, especially after potential problems have been identified from Google Search Console.

The following operators are particularly helpful:

site:

This operator shows most URLs from your site indexed by Google (but not necessarily all of them). This is a quick way to gauge whether Google has an excessive number of URLs indexed for your site when compared to the number of URLs included in your sitemap (assuming your sitemap is correctly populated with all of your true content URLs).

Example: site:www.domain.com
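To get the sitemap side of that comparison, you can count the `<loc>` entries in your XML sitemap. A minimal sketch, assuming a single `<urlset>` sitemap (a sitemap index file would need one more level of fetching) and an inline sample standing in for your real file. Keep in mind that the result count Google shows for site: is only an estimate.

```python
import xml.etree.ElementTree as ET
from typing import List

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> List[str]:
    """Return every <loc> in a single <urlset> sitemap (not a sitemap index)."""
    root = ET.fromstring(sitemap_xml)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


# Hypothetical sample; load your real sitemap file's contents instead.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.domain.com/</loc></url>
  <url><loc>https://www.domain.com/tools/flashlights/</loc></url>
</urlset>"""

urls = sitemap_urls(SAMPLE_SITEMAP)
print(len(urls), "URLs in the sitemap")
```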

inurl:

Use this operator in conjunction with the site: operator to discover if URLs with particular parameters are indexed by Google. Remember: Potentially harmful URL parameters (if they are creating duplicate content and indexed by Google) can be identified in the URL Parameters section of GSC. Use this operator to discover if Google has them indexed.

Example: site:www.domain.com inurl:?price=

This operator can also be used in “negative” fashion to identify any non-www URLs indexed by Google.

Example: site:domain.com -inurl:www

intitle:

This operator displays all URLs indexed by Google with specific words in the meta title tag. This can help identify duplicates of a particular page, such as a product page that may also have a “review page” indexed by Google.

Example: site:www.domain.com intitle:Maglite LED XL200
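When Google Search Console flags several suspect parameters, generating the operator queries in bulk saves retyping them. A small sketch, assuming the parameter names (`price`, `dir`) come from your own GSC review; the helper names are hypothetical, and the queries mirror the examples above.

```python
from typing import Iterable, List
from urllib.parse import urlencode


def operator_queries(domain: str, params: Iterable[str]) -> List[str]:
    """Build site:/inurl: checks for a domain and a list of suspect URL parameters."""
    bare = domain[4:] if domain.startswith("www.") else domain
    queries = [f"site:{domain}", f"site:{bare} -inurl:www"]
    queries += [f"site:{domain} inurl:?{param}=" for param in params]
    return queries


def google_search_url(query: str) -> str:
    """Turn an operator query into a Google search URL you can open or share."""
    return "https://www.google.com/search?" + urlencode({"q": query})


for query in operator_queries("www.domain.com", ["price", "dir"]):
    print(google_search_url(query))
```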

Plagiarism, Crawler & Duplicate Content Tools

A number of helpful third-party tools can identify duplicate and low-quality content.

Some of our favorite tools include:

Copyscape

This tool is particularly useful at identifying external “editorial” duplicate content.

Copyscape can look at specific content, specific pages, or a batch of URLs from a website and compare the content to the rest of Google’s index, looking for instances of plagiarism. For eCommerce brands, this can identify the worst-offending product pages when it comes to copied-and-pasted manufacturer product descriptions.

Export the data as a CSV file and sort by risk score to quickly prioritize pages with the most duplicate content.

Screaming Frog

Screaming Frog is very popular with advanced SEO professionals. It crawls a website and identifies potential technical issues that could exist with duplicate content, improper redirects, error messages, and more.

Exporting a crawl and segmenting issues can provide additional insight not provided by GSC.

Siteliner

Siteliner is a quick way to identify pages on your eCommerce site with the most internal duplicate content. This tool looks at how much unique content exists on each particular page in comparison to the repeated elements of each web page (header, sidebar, footer, etc.).

It’s especially helpful at finding thin content pages.
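Siteliner’s own method isn’t described here, but the idea of separating a page’s unique text from repeated elements can be sketched: treat any text block that appears on more than one page as repeated, then measure what share of each page’s words is left. This assumes you have already extracted each page’s text into blocks (paragraphs); the `pages` sample is hypothetical.

```python
from collections import Counter
from typing import Dict, List


def unique_content_share(pages: Dict[str, List[str]]) -> Dict[str, float]:
    """Map each URL to the share of its words found on no other page.

    A block appearing on more than one page is treated as repeated
    (header, sidebar, footer, or copied copy).
    """
    # Count each block once per page, so repeats within one page don't count.
    block_counts = Counter(block for blocks in pages.values() for block in set(blocks))
    shares = {}
    for url, blocks in pages.items():
        total = sum(len(b.split()) for b in blocks)
        unique = sum(len(b.split()) for b in blocks if block_counts[b] == 1)
        shares[url] = unique / total if total else 0.0
    return shares


pages = {
    "/a": ["Free shipping on orders", "Bright LED flashlight with long battery life"],
    "/b": ["Free shipping on orders", "Compact"],
}
for url, share in sorted(unique_content_share(pages).items(), key=lambda kv: kv[1]):
    print(f"{url}: {share:.0%} unique")
```

Sorting by the lowest share puts the thinnest pages at the top of your review list.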

Getting Started

While the tools and technical tips recommended above can aid in identifying duplicate, thin, and low-quality content, nothing compares to years of experience with duplicate content issues and solutions.

Good news: As you identify these specific issues on your website, you’ll develop a wealth of knowledge that you can use to keep cleaning up these issues and to prevent them in the future.

Start crawling and fixing today, and you’ll thank yourself for it tomorrow.

Don’t have the time or resources to tackle this SEO project? Rely on our team of experts to help clean up your website. Request a free proposal from our team anytime.

Hi,

It’s a good article. My question is how to handle the following situation.

Let’s say I have a product:

Sony Headphones XYZ (over-ear, water- and dust-proof, and durable)

And let’s say I write the following posts about headphones:

1. Sports Headphone

2. Durable Headphones

3. Over Ear Headphone

Since product XYZ qualifies for all three posts, I would like to mention it in each of them. So I have the following questions:

1. Would it create duplicate content?

2. How should I deal with it?

Note: kindly reply to my email too.

Best Regards

That isn’t the sort of “duplicate content” we’d worry about. That is normal and expected use.

Hi!

Thank you so much for this guide

According to the study conducted by Moz, Google’s display titles max out (currently) at 600 pixels: https://moz.com/learn/seo/title-tag

In 2020, the meta description length is 920 pixels. This equates to 158 characters on average. Rather than cutting to characters, Google truncates long descriptions to the nearest whole word and adds an ellipsis.

Use a tool like SERP Pure optimization tool https://www.serpure.com to preview meta titles and meta descriptions and keep them under the limit.

Thank you and please keep up the great stuff!:)

Thanks for the great article. I have a quick question. While doing an SEO audit, I received a warning stating that all the product pages on my eCommerce website are orphaned pages. How do I fix this issue specifically for an eCommerce website with 1,000 products? Is there a way to interlink product pages so that Google’s bots don’t tag them as orphaned?

It sounds like perhaps your internal linking is not in place to reach paginated category page results? I would also look at category structure, spreading a bit wider there (adding more cats and sub cats) could help provide the internal links necessary to your products. You should not orphan your product pages!

Hi,

What if you have a business that serves multiple locations, and you have duplicate content for each location EXCEPT for the location names?

So, let’s say you have a 500 word page about “Real Estate Law in Kansas City,” and then a nearly identical page (on another subdirectory or subdomain) on “Real Estate Law in St. Louis”. Then you change any and all geographic references.

Is this duplicate content? Will you be penalized?

Jonathan,

Our advice would be to make it unique content. For example, how are the laws different in KC vs. STL with regard to real estate? Are you a member of a bar association or other professional group in each?

Also, you won’t be penalized per se, but you might not see your rankings go in the right direction either.

I have a question

What if a client has two websites with the same domain name (websitename) on two different ccTLDs, like .com and .com.au?

These two websites are duplicates of each other.

Also, they are selling products on popular third-party websites, so the product descriptions are the same everywhere, which is duplicate content.

I plan to do separate keyword research and write on page content for .com and .com.au version of website landing pages.

I’m also going to set the target location separately in Webmaster Tools.

But what about product descriptions? There are a lot of products, and the same description is used on both websites as well as on third-party sites.

Adding in-depth content for products on our own website is an option, but with two separate websites, what would be the right approach?

Looking for help in this case.

Thanks

This is great stuff!

Hello Dan Kem,

I want to know whether post excerpts on my site’s homepage count as duplicate content.

Does it hurt SEO?

Thank you!

Hello Harley,

If that is the “only” content on your homepage it is not good for SEO. However, if your homepage is sort of like a blog home page where you have excerpts from recent posts, that is fine so long as you have other content unique to that page.

OK, so I understand the importance of original, unique content. I also understand the tactic of keeping any ‘duplicate’ content hidden from search engines.

However, I have several ecommerce clients who are selling third party products which are ALSO sold by other merchants as well, so the product titles have been identified as ‘duplicate’. But to hide them defeats the purpose of having the products on the website at all.

Furthermore, some of the products are very basic and very similar in nature (eg. a ‘rose gold cake topper’ versus a ‘glitter cake topper’). So how reasonable is it to expect the client to generate original, unique content for each? But again, to hide the products makes no sense either.

What to do…?

It’s as simple as this. If your client sells the same products as everyone else who sources their stock from that distributor or manufacturer, what differentiates their page from everyone else’s? If you were Google, how would you know which ones to show in the search results if there are dozens or hundreds of virtually identical results?

If the client doesn’t care how those pages rank, then they shouldn’t be in the index. If the client does care, then they need unique titles and product descriptions.

I sympathize with the issue of writing copy for products that are very similar. We handle this in several different ways. One option is to combine the products on one page and allow the visitor to select their option via a drop-down. Sometimes that doesn’t make sense for the situation, so here are some other options. You could choose one version of the product to be “canonical” and point the rel="canonical" tag on the other product pages to that one. This way, you only have to write unique copy for one of them. Of course, for some clients it makes sense to get creative and hire a copywriter to highlight the small differences between the versions in unique copy on each product page.
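To make the canonical option a little more concrete, here is a minimal Python sketch that produces the `<link rel="canonical">` element each variant page would carry once you've picked the version that gets the unique copy. The example.com URLs and both function names are hypothetical; in practice your eCommerce platform or theme template would print this tag in each page's `<head>`.

```python
from html import escape


def canonical_link_tag(canonical_url: str) -> str:
    """Build the <link rel="canonical"> element for a page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url, quote=True)}">'


def canonical_tags_for_variants(variant_urls: list[str], canonical_url: str) -> dict[str, str]:
    """Map every variant URL to the canonical tag it should output (hypothetical helper).

    The chosen canonical page gets a self-referencing tag; every other
    variant points to the chosen page.
    """
    if canonical_url not in variant_urls:
        raise ValueError("canonical_url must be one of the variant URLs")
    tag = canonical_link_tag(canonical_url)
    return {url: tag for url in variant_urls}


if __name__ == "__main__":
    variants = [
        "https://www.example.com/cake-toppers/rose-gold",  # hypothetical URLs
        "https://www.example.com/cake-toppers/glitter",
    ]
    for url, tag in canonical_tags_for_variants(variants, variants[0]).items():
        print(url, "->", tag)
```

Keep in mind that rel="canonical" is a hint rather than a directive, so the variants should genuinely be close to identical for search engines to honor it.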

I work with a reseller website that resells market research reports published by market research companies. Our website has 3 lakh (300,000) reports from different publishers. The report/product descriptions, which are usually provided by the publishers, are duplicate content. How can we solve this issue?

I found this article extremely useful! You’ve highlighted for me several areas that I need to improve upon on my website. Thank you so much!

I’ve just read a book that I didn’t intend to. Thank you very much!

While trying to find a solution to my “problem”, I stumbled upon this great article. I’m still trying to understand it all, so I hope you can answer this question: Our real estate board issues a monthly market update, which I post on my site. Can I use a canonical URL pointing to the real estate board, even though the board only publishes it on their site as a PDF? Or how should I go about this, since many other agents do the same (without rewriting)?

Hi Dan, great article, mate 🙂 Have there been any updates to this since last year, given the changes happening with Google’s algorithm updates?

Hi David, yes there was recently a “quality” update launched by Google. More info here, here and here.

Glenn Gabe is seeing “thin content” as a big culprit. We don’t know anything directly from the source (Google), but the content quality issues appear to be similar to Panda. Everything in this article still applies. You only want to have high quality (non-duplicative, deep and authoritative) content indexed in Google. Hope this helps!

Excellent article. I have a question. I am building an ecommerce site with more than 1,000 products. I simply do not have the time or the budget to write unique product descriptions and/or specifications for each product. I realise that if I did that I would certainly be in good standing with Google, but realistically this is almost impossible. Do you have any suggestions?

This is a common scenario, David. It reminds me of a mantra that the Chief Digital Officer of a publishing company I previously worked for had about eCommerce sites: “If you can’t write a unique description for this product tailored to our audience, then don’t put it on the store (website).”

So I would first suggest revisiting your belief that you don’t have the time or budget for unique product descriptions, and consider how to make the investment. Your product pages and category pages are the foundation of your store, and their quality will set the foundation for your success online. There are numerous copywriting services that can help you scale the copywriting. Consider checking out Copypress and more services with this Google query. You could also hire an intern or two, or even family members, to rewrite the duplicate product descriptions. If you have a store with an unusually large number of products (e.g., 10,000 or more), then consider rewriting and improving the top 10-50% of products that get the most organic search traffic (improve what’s already working to make it work better).

As a last resort, you could set your product pages to “noindex,follow” (via a meta robots tag) if they don’t get any organic search traffic due to duplicate content and you are unable to improve them for various reasons. Google’s own John Mueller has stated that if you don’t plan to improve low-quality content, you should either delete it or set it to noindex until you can improve it. In that case, you would focus your copywriting efforts on improving your category pages (optimizing meta titles, meta descriptions, and 100-word on-page intro descriptions for target keywords) and on building out your strategic content marketing efforts (blog posts, video, infographics, etc.) to create content about related topics people are searching for and increase your search engine visibility that way. Hope this helps!
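The triage described above (rewrite the top slice of products by organic traffic, noindex the ones with none) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: you've already exported organic sessions per product URL from your analytics tool into a dict, and the function name, the `rewrite_fraction` parameter, and the sample URLs are all hypothetical.

```python
import math

# The meta robots tag from the reply above; it goes in the <head> of each
# page you decide to keep out of the index while still letting links be followed.
NOINDEX_TAG = '<meta name="robots" content="noindex,follow">'


def triage_products(organic_sessions: dict[str, int], rewrite_fraction: float = 0.2):
    """Split product URLs into a rewrite list and a noindex list (hypothetical helper).

    - rewrite: the top `rewrite_fraction` of all products by organic sessions,
      limited to products that actually receive organic traffic
    - noindex: products with zero organic sessions
    """
    if not 0 < rewrite_fraction <= 1:
        raise ValueError("rewrite_fraction must be in (0, 1]")

    # Highest-traffic products first; sorted() is stable, so ties keep input order.
    ranked = sorted(organic_sessions.items(), key=lambda item: item[1], reverse=True)

    n_rewrite = math.ceil(len(ranked) * rewrite_fraction)
    with_traffic = [url for url, sessions in ranked if sessions > 0]
    rewrite = with_traffic[:n_rewrite]
    noindex = [url for url, sessions in ranked if sessions == 0]
    return rewrite, noindex


if __name__ == "__main__":
    sessions = {"/p/a": 100, "/p/b": 0, "/p/c": 40, "/p/d": 5}  # hypothetical data
    rewrite, noindex = triage_products(sessions, rewrite_fraction=0.5)
    print("Rewrite first:", rewrite)
    for url in noindex:
        print(url, "->", NOINDEX_TAG)
```

Zero traffic alone doesn't prove a page is a duplicate, so it's worth reviewing the noindex list by hand before the tag goes live.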

Excellent post, thanks for putting this info together in a clean and easy to read format. Bookmark’d for later use. 🙂

I’ve been looking for creative ways to check for duplicate content on very large websites (more than 100,000 pages). Siteliner and Copyscape are too expensive to be worthwhile at that scale. Any suggestions?

Hello Dan,

There are affordable tools for checking duplicate content internally. For example, you could use Screaming Frog to crawl your site and report on duplicate titles, descriptions, and other issues that typically have technical causes. Other types of internal duplicate content (such as copied-and-pasted text or the same product description on different products) are more difficult to catch without a full content audit.
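If you already have crawl data, a rough first pass at both kinds of internal duplication can be scripted. The minimal Python sketch below assumes you've exported URL-to-text pairs (titles, or extracted body copy) into a dict; the export step and the function names are assumptions, not features of any particular crawler. It groups exact duplicates after normalizing case and whitespace, and flags near-duplicates using word-shingle Jaccard similarity.

```python
import re
from collections import defaultdict
from itertools import combinations


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences don't hide duplicates."""
    return re.sub(r"\s+", " ", text).strip().lower()


def exact_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose normalized text (e.g. a title tag) is identical."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, text in pages.items():
        groups[normalize(text)].append(url)
    return [urls for urls in groups.values() if len(urls) > 1]


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping k-word sequences in the text."""
    words = normalize(text).split()
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Share of shingles the two texts have in common."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicates(pages: dict[str, str], threshold: float = 0.8, k: int = 3):
    """Return (url_a, url_b, score) for every pair at or above the threshold."""
    sigs = {url: shingles(text, k) for url, text in pages.items()}
    pairs = []
    for u, v in combinations(sigs, 2):
        score = jaccard(sigs[u], sigs[v])
        if score >= threshold:
            pairs.append((u, v, score))
    return pairs
```

The pairwise comparison grows with the square of the page count, so it suits a sample or a single category. On a site with 100,000+ pages you'd want an approximate technique such as MinHash with locality-sensitive hashing instead.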

With regard to external duplication, we typically start with a Copyscape check of 1,000 pages from various sections of the site, which gives us a general idea as to the scale and cause of external duplicate content problems. From there it tends to be an issue of fixing the problem more than identifying more pages with the symptom.

Thanks for this interesting blog post Dan. Greetings from India!

I’m currently up to my eyeballs in duplicate content so this post is a serious life saver!

Thanks for sharing!

When did robots.txt start supporting wild card entries?

> Note also that globbing and regular expression are not supported in either the User-agent or Disallow lines. The ‘*’ in the User-agent field is a special value meaning “any robot”. Specifically, you cannot have lines like “User-agent: *bot*”, “Disallow: /tmp/*” or “Disallow: *.gif”.

Source: https://www.robotstxt.org/robotstxt.html

James, Google has been obeying wildcard directives in the robots.txt file for several years. You can verify this by using them in the robots.txt testing tool in Google Webmaster Tools. As for the syntax defined by other organizations, wildcards may not technically be supported there. As SEOs, we tend to think more about how syntax is treated by search engines, and Google’s Developer Help page documents the wildcard syntax it supports.
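For anyone curious how those wildcard rules behave, here is a minimal Python sketch of the matching Google documents: `*` matches any sequence of characters, a trailing `$` anchors the pattern to the end of the URL, the longest matching rule wins, and Allow beats Disallow when matching rules are the same length. It is an illustration under those assumptions, not Google's actual parser, and the function names and sample rules are hypothetical.

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a regex that matches from the start of the path."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape everything literally, then let each '*' match any run of characters.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Decide whether `path` may be crawled under (directive, pattern) rules for one user agent."""
    best = None  # (pattern length, is_allow) of the winning rule so far
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty "Disallow:" blocks nothing
        if pattern_to_regex(pattern).match(path):
            candidate = (len(pattern), directive.lower() == "allow")
            # Longer pattern wins; on equal length, True (allow) > False (disallow).
            if best is None or candidate > best:
                best = candidate
    return True if best is None else best[1]


if __name__ == "__main__":
    rules = [
        ("Disallow", "/*.gif$"),
        ("Disallow", "/tmp/"),
        ("Allow", "/tmp/public/"),
        ("Disallow", "/*?sort="),  # e.g. sorted category URLs that duplicate the default view
    ]
    for path in ["/images/logo.gif", "/tmp/public/page.html", "/shoes?sort=price", "/shoes"]:
        print(path, "allowed" if is_allowed(rules, path) else "blocked")
```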

Great article indeed, Dan. It’s a wonderful resource for ecommerce site owners and staff at SEO companies. A small correction, though: the Panda algorithm was launched in February 2011, not 2012. Thanks again.

Thanks for the typo fix Vivek. I will update Dan’s post now. We’re glad you enjoyed it and are proud to have him on our team.

Great post Dan, definitely worth the read and worth sharing.

Definitely the most thorough yet concise article on duplicate content that I have read. Bookmarked and shared.

Superb in depth post Dan – Excellent resource.

That’s an awesome list and an even better checklist for all webmasters in eCommerce. Thank you very much for sharing! Greetings from Switzerland!

Dan this is an Epic post. It is tough to cover so much ground at once without sacrificing depth, but you’ve managed to strike that balance here. Good stuff!

Great in-depth post, Thanks!